Hello @Jogalekar, Mandar,

As @Alberto Morillo mentioned, the change you made causes the table to be created in the user database (userDB) instead of in tempDB, as it was previously. If I were you, I would try one of the following:

- Scale the database up to a higher tier for the ingestion and scale it back down afterwards (@Alberto Morillo also suggested this). The downside is that this will increase your Azure bill.

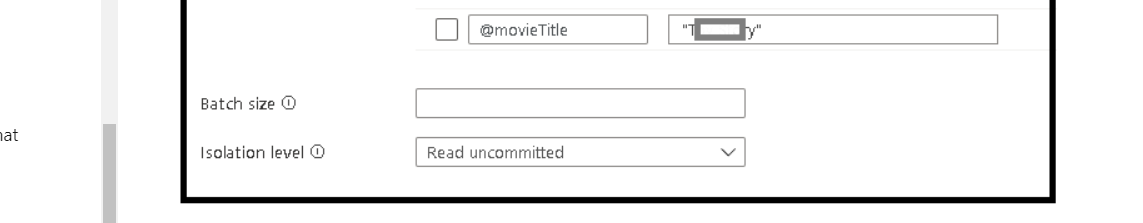

- Set the batch size. The challenge is finding the right batch size, and this approach will be comparatively slower than the one above.
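To illustrate the batch-size trade-off, here is a generic Python sketch. It is not the Azure or Data Factory API: `batched_rows`, `ingest`, and `write_batch` are hypothetical names, and `write_batch` stands in for whatever sink actually performs one insert or bulk call per batch. Smaller batches mean each write transaction handles fewer rows, but they also mean more round trips, which is why this option tends to be slower than scaling up.

```python
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")


def batched_rows(rows: Iterable[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most batch_size rows."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    batch: List[T] = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch  # final, partially filled batch


def ingest(rows: Iterable[T], batch_size: int,
           write_batch: Callable[[List[T]], None]) -> int:
    """Send rows to a (hypothetical) sink in batches; return the number of calls."""
    calls = 0
    for batch in batched_rows(rows, batch_size):
        write_batch(batch)  # assumption: one insert/bulk call per batch
        calls += 1
    return calls
```

For example, `ingest(range(10), 4, print)` makes three calls with 4, 4 and 2 rows. Halving the batch size roughly doubles the number of calls, so tuning it means balancing per-batch load against total round trips.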

Thanks

Himanshu

Please consider clicking "Accept Answer" and "Up-vote" on the post that helps you, as it can benefit other community members.