Hi,

I'm running into an issue where datetime values are not preserved in datasets I have uploaded to AzureML.

The issue can be reproduced with the following steps:

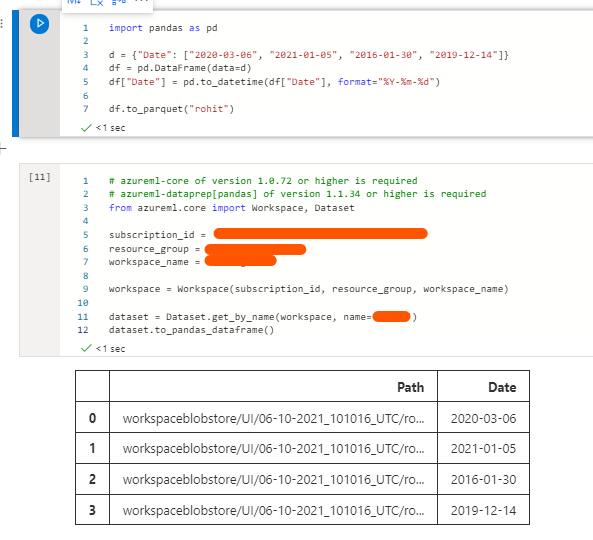

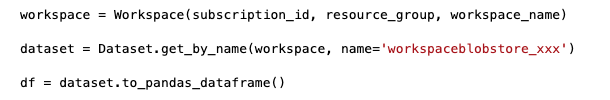

- Create a pandas DataFrame with a column of date strings and parse them into datetimes:

  ```python
  import pandas as pd

  d = {"Date": ["2020-03-06", "2021-01-05", "2016-01-30", "2019-12-14"]}
  df = pd.DataFrame(data=d)
  df["Date"] = pd.to_datetime(df["Date"], format="%Y-%m-%d")
  ```

- Save the DataFrame as a `.parquet` file (e.g. `filename.parquet`)

- Upload the file to Azure Blob Storage

- Create a Tabular Dataset object from the uploaded file:

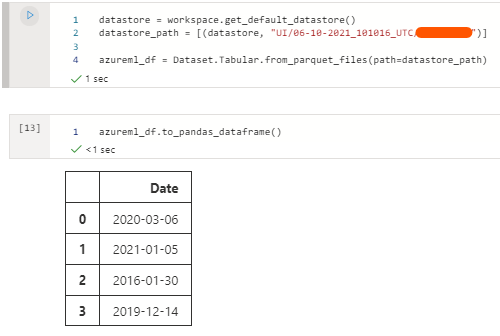

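```python
from azureml.core import Dataset, Workspace

workspace = Workspace.from_config()  # assumes a local config.json for the workspace
datastore = workspace.get_default_datastore()
datastore_path = [(datastore, "filename.parquet")]
azureml_df = Dataset.Tabular.from_parquet_files(path=datastore_path)
```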
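For reference when comparing against the AzureML output, the sketch below (pandas only, no AzureML calls) checks what the locally built DataFrame actually holds: a `datetime64[ns]` column whose int64 values are nanoseconds since the UNIX epoch. The explicit `.astype("datetime64[ns]")` is an addition not in the original steps; it is there on the assumption that newer pandas releases may infer a coarser unit when parsing strings.

```python
import pandas as pd

# Rebuild the DataFrame from the first reproduction step.
d = {"Date": ["2020-03-06", "2021-01-05", "2016-01-30", "2019-12-14"]}
df = pd.DataFrame(data=d)
# Pin nanosecond resolution explicitly (assumption: newer pandas may infer another unit).
df["Date"] = pd.to_datetime(df["Date"], format="%Y-%m-%d").astype("datetime64[ns]")

print(df["Date"].dtype)  # datetime64[ns]

# The underlying int64 values are nanoseconds since the UNIX epoch.
ns = df["Date"].to_numpy().astype("int64")
print(ns[0])            # 1583452800000000000
print(len(str(ns[0])))  # 19
```

Every date from 2001 onward has a 10-digit seconds count, so its nanosecond count always has 19 digits and ends in at least nine zeros for midnight timestamps like these.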

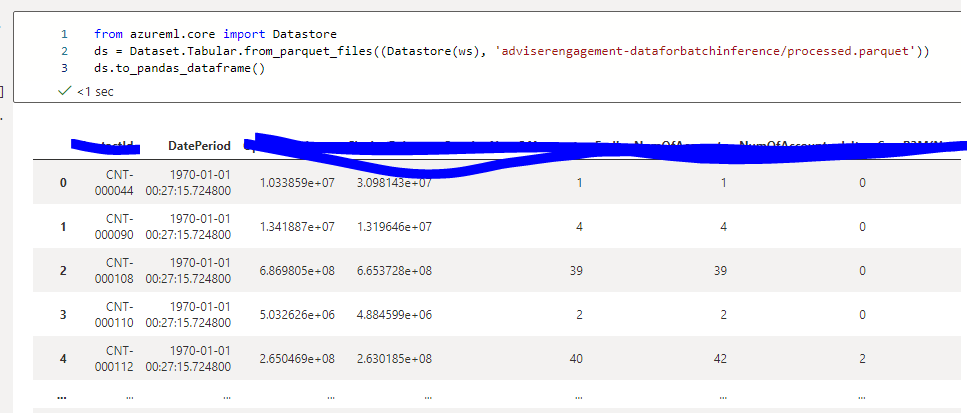

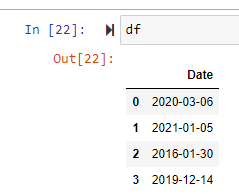

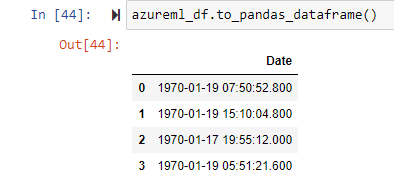

Printing the dataframe results in the following:

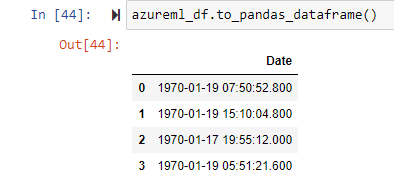

The datetime values are now different.

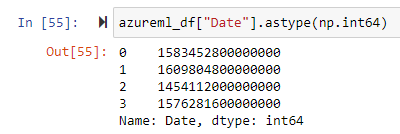

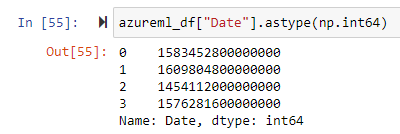

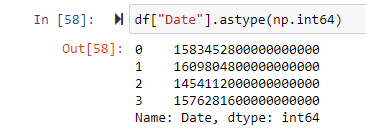

To investigate further, we can cast the datetime to int:

which gives us a 15 digit number.

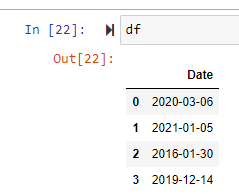

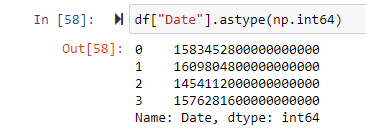

We also cast the original df to int:

which instead gives us an 18 digit number.

This 18 digit number represents the number of nanoseconds since UNIX epoch. Three trailing zeroes are stripped from the number when creating the Tabular Dataset object through azureml-sdk, resulting in an incorrect datetime being read. Keep in mind that if you were to download the parquet from Azure Blob, the values are still intact, meaning the issue is with AzureML and potentially the Dataset method, from_parquet_files. A simple workaround would be to multiply this column by 1000 then convert it back to datetime again but I would like to know if there's something I'm missing in between reading the parquet from AzureML or if the problem is on Azure's side.
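The multiply-by-1000 workaround can be sketched in pandas alone. Since the AzureML output can't be reproduced without a workspace, the snippet simulates it by integer-dividing the correct nanosecond values by 1000 (the "three trailing zeros stripped" observation) and reading the result back as nanoseconds, which pushes every date into January 1970. `restore_nanoseconds` is a hypothetical helper name, and it assumes every value in the column was truncated in the same way.

```python
import pandas as pd


def restore_nanoseconds(col: pd.Series) -> pd.Series:
    """Hypothetical helper: undo a factor-of-1000 truncation of datetime values.

    Assumes every value in `col` is a microsecond count that was read as nanoseconds.
    """
    ints = col.astype("datetime64[ns]").to_numpy().astype("int64")
    # Multiply by 1000 (the workaround from the question) and rebuild the datetimes.
    return pd.to_datetime(pd.Series(ints * 1000, index=col.index, name=col.name), unit="ns")


# Correct values, as in the original DataFrame.
original = pd.to_datetime(
    pd.Series(["2020-03-06", "2021-01-05", "2016-01-30", "2019-12-14"], name="Date"),
    format="%Y-%m-%d",
).astype("datetime64[ns]")

# Simulated misread column: three trailing zeros dropped, then read back as nanoseconds.
misread = pd.Series(
    pd.to_datetime(original.to_numpy().astype("int64") // 1000, unit="ns"), name="Date"
)
print(misread.dt.year.unique())  # [1970]

fixed = restore_nanoseconds(misread)
print(fixed.equals(original))  # True
```

An equivalent alternative would be `pd.to_datetime(ints, unit="us")`, which interprets the truncated integers as microseconds directly rather than rescaling them first.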

Regards,

Muhammad