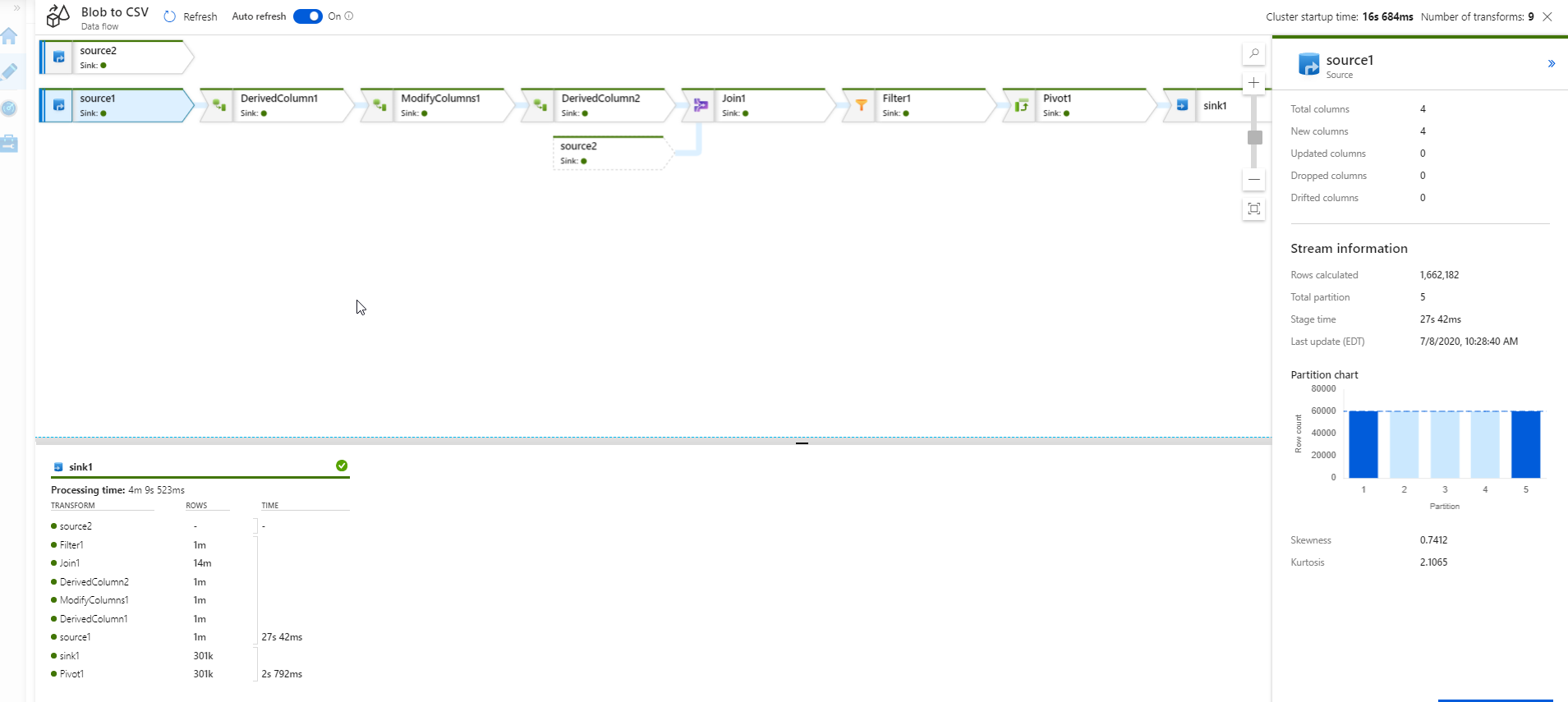

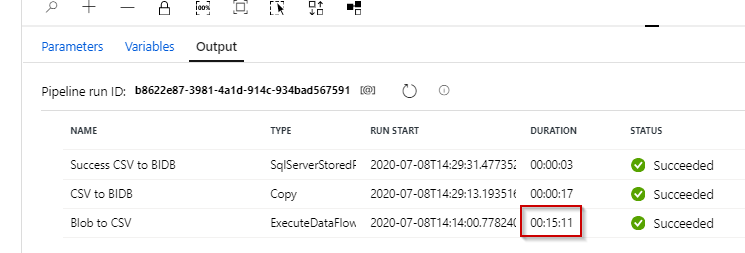

Click on the Sink in your data flow in the monitoring UI. The right-hand panel will show the total processing time at the bottom.

Now look at the "Stage time" in that same fly-in panel. The difference between those two durations (total processing time minus stage time) is how long it took Databricks to write your data to your destination linked service and perform any post-execution clean-up.
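To make the arithmetic concrete, here is a minimal Python sketch of that subtraction. The function name `sink_write_time` and the two durations are made up for illustration; they are not from a real run, and you would substitute the values shown in your own monitoring panel.

```python
from datetime import timedelta


def sink_write_time(total_processing: timedelta, stage_time: timedelta) -> timedelta:
    """Return the time spent writing to the destination plus post-execution clean-up."""
    if stage_time > total_processing:
        # Assumption: stage time is part of the sink's total processing time.
        raise ValueError("stage time should not exceed total processing time")
    return total_processing - stage_time


# Hypothetical values read off the sink's monitoring panel.
total = timedelta(minutes=12, seconds=30)  # total processing time
stage = timedelta(minutes=4, seconds=10)   # "Stage time"

print(sink_write_time(total, stage))  # 0:08:20
```

If the result is a large share of the total, the time is going into the write and clean-up rather than into the transformation logic itself.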

And, yes, before you ask ... we can make this better and clearer and we are working on it! :)

Quick question: are you running any post-execution scripts or writing the output to a single file in your sink configuration?