MPI doesn't use InfiniBand with CycleCloud

I created a two-node cluster with the CycleCloud GUI provided by Microsoft. I used the Slurm scheduler (version 20.11.4-1), HC44rs instances for the two compute nodes, and a D12_v2 for the master/scheduler node. All nodes run the same OpenLogic:CentOS-HPC:8_1:latest image (CentOS 8).

I then ran a simple MPI ping-pong program to check the connection speed. I only got around 900 Mbps, so I assume the nodes are not communicating over the provided InfiniBand connection. (For a message size of 8 MiB I measured a latency, i.e. a round-trip time per iteration, of around 0.156 s.) To run the program I used `sbatch -N2 --wrap="mpirun -n 2 my_program"`. I also tried some `-mca` options, but none of them solved the problem.

Based on these two articles by Microsoft, I assumed that the InfiniBand drivers are installed and ready to be used with any MPI library (https://learn.microsoft.com/en-us/azure/virtual-machines/workloads/hpc/enable-infiniband, https://learn.microsoft.com/en-us/azure/virtual-machines/workloads/hpc/setup-mpi). As suggested in the MPI setup article, I first tried HPC-X and then Open MPI.

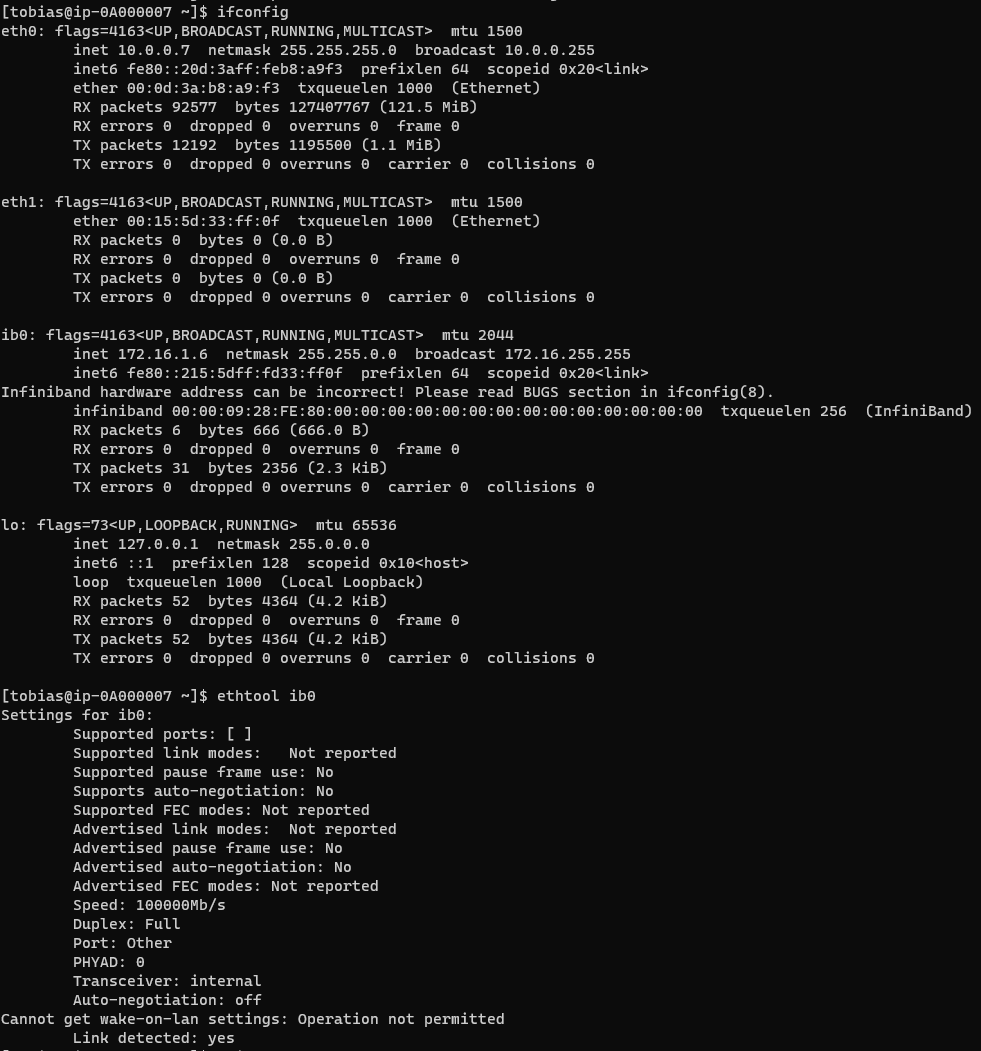

I also ran ifconfig to ensure that the Infiniband interface actaully exists and with ethtool I checked the speed it advertises. The following screenshot shows the results taken on one of the nodes.

This is the code I used:

```c
#include <mpi.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

// Ping-pong between rank 0 and rank 1 for about 5 minutes.
// Rank 0 writes one round-trip time (in seconds) per line to `output`.
// `repetitions` is only written to the header; the loop is bounded by time.
int ping_pong(long int message_size, int repetitions, FILE *output){
    int my_rank, num_procs;
    MPI_Init(NULL, NULL);
    MPI_Comm_size(MPI_COMM_WORLD, &num_procs);
    MPI_Comm_rank(MPI_COMM_WORLD, &my_rank);
    MPI_Status stat;

    // The send/receive pattern below assumes exactly two ranks.
    if(num_procs != 2){
        if(my_rank == 0){
            fprintf(stderr, "ping_pong needs exactly 2 ranks, got %d\n", num_procs);
        }
        MPI_Finalize();
        return 1;
    }

    // allocate buffer and set all bytes to zero
    int count = (int)(message_size * sizeof(uint8_t)); // MPI counts are ints
    uint8_t *buf = malloc((size_t)count);
    if(buf == NULL){
        MPI_Abort(MPI_COMM_WORLD, 1);
        return 1;
    }
    memset(buf, 0, (size_t)count);

    int tag1 = 42;             // ping: rank 0 -> rank 1
    int tag2 = 43;             // pong: rank 1 -> rank 0
    int stop_tag = 0;          // tells rank 1 to leave the loop
    double target_time = 5*60; // 5 minutes (in secs)
    double my_time = 0;

    // write header of csv file
    if(my_rank == 0){
        fprintf(output, "stress test - #repetitions: %d- message size [B]: %ld\n", repetitions, message_size);
    }

    double start, end, elapsed_time;
    int running = 1;
    int iter = 0;

    while(running){
        iter++;
        if(iter % 1000 == 0){
            printf("rank: %d, time: %f\n", my_rank, my_time);
            fflush(stdout);
        }

        start = MPI_Wtime();
        if(my_rank == 0){
            MPI_Send(buf, count, MPI_UINT8_T, 1, tag1, MPI_COMM_WORLD);
            MPI_Recv(buf, count, MPI_UINT8_T, 1, tag2, MPI_COMM_WORLD, &stat);
        } else{ // rank == 1
            MPI_Recv(buf, count, MPI_UINT8_T, 0, MPI_ANY_TAG, MPI_COMM_WORLD, &stat);
            if(stat.MPI_TAG == stop_tag){
                running = 0;
            } else{
                MPI_Send(buf, count, MPI_UINT8_T, 0, tag2, MPI_COMM_WORLD);
            }
        }
        end = MPI_Wtime();

        elapsed_time = end - start; // on rank 0 this is one full round trip
        my_time += elapsed_time;

        if(my_rank == 0){
            fprintf(output, "%f\n", elapsed_time);
            if(my_time > target_time){
                running = 0;
                MPI_Send(buf, count, MPI_UINT8_T, 1, stop_tag, MPI_COMM_WORLD);
            }
        }
    }

    free(buf);
    MPI_Finalize();
    return 0;
}
```

Any help is appreciated. Thanks in advance! If you need any further information or clarification, I'd be happy to provide it.