Hello @Shivank.Agarwal ,

Here are the steps to access a Synapse database from a Spark notebook, with the database username and password stored as secrets in Azure Key Vault:

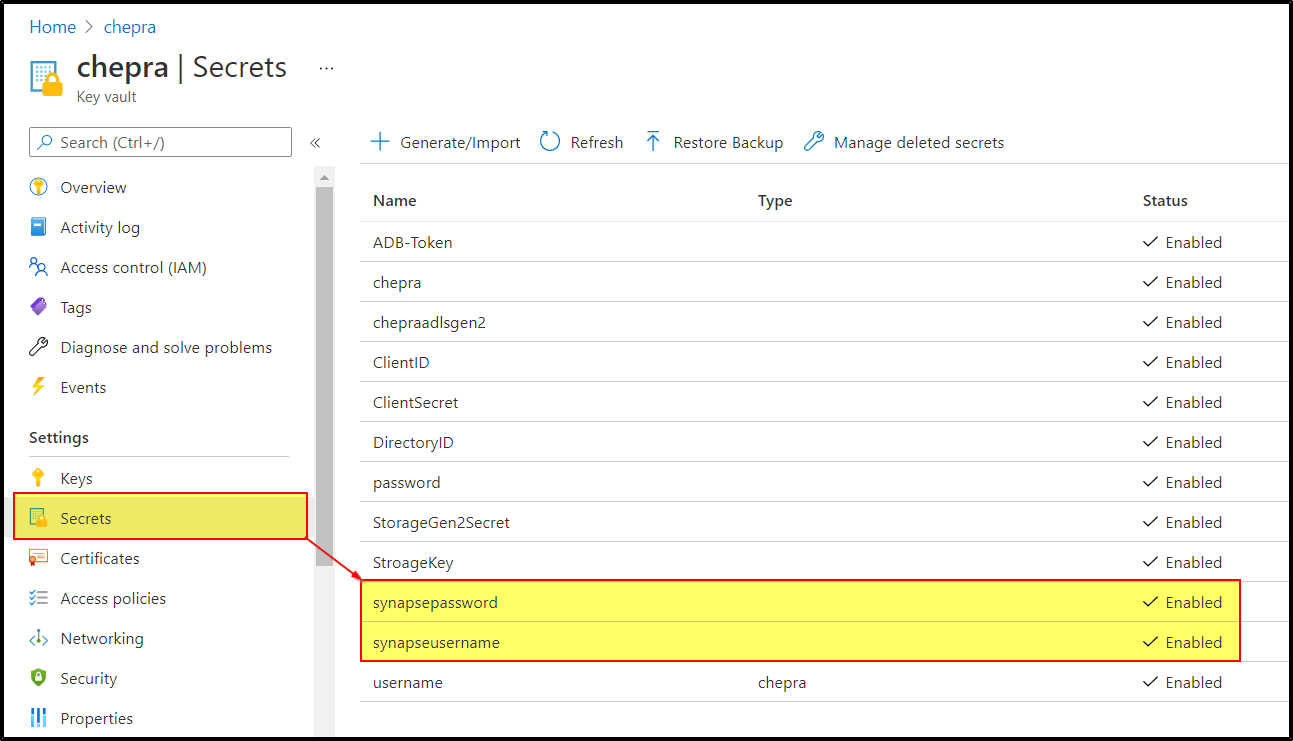

Step 1: Create the Synapse username and password as secrets in Azure Key Vault.

Note: I created the username secret as synapseusername and the password secret as synapsepassword. My Azure Key Vault is named chepra.

Step 2: Use the TokenLibrary.getSecret function to read the secrets from Key Vault in your notebook.

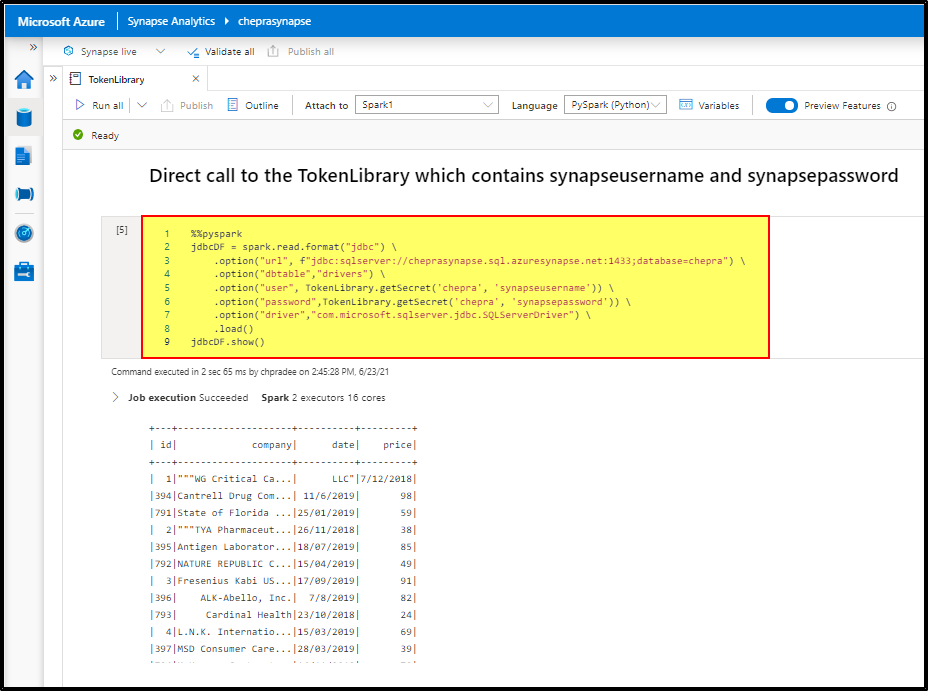

Method 1: Call TokenLibrary directly in the connection options to fetch synapseusername and synapsepassword from Azure Key Vault.

Note: The call has the form TokenLibrary.getSecret("AzureKeyvaultName", "SecretName").

    %%pyspark
    jdbcDF = spark.read.format("jdbc") \
        .option("url", "jdbc:sqlserver://cheprasynapse.sql.azuresynapse.net:1433;database=chepra") \
        .option("dbtable", "drivers") \
        .option("user", TokenLibrary.getSecret('chepra', 'synapseusername')) \
        .option("password", TokenLibrary.getSecret('chepra', 'synapsepassword')) \
        .option("driver", "com.microsoft.sqlserver.jdbc.SQLServerDriver") \
        .load()

    jdbcDF.show()

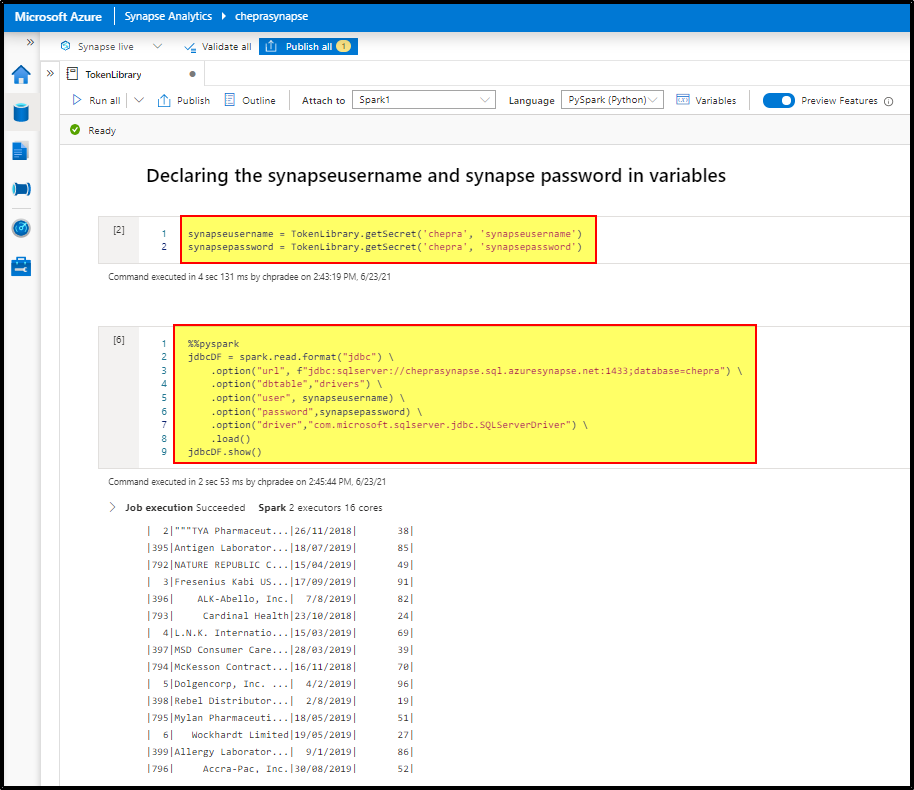
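If you want to keep the connection settings in one place, the options from Method 1 can be collected into a dictionary first. The following is only a minimal sketch: `build_jdbc_options` and `fake_get_secret` are hypothetical names that are not part of Synapse, and the stand-in getter is there only so the snippet runs outside a notebook. In the notebook you would pass `TokenLibrary.getSecret` as the getter instead.

```python
def build_jdbc_options(get_secret, vault, server, database, table,
                       user_secret="synapseusername",
                       password_secret="synapsepassword"):
    """Collect the JDBC options used above into one dict.

    get_secret is any callable taking (vault_name, secret_name); in the
    notebook this is assumed to be TokenLibrary.getSecret.
    """
    return {
        "url": f"jdbc:sqlserver://{server}:1433;database={database}",
        "dbtable": table,
        "user": get_secret(vault, user_secret),
        "password": get_secret(vault, password_secret),
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }


# Hypothetical stand-in for TokenLibrary.getSecret, for local testing only.
def fake_get_secret(vault, name):
    return f"<{vault}/{name}>"


options = build_jdbc_options(
    fake_get_secret,
    vault="chepra",
    server="cheprasynapse.sql.azuresynapse.net",
    database="chepra",
    table="drivers",
)
print(options["url"])
```

In the notebook, the resulting dictionary could then be passed in one call, for example `spark.read.format("jdbc").options(**options).load()`.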

Method 2: Store the Synapse username and password in variables.

Declare the variables:

    synapseusername = TokenLibrary.getSecret('chepra', 'synapseusername')
    synapsepassword = TokenLibrary.getSecret('chepra', 'synapsepassword')

Use the variables in the connection options:

    %%pyspark
    jdbcDF = spark.read.format("jdbc") \
        .option("url", "jdbc:sqlserver://cheprasynapse.sql.azuresynapse.net:1433;database=chepra") \
        .option("dbtable", "drivers") \
        .option("user", synapseusername) \
        .option("password", synapsepassword) \
        .option("driver", "com.microsoft.sqlserver.jdbc.SQLServerDriver") \
        .load()

    jdbcDF.show()
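One practical advantage of Method 2 is that the secrets are fetched once and can be reused for more than one table. Here is a rough sketch of that idea. The helper `options_per_table`, the placeholder credential values, and the second table name `trips` are all assumptions made up for illustration; in the notebook the two variables would come from TokenLibrary.getSecret as shown above.

```python
def options_per_table(url, user, password, tables):
    """Return one JDBC options dict per table, sharing the same credentials."""
    shared = {
        "url": url,
        "user": user,
        "password": password,
        "driver": "com.microsoft.sqlserver.jdbc.SQLServerDriver",
    }
    return {table: {**shared, "dbtable": table} for table in tables}


# Placeholder values so the sketch runs outside a notebook.
synapseusername = "example-user"
synapsepassword = "example-password"
url = "jdbc:sqlserver://cheprasynapse.sql.azuresynapse.net:1433;database=chepra"

# "trips" is a hypothetical second table, used only for illustration.
per_table = options_per_table(url, synapseusername, synapsepassword,
                              ["drivers", "trips"])

for table, opts in per_table.items():
    # In the notebook: spark.read.format("jdbc").options(**opts).load()
    print(table, opts["dbtable"])
```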

Hope this helps. Do let us know if you have any further queries.

---------------------------------------------------------------------------

Please "Accept the answer" if the information helped you. This will help us and others in the community as well.