There are several ways to get data into a pipeline so that it is available as activity output.

The Lookup activity is the easiest option, but it has size limits: it returns at most 5,000 rows, and its output cannot exceed 4 MB.

The Web activity is another option. Most Azure services expose a REST API, so by pointing a Web activity at the REST endpoint of the service in question, you can fetch the data. The Web activity is harder to use than Lookup because authentication is less built-in.

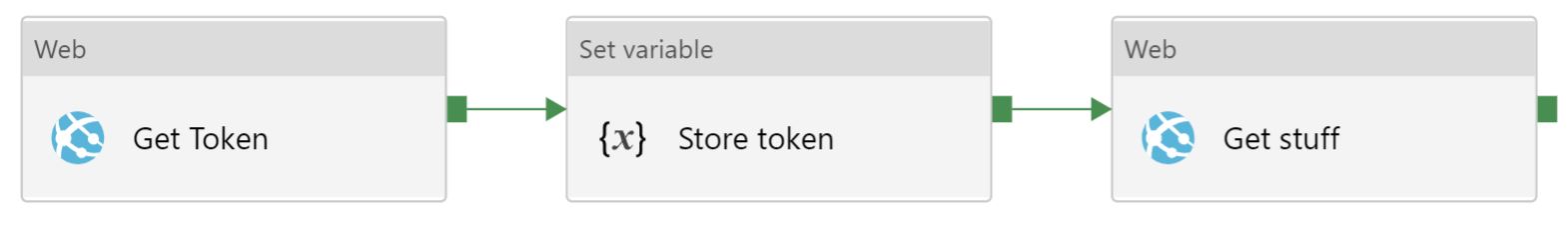

For ADLS Gen2 or Blob storage, the process is to get a bearer token first, then pass it in the `Authorization` header of subsequent calls.

The first step (getting the token) is identical to Step 5 of using OAuth with the REST connector.
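As a minimal sketch of that first token request (not necessarily the exact request from the referenced Step 5), the Python below assumes a service principal using the OAuth 2.0 client credentials grant against the Microsoft identity platform v2.0 token endpoint, with the `https://storage.azure.com/.default` scope. The `build_token_request` helper and the tenant ID, client ID, and secret values are hypothetical placeholders. It only builds the request, to show what the first Web activity (method POST, form-encoded body) has to send.

```python
from urllib.parse import urlencode
from urllib.request import Request

def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) a client-credentials token request for Azure Storage."""
    # Microsoft identity platform v2.0 token endpoint for the given tenant.
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # ".default" requests a token whose audience is Azure Storage.
        "scope": "https://storage.azure.com/.default",
    }).encode("utf-8")
    return Request(
        url,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )

# Hypothetical placeholder values; replace with your own app registration details.
req = build_token_request("my-tenant-id", "my-client-id", "my-client-secret")
print(req.get_method(), req.full_url)
```

The token endpoint replies with JSON containing an `access_token` field; presumably that is the value stored in the pipeline's `token` variable.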

The remaining steps are similar, except that they use a Web activity instead of a Copy activity.

To read the data, the request should look like this, where `{dnsSuffix}` is typically `dfs.core.windows.net` for ADLS Gen2:

`GET https://{accountName}.{dnsSuffix}/{filesystem}/{path}`
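A small Python sketch of how that URL is assembled, assuming the public-cloud ADLS Gen2 suffix `dfs.core.windows.net` (other clouds use different suffixes). The `build_read_url` helper and the sample account, filesystem, and path are hypothetical. In the pipeline itself you would typically build the same string with an `@concat(...)` expression in the Web activity's URL field.

```python
from urllib.parse import quote

def build_read_url(account_name: str, filesystem: str, path: str,
                   dns_suffix: str = "dfs.core.windows.net") -> str:
    """Return the GET URL for reading a file: https://{account}.{suffix}/{filesystem}/{path}."""
    # Percent-encode the path but keep '/' so nested directories stay separate segments.
    encoded_path = quote(path.lstrip("/"), safe="/")
    return f"https://{account_name}.{dns_suffix}/{quote(filesystem)}/{encoded_path}"

# Hypothetical account, filesystem, and file path.
print(build_read_url("mystorageacct", "raw", "sales/2023/jan data.csv"))
```

Percent-encoding the path means file names containing spaces or other reserved characters still produce a valid URL.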

You will also need to add an `Authorization` header whose value uses the token: `@concat('Bearer ', variables('token'))`.
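To show what the Web activity ends up sending, here is a minimal Python sketch that assembles the same GET request. The `Authorization` value matches what `@concat('Bearer ', variables('token'))` produces. It also adds an `x-ms-version` header, because Azure Storage requires requests authorized with OAuth tokens to specify service version 2017-11-09 or later, so the Web activity may need that header too. The specific version string, the `build_read_request` helper, and the sample URL and token are assumptions for illustration.

```python
from urllib.request import Request

# Assumption: an example storage service version; any version from 2017-11-09 onward
# accepts OAuth bearer tokens, and this particular value is only illustrative.
STORAGE_API_VERSION = "2019-12-12"

def build_read_request(url: str, token: str) -> Request:
    """Build (but do not send) the authenticated GET request for a file."""
    headers = {
        # Equivalent to the ADF expression @concat('Bearer ', variables('token')).
        "Authorization": "Bearer " + token,
        # Service version header required for OAuth-authorized storage requests.
        "x-ms-version": STORAGE_API_VERSION,
    }
    return Request(url, headers=headers, method="GET")

# Hypothetical URL and token placeholders.
req = build_read_request("https://mystorageacct.dfs.core.windows.net/raw/sales.csv", "<token>")
print(req.get_method(), req.get_header("Authorization"))
```

Passing `req` to `urllib.request.urlopen` would perform the actual call and return the file contents in the response body.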