Hi @June Zhu,

Welcome to the Microsoft Q&A forum, and thanks for reaching out.

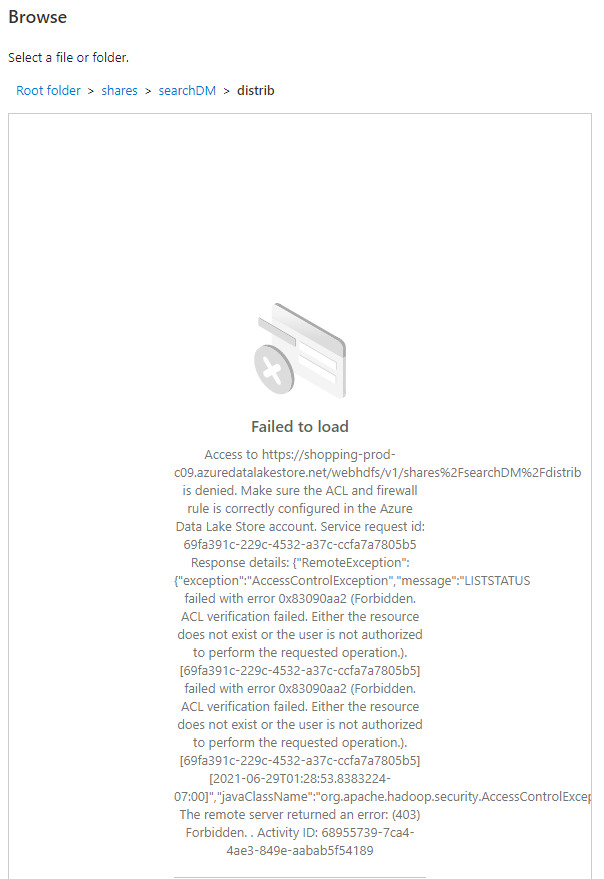

It seems to be an ACL permission issue at the child folder level (distrib).

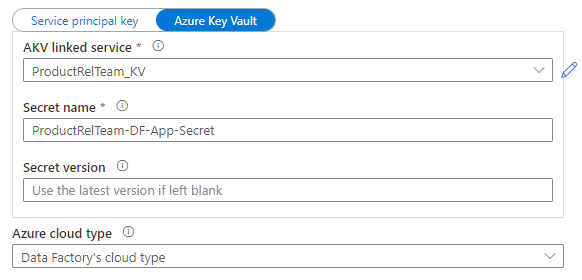

If you are using service principal authentication, please grant the service principal the proper permissions. For examples of how permissions work in Data Lake Storage Gen2, see "Access control lists on files and directories".

- As source: In Storage Explorer, grant at least Execute permission for ALL upstream folders and the file system, along with Read permission for the files to copy. Alternatively, in Access control (IAM), grant at least the Storage Blob Data Reader role.
- As sink: In Storage Explorer, grant at least Execute permission for ALL upstream folders and the file system, along with Write permission for the sink folder. Alternatively, in Access control (IAM), grant at least the Storage Blob Data Contributor role.

Note: If you author in the Data Factory UI and the service principal has not been assigned the "Storage Blob Data Reader/Contributor" role in IAM, then when testing the connection or browsing/navigating folders, choose "Test connection to file path" or "Browse from specified path", and specify a path that has Read + Execute permission to continue.
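To make the source and sink rules above concrete, here is a minimal Python sketch that lists which ACL permission is needed at each level of a path. It only restates the rules from this answer and does not call any Azure API. The function name `required_acl_permissions` and the path `raw/distrib/data.csv` are hypothetical, chosen for illustration only.

```python
from pathlib import PurePosixPath


def required_acl_permissions(path: str, role: str) -> list[tuple[str, str]]:
    """List (location, permission) pairs needed to reach `path`.

    role="source": `path` is a file to copy, so it needs Read.
    role="sink":   `path` is the sink folder, so it needs Write.
    In both cases the file system root and every upstream folder need Execute.
    """
    if role not in ("source", "sink"):
        raise ValueError("role must be 'source' or 'sink'")

    parts = PurePosixPath(path.strip("/")).parts
    if not parts:
        raise ValueError("path must contain at least one segment")

    # "/" stands for the file system (container) root.
    needed = [("/", "Execute")]

    # Every folder above the target needs Execute so it can be traversed.
    current = ""
    for folder in parts[:-1]:
        current += "/" + folder
        needed.append((current, "Execute"))

    # The target itself needs Read (source file) or Write (sink folder).
    target = current + "/" + parts[-1]
    needed.append((target, "Read" if role == "source" else "Write"))
    return needed


# Hypothetical path, for illustration only.
for location, permission in required_acl_permissions("raw/distrib/data.csv", "source"):
    print(f"{location}: {permission}")
```

If any one of the listed levels is missing its permission (for example, Execute on a child folder), the copy fails even when the file itself is readable.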

If you are using managed identity authentication, please grant the managed identity the proper permissions. For examples of how permissions work in Data Lake Storage Gen2, see "Access control lists on files and directories".

- As source: In Storage Explorer, grant at least Execute permission for ALL upstream folders and the file system, along with Read permission for the files to copy. Alternatively, in Access control (IAM), grant at least the Storage Blob Data Reader role.
- As sink: In Storage Explorer, grant at least Execute permission for ALL upstream folders and the file system, along with Write permission for the sink folder. Alternatively, in Access control (IAM), grant at least the Storage Blob Data Contributor role.

Note: If you author in the Data Factory UI and the managed identity has not been assigned the "Storage Blob Data Reader/Contributor" role in IAM, then when testing the connection or browsing/navigating folders, choose "Test connection to file path" or "Browse from specified path", and specify a path that has Read + Execute permission to continue.
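If you want to find out which level is blocking access, a rough Python sketch like the one below may help. It assumes you have already copied the access ACL string for each level (for example from Storage Explorer) in the usual `type:id:permissions` comma-separated form. It is deliberately simplified: it only looks at the named-user entry for the identity and ignores the mask, group membership, owner/other entries, and any RBAC role. The function names, the object ID, and the paths are all hypothetical.

```python
from typing import Optional


def named_user_permissions(acl: str, object_id: str) -> str:
    """Return the permission triplet (e.g. 'r-x') of the named-user access
    entry for `object_id`, or '---' if there is no such entry."""
    for entry in acl.split(","):
        fields = entry.strip().split(":")
        # Access entries have three fields (type, id, permissions).
        # Default entries carry an extra leading 'default' field and are skipped.
        if len(fields) == 3 and fields[0] == "user" and fields[1] == object_id:
            return fields[2]
    return "---"


def first_blocked_level(
    acls_by_path: dict[str, str], object_id: str, target_permission: str
) -> Optional[str]:
    """Walk the levels from the file system root to the target (insertion order).

    Every level except the last needs 'x' (Execute); the last level needs
    `target_permission` ('r' for a source file, 'w' for a sink folder).
    Returns the first level that lacks its permission, or None if all pass.
    """
    paths = list(acls_by_path)
    for index, path in enumerate(paths):
        is_target = index == len(paths) - 1
        needed = target_permission if is_target else "x"
        if needed not in named_user_permissions(acls_by_path[path], object_id):
            return path
    return None


# Hypothetical object ID and ACL strings, for illustration only.
OBJECT_ID = "00000000-0000-0000-0000-000000000001"
acls = {
    "/": f"user::rwx,user:{OBJECT_ID}:--x,group::r-x,other::---",
    "/raw": f"user::rwx,user:{OBJECT_ID}:--x,group::r-x,other::---",
    "/raw/distrib": "user::rwx,group::r-x,other::---",  # no entry for the identity
    "/raw/distrib/data.csv": f"user::rw-,user:{OBJECT_ID}:r--,group::r--,other::---",
}
print(first_blocked_level(acls, OBJECT_ID, "r"))  # prints /raw/distrib
```

In this made-up example the file itself grants Read, but the child folder has no Execute entry for the identity, which is the kind of situation described at the top of this answer.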

Hope this info helps. Do let us know how it goes.

----------

Please don’t forget to Accept Answer and Up-Vote wherever the information provided helps you, as this can be beneficial to other community members.