Here's the Terraform script showing what I've configured so far.

```hcl
# Configure the Azure provider
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 2.65"
    }
  }

  required_version = ">= 0.14.9"
}

provider "azurerm" {
  features {}
}

locals {
  tags = {
    Owner = "Me"
  }
}

//--------------------------------------
// Resource Group
//
resource "azurerm_resource_group" "rg" {
  name     = "MyRG"
  location = "australiaeast"
  tags     = local.tags

  lifecycle {
    ignore_changes = [
      tags
    ]
  }
}
```

//--------------------------------------

// IoT Hub

//

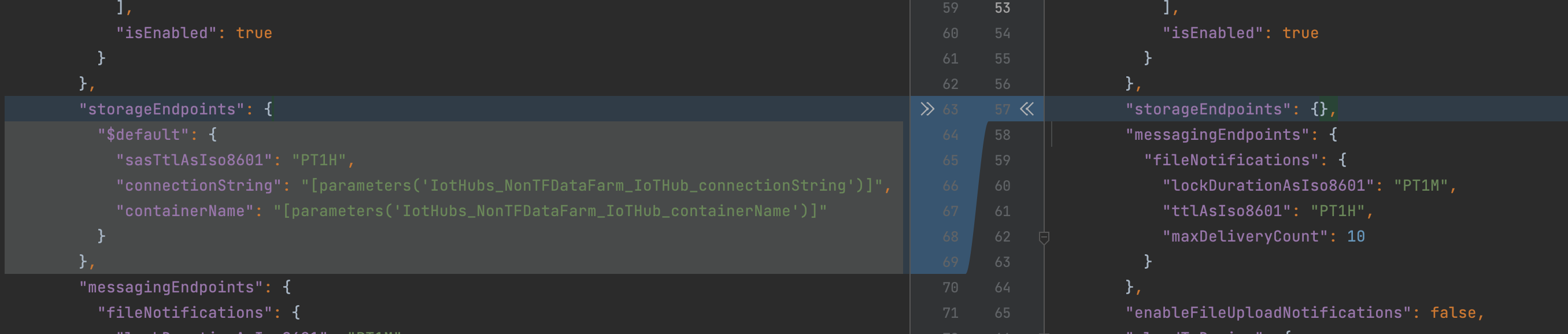

resource "azurerm_iothub" "iothub" {

name = "My-IoTHub"

resource_group_name = azurerm_resource_group.rg.name

location = azurerm_resource_group.rg.location

sku {

name = "B1"

capacity = "1"

}

tags = local.tags

lifecycle {

ignore_changes = [

tags

]

}

}
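The input further down reads from the hub's `$Default` consumer group. If anything else (another tool, a test consumer) also reads from `$Default`, giving the job its own consumer group keeps the readers from competing for the same stream. A minimal sketch, assuming your IoT Hub tier allows additional consumer groups; the resource label `asa` and the name `streamanalytics` are hypothetical placeholders:

```hcl
// Hypothetical dedicated consumer group for the Stream Analytics job.
// The label "asa" and the name "streamanalytics" are placeholders.
resource "azurerm_iothub_consumer_group" "asa" {
  name                   = "streamanalytics"
  iothub_name            = azurerm_iothub.iothub.name
  eventhub_endpoint_name = "events" // the hub's built-in device-to-cloud endpoint
  resource_group_name    = azurerm_resource_group.rg.name
}
```

To use it, the input's `eventhub_consumer_group_name` would change from `"$Default"` to `azurerm_iothub_consumer_group.asa.name`.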

```hcl
//--------------------------------------
// Database Server
//
resource "azurerm_mssql_server" "sqlserver" {
  name                         = "my-sql-server"
  resource_group_name          = azurerm_resource_group.rg.name
  location                     = azurerm_resource_group.rg.location
  version                      = "12.0"
  administrator_login          = var.administrator_login
  administrator_login_password = var.administrator_password
  minimum_tls_version          = "1.2"
  tags                         = local.tags

  lifecycle {
    ignore_changes = [
      tags
    ]
  }
}

// A start and end address of 0.0.0.0 allows connections from Azure services
// (including ones outside this subscription), which lets the Stream Analytics
// job reach the server.
resource "azurerm_mssql_firewall_rule" "example" {
  name             = "FirewallRule1"
  server_id        = azurerm_mssql_server.sqlserver.id
  start_ip_address = "0.0.0.0"
  end_ip_address   = "0.0.0.0"
}

//--------------------------------------
// Database
//
resource "azurerm_mssql_database" "database" {
  name           = "my-db"
  server_id      = azurerm_mssql_server.sqlserver.id
  collation      = "SQL_Latin1_General_CP1_CI_AS"
  max_size_gb    = 1
  read_scale     = false
  sku_name       = "Basic"
  zone_redundant = false
  tags           = local.tags

  lifecycle {
    ignore_changes = [
      tags
    ]
  }
}
```
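The server block uses `var.administrator_login` and `var.administrator_password`, but those variables aren't declared in the script shown here. A sketch of the declarations, assuming they go in a separate `variables.tf` (the file name is only a convention). `sensitive = true` needs Terraform 0.14 or later, which the `required_version = ">= 0.14.9"` constraint already ensures:

```hcl
// Credentials for the Azure SQL server; no defaults, so Terraform
// prompts for them or reads them from the environment.
variable "administrator_login" {
  description = "Administrator login for the Azure SQL server"
  type        = string
}

variable "administrator_password" {
  description = "Administrator password for the Azure SQL server"
  type        = string
  sensitive   = true // redacted from plan and apply output
}
```

The values can then be supplied without committing them to source control, for example through the `TF_VAR_administrator_login` and `TF_VAR_administrator_password` environment variables.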

//--------------------------------------

// Streaming job - Output (to database)

//

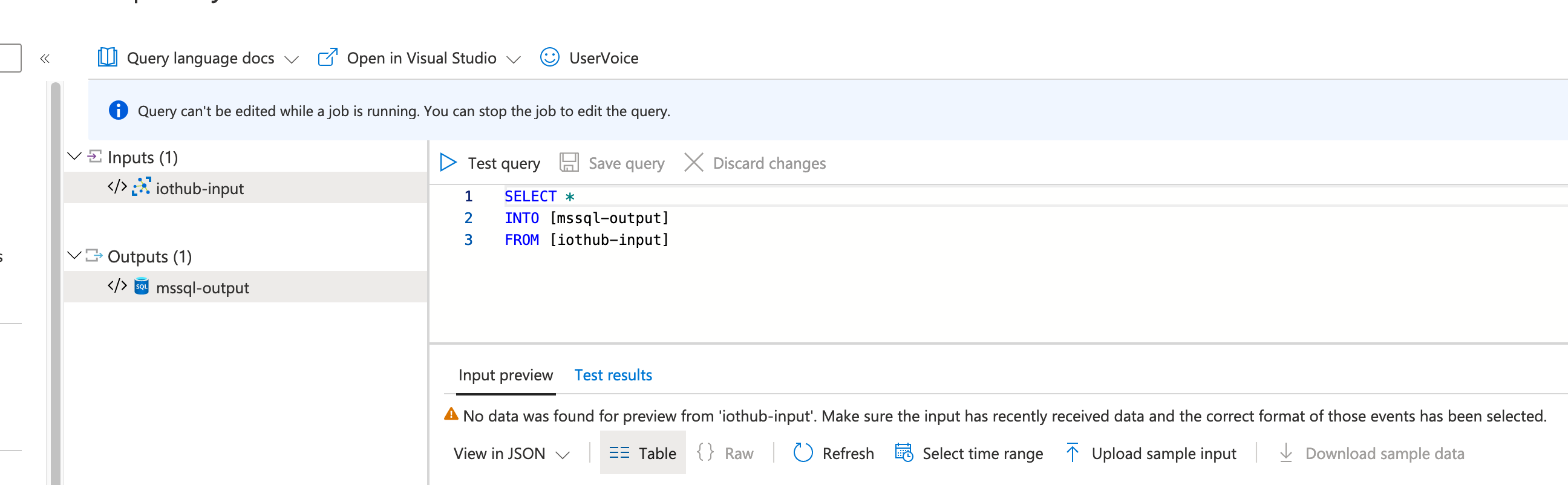

resource "azurerm_stream_analytics_output_mssql" "output" {

name = "my-job-output"

stream_analytics_job_name = azurerm_stream_analytics_job.iot-to-db.name

resource_group_name = azurerm_stream_analytics_job.iot-to-db.resource_group_name

server = azurerm_mssql_server.sqlserver.fully_qualified_domain_name

user = azurerm_mssql_server.sqlserver.administrator_login

password = azurerm_mssql_server.sqlserver.administrator_login_password

database = azurerm_mssql_database.database.name

table = "MyTable"

}

//--------------------------------------

// Streaming job - Input (from MQTT)

//

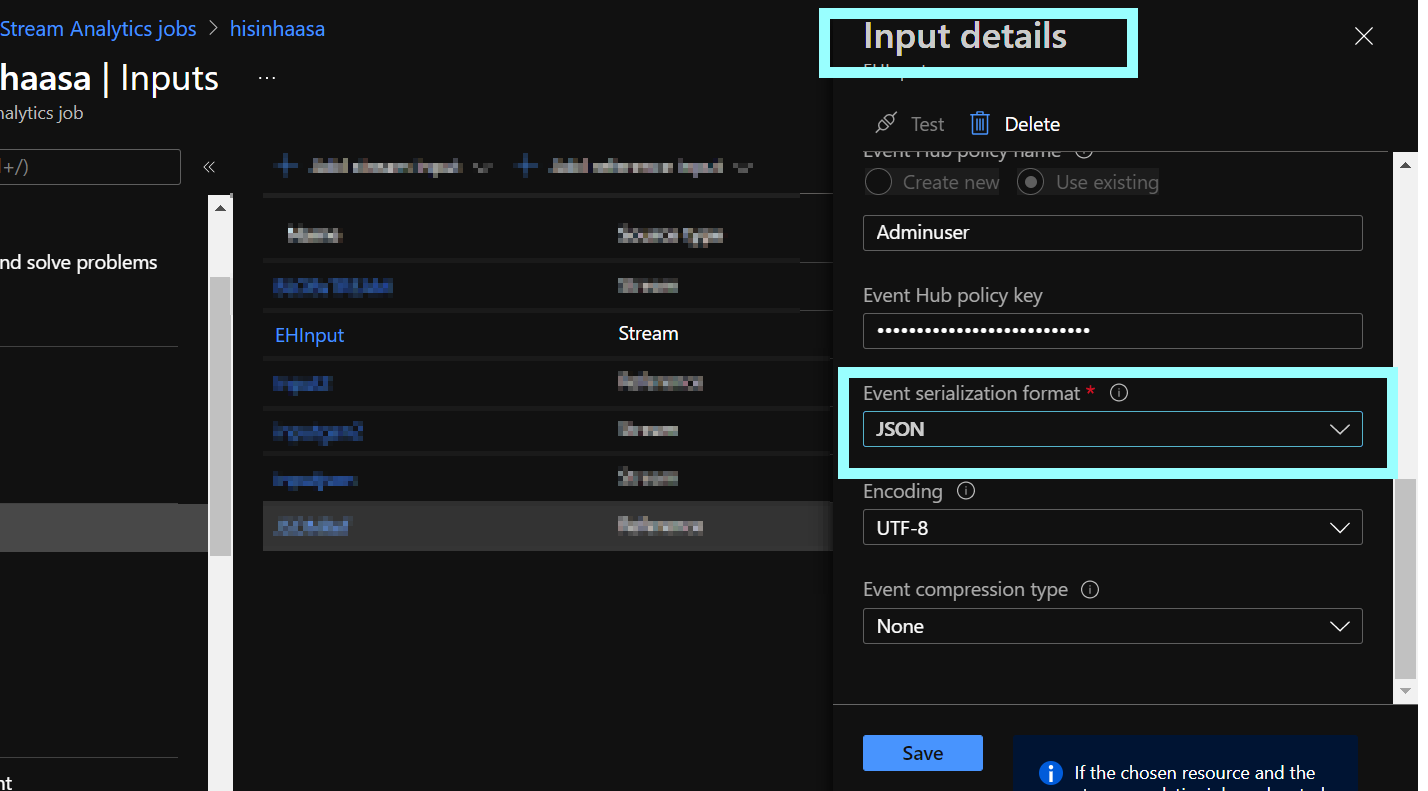

resource "azurerm_stream_analytics_stream_input_iothub" "input" {

name = "my-input"

stream_analytics_job_name = azurerm_stream_analytics_job.iot-to-db.name

resource_group_name = azurerm_stream_analytics_job.iot-to-db.resource_group_name

endpoint = "messages/events"

eventhub_consumer_group_name = "$Default"

iothub_namespace = azurerm_iothub.iothub.name

shared_access_policy_key = azurerm_iothub.iothub.shared_access_policy[0].primary_key

shared_access_policy_name = "iothubowner"

serialization {

type = "Json"

encoding = "UTF8"

}

}

```hcl
//--------------------------------------
// Streaming job
//
// The resource label must be "iot-to-db" because the input and output
// resources reference azurerm_stream_analytics_job.iot-to-db.
resource "azurerm_stream_analytics_job" "iot-to-db" {
  name                                     = "my-job"
  resource_group_name                      = azurerm_resource_group.rg.name
  location                                 = azurerm_resource_group.rg.location
  compatibility_level                      = "1.2"
  data_locale                              = "en-AU"
  events_late_arrival_max_delay_in_seconds = 60
  events_out_of_order_max_delay_in_seconds = 50
  events_out_of_order_policy               = "Adjust"
  output_error_policy                      = "Stop"
  streaming_units                          = 1
  tags                                     = local.tags

  lifecycle {
    ignore_changes = [
      tags
    ]
  }

  // The aliases in the query must match the `name` of the input
  // ("my-input") and output ("my-job-output") resources.
  transformation_query = <<QUERY
    SELECT *
    INTO [my-job-output]
    FROM [my-input]
QUERY
}
```