Hi @Netty,

Thank you for posting your query on the Microsoft Q&A platform.

I have implemented a sample pipeline for your requirement. Please check the detailed explanation below and follow the same steps in your own pipeline.

Step1: Created two pipeline parameters called "client_id" and "dates".

client_id --> Holds the client id for which you want to run the pipeline.

dates --> Array of the date values for which you want to run the pipeline.
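If you need to prepare the "dates" array for a longer range, a small script can generate it for you. This is only a sketch in Python, not part of the pipeline itself; the client id "1234", the date range, and the helper name build_dates are made-up sample values, and it assumes your dates should be strings in yyyy-MM-dd format, matching the folder format used later.

```python
import json
from datetime import date, timedelta


def build_dates(start: date, end: date) -> list[str]:
    """Return every day from start to end (inclusive) as yyyy-MM-dd strings."""
    days = (end - start).days
    return [(start + timedelta(days=i)).isoformat() for i in range(days + 1)]


# Hypothetical sample values; replace with your own client id and date range.
pipeline_parameters = {
    "client_id": "1234",
    "dates": build_dates(date(2022, 1, 1), date(2022, 1, 3)),
}

# Print the values as JSON so they can be copied into the pipeline parameters.
print(json.dumps(pipeline_parameters, indent=2))
```

The printed "dates" value is a JSON array, which is the shape an Array-type pipeline parameter expects.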

Step2: Added a ForEach activity. Pass your dates array to the ForEach activity in its Items setting, for example @pipeline().parameters.dates

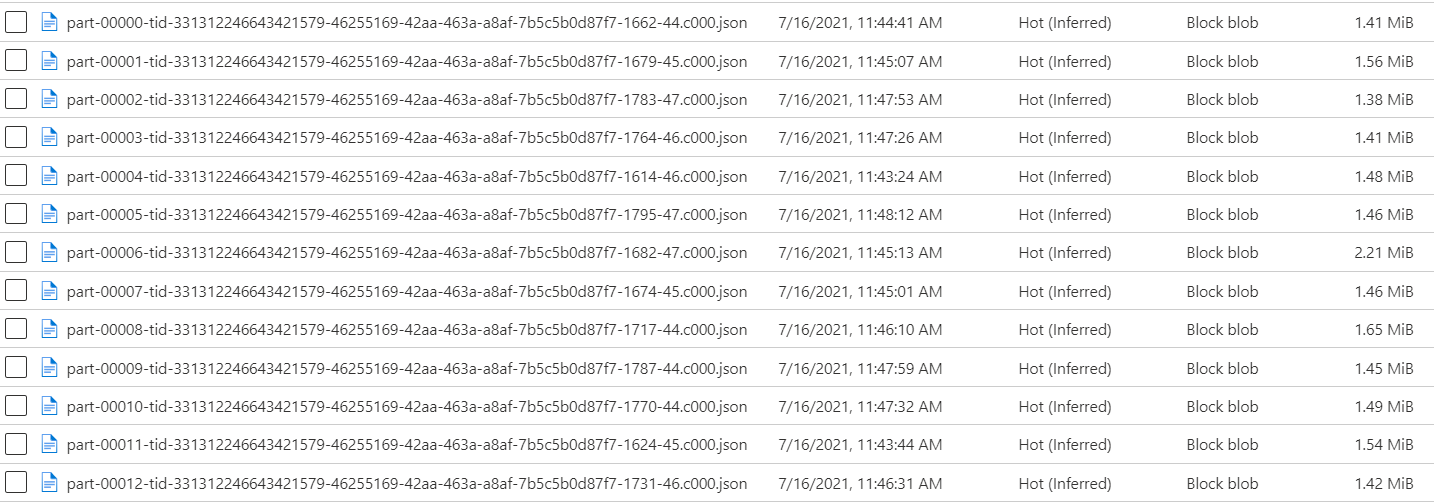

Step3: Inside the ForEach activity, use a Copy activity to copy the source data to your target storage. On the target, we want the folder path in the format below, so I used a parameterized dataset as the sink.

my-data-lake/my-container/client_id=<client_id>/date=yyyy-MM-dd/*.json

Expression used for dynamic path: client_id=@{pipeline().parameters.client_id}/date=@{item()}
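To preview which sink folders the expression above should produce, here is a rough Python sketch that mimics the string interpolation done on each ForEach iteration. It is not Data Factory code; the function name sink_folder and the sample values are assumptions used only for illustration.

```python
def sink_folder(client_id: str, run_date: str) -> str:
    """Mimic client_id=@{pipeline().parameters.client_id}/date=@{item()}."""
    return f"client_id={client_id}/date={run_date}"


# Hypothetical sample inputs standing in for the pipeline parameters.
client_id = "1234"
dates = ["2022-01-01", "2022-01-02"]

# One folder per ForEach iteration; item() is the current element of dates.
folders = [sink_folder(client_id, d) for d in dates]

for folder in folders:
    print(f"my-data-lake/my-container/{folder}/")
```

Each printed line corresponds to one run of the Copy activity, so every date in the array should end up in its own date=... folder under the client's folder.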

Hope this helps. Thank you.
