Hello Experts,

I am trying to read an Excel file (247 MB, 549,628 rows in total) in Databricks, convert it to a Parquet file, and store it in ADLS Gen1 (already mounted), but I get the error below even while just reading the file:

"The spark driver has stopped unexpectedly and is restarting. Your notebook will be automatically reattached."

Below is the code:

```scala
import org.apache.spark._

val Data = spark.read.format("com.crealytics.spark.excel")
  .option("header", "true")
  .option("inferSchema", "true")
  .load("/mnt/adls/folder/file.xlsx")
```
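One option that might be worth trying, assuming the installed crealytics spark-excel version supports it, is `maxRowsInMemory`. The library's README describes it as switching to a streaming reader that can help with big `.xlsx` files (it fails with `.xls`). The sketch below only builds the option map as plain Scala so it can be checked without a cluster. `ExcelReadOptions` is a hypothetical helper name, and the value 1000 is an assumed starting point, not a tuned number.

```scala
// Hypothetical helper: builds the reader options for com.crealytics.spark.excel
// as a plain Map[String, String], so it can be checked without a Spark session.
object ExcelReadOptions {
  // "header" and "inferSchema" are the options from the original code.
  // "maxRowsInMemory" (assumed supported by the installed spark-excel version)
  // enables the library's streaming reader for .xlsx files; it is not
  // supported for .xls files. 1000 is an assumed, untuned default.
  def streaming(rowsInMemory: Int = 1000): Map[String, String] = {
    require(rowsInMemory > 0, "rowsInMemory must be positive")
    Map(
      "header" -> "true",
      "inferSchema" -> "true",
      "maxRowsInMemory" -> rowsInMemory.toString
    )
  }
}

// In a Databricks Scala cell the map could be used like this
// (the output folder is a hypothetical path):
//
//   val data = spark.read.format("com.crealytics.spark.excel")
//     .options(ExcelReadOptions.streaming())
//     .load("/mnt/adls/folder/file.xlsx")
//   data.write.mode("overwrite").parquet("/mnt/adls/folder/file_parquet")
```

Keeping the options in one map means the same settings can be reused for each file if the data ever has to be split, and `DataFrameReader.options` accepts a `Map[String, String]` directly.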

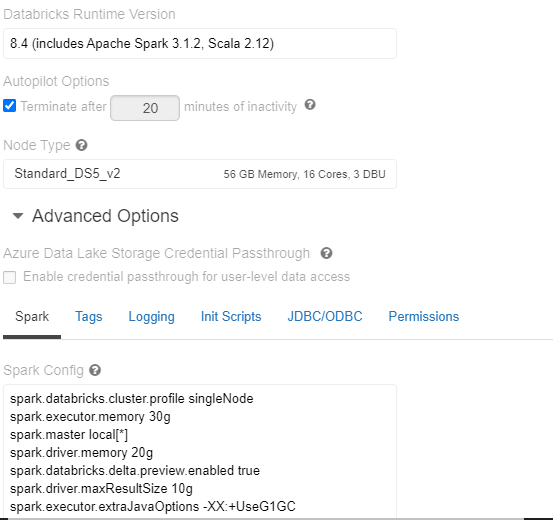

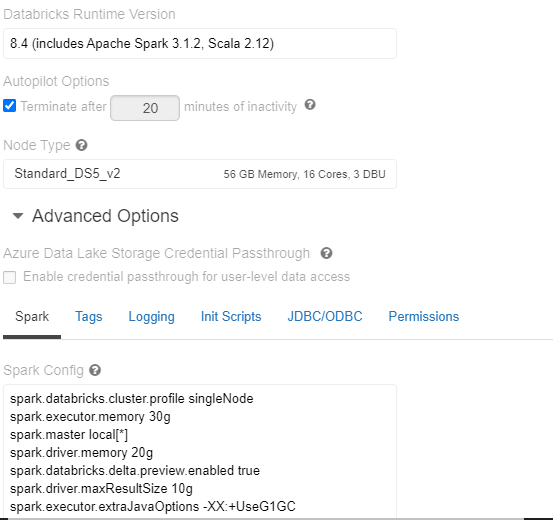

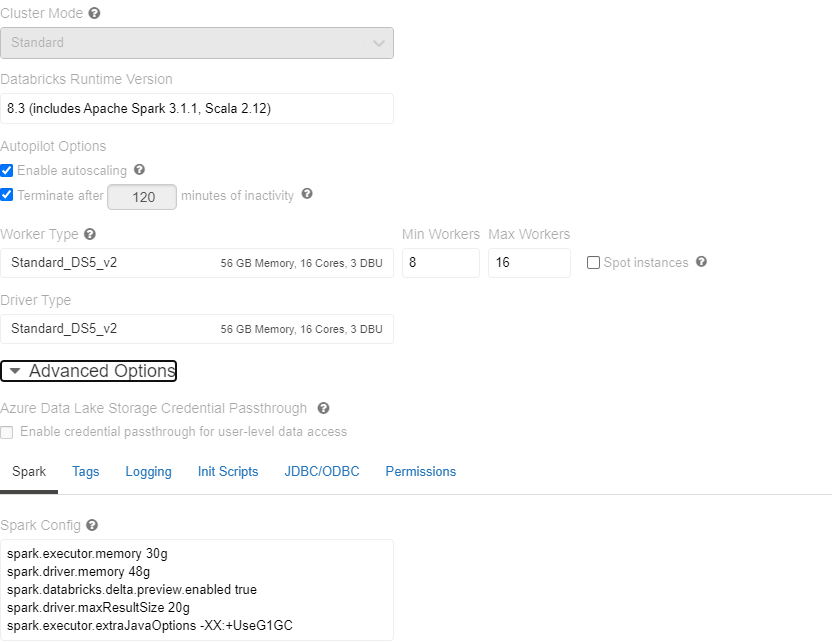

Below is the configuration of the cluster:

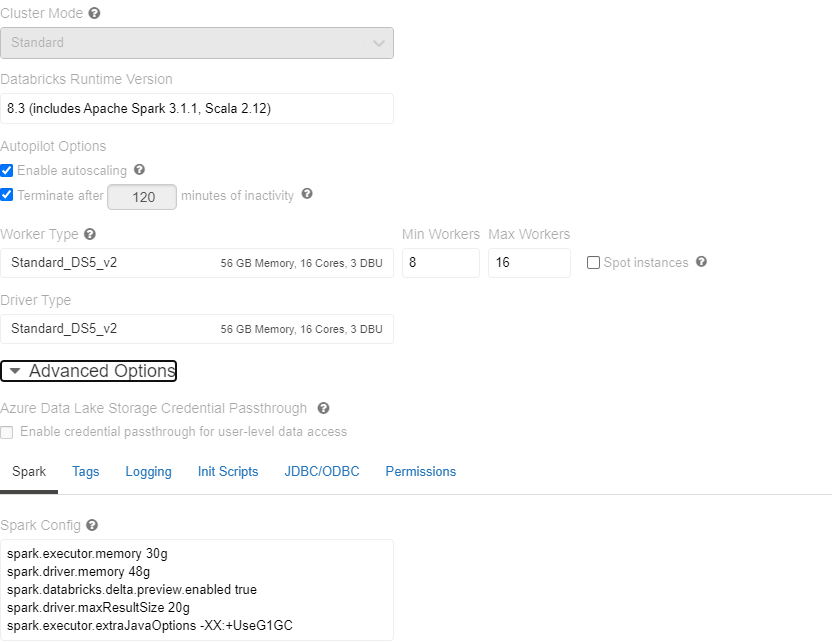

I have also tried a higher configuration:

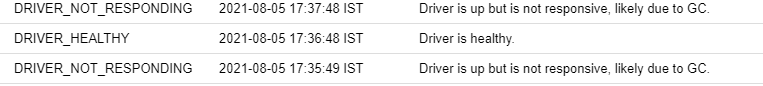

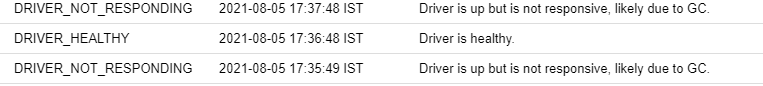

But I still get this issue. Also, when I check the event log, I always see the details below:

If I split the same file into smaller files of about 100k rows each, I am able to process them.

Please let me know if I am not choosing an optimal configuration. It seems odd, though, since Databricks should speed up processing and should be able to handle a 247 MB file even with a basic configuration.

I appreciate your time and effort. Thanks!