Hi sakuraime,

the time required will be base on the size of the column ?

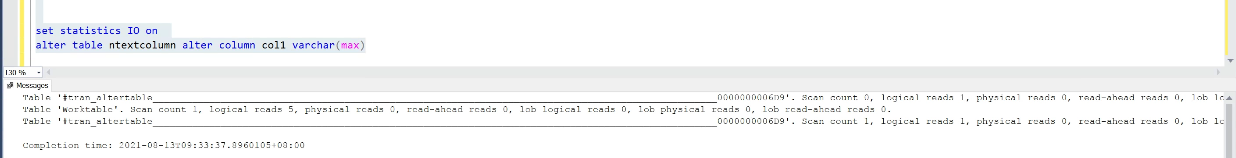

I think the time required may be related to the amount of data, and the table will be locked for a period of time when the metadata is modified.

if the original row is less than 8000b , after execute the alter command , will these rows put back to in-row-data allocation ?

SQL Server stores data in pages of 8KB and with a max. of 8060 bytes per row. If you are using varchar, nvarchar, varbinary, sql_variant datatypes then the column length will be limited to 8000 bytes each and the max. length of the row should fall below 8060 bytes. This limit is NOT checked against the varchar(max) data types.

If the row sizes exceeds 8060 bytes then there are certain performance implications as SQL Server enforces 8KB page size. SQL Server moves the max. width column in these scenarios to a separate page in the ROW_OVERFLOW_DATA allocation unit, and leaves a 24 byte pointer in the current page. Please refer to this thread which might help.

any other consideration before changing these columns types

You need to be prepared to handle potentially large transactions, so make sure you have enough log space to avoid running out of log space due to large transactions.

Best Regards,

Amelia