Currently, mssparkutils does not expose a file's modification time when you call the mssparkutils.fs.ls API.

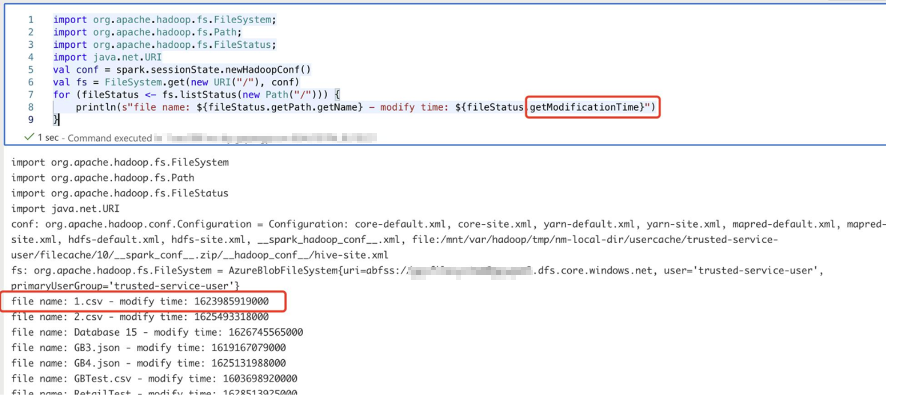

As a workaround, you can call the Hadoop FileSystem APIs directly to get the modification time.

    import org.apache.hadoop.fs.{FileStatus, FileSystem, Path}
    import java.net.URI

    // Build a Hadoop configuration from the current Spark session
    val conf = spark.sessionState.newHadoopConf()
    val fs = FileSystem.get(new URI("/"), conf)

    // getModificationTime returns milliseconds since the Unix epoch (UTC)
    for (fileStatus <- fs.listStatus(new Path("/"))) {
      println(s"file name: ${fileStatus.getPath.getName} - modify time: ${fileStatus.getModificationTime}")
    }
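The modification time printed above is a raw epoch value in milliseconds, which is hard to read. If it helps, here is a minimal sketch of converting it to a readable UTC timestamp. Note that `formatModificationTime` is a hypothetical helper name I made up for illustration; it is not part of mssparkutils or Hadoop, and it relies only on `java.time.Instant` from the JDK.

```scala
import java.time.Instant

// Hypothetical helper (not part of mssparkutils or Hadoop): converts the
// epoch-millisecond value returned by FileStatus.getModificationTime
// into an ISO-8601 UTC string.
def formatModificationTime(epochMillis: Long): String =
  Instant.ofEpochMilli(epochMillis).toString

println(formatModificationTime(0L)) // prints 1970-01-01T00:00:00Z
```

Inside the loop above you could then print `formatModificationTime(fileStatus.getModificationTime)` instead of the raw number.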

Additionally, the product team has opened an internal work item to address this in a future release. Please let me know if you have any questions.

Thanks

Saurabh

----------

Please remember to "Accept the answer" if the information provided helped you, so that it can help others in the community.