Each trigger type scales a bit differently, and the most likely cause is that your requests simply weren't a high enough load for the scale controller to start spinning up instances. HTTP scaling is far more aggressive than scaling for other trigger types, so requiring a bit more load to get it started helps prevent it from adding too many instances in response to short traffic spikes. History also plays a role. You'll see in the results that I repeated one of the tests, and while the response time was about the same, the scale controller kicked in and added instances so that future requests would get a quicker response.

To test this hypothesis, I used the host.json file you provided and created a function whose run time I could control externally through a `sleeptime` query parameter.

```csharp
[FunctionName("SlowFunction")]
public static async Task<IActionResult> Run(
    [HttpTrigger(AuthorizationLevel.Function, "get", "post", Route = null)] HttpRequest req,
    ILogger log)
{
    log.LogInformation("C# HTTP trigger function processed a request.");

    // Delay in milliseconds, supplied by the caller as ?sleeptime=...
    var time = int.Parse(req.Query["sleeptime"]);

    // Block for the requested time to simulate a slow request.
    Thread.Sleep(time);

    return new OkResult();
}
```

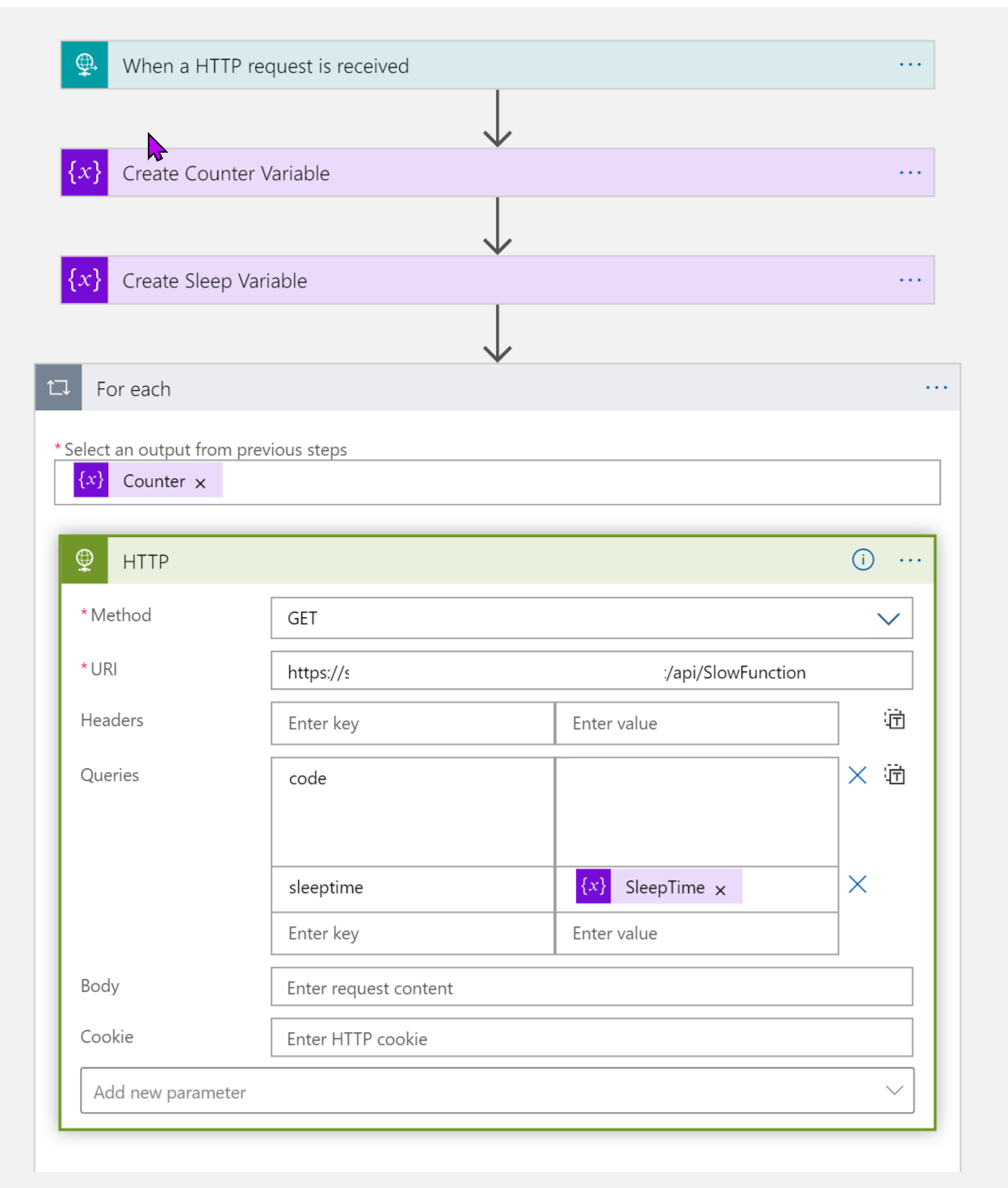

I then created a Logic App that ran a series of HTTP requests in parallel using a foreach loop. For a couple of the runs I adjusted the concurrency so the requests would go out in batches. This is by no means an exact measurement of how the scale controller works; it is only meant to give you an idea of what is going on behind the scenes.
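If you would rather reproduce the load from code than from a Logic App, the same idea (many requests, with a cap on how many run at once) can be sketched as a small console client. This is not what I used for the tests; it is a rough stand-in. The `LoadDriver` class, the `RunBatchedAsync` helper, and the numbers 50 and 10 are all made up for illustration, and the function URL is expected as the first command-line argument.

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Threading;
using System.Threading.Tasks;

public static class LoadDriver
{
    // Hypothetical helper: runs `total` calls to `send`, allowing at most
    // `concurrency` of them to be in flight at the same time.
    public static async Task RunBatchedAsync(int total, int concurrency, Func<int, Task> send)
    {
        using var gate = new SemaphoreSlim(concurrency);
        var tasks = Enumerable.Range(0, total).Select(async i =>
        {
            await gate.WaitAsync();       // wait for a free slot
            try { await send(i); }
            finally { gate.Release(); }   // free the slot for the next request
        }).ToList();                      // ToList starts every task right away
        await Task.WhenAll(tasks);
    }

    public static async Task Main(string[] args)
    {
        // Assumption: args[0] is the full function URL, including the function
        // key and a sleeptime value, e.g. ...&sleeptime=5000
        var url = args[0];
        using var client = new HttpClient();

        // 50 requests, 10 at a time; both values are illustrative only.
        await RunBatchedAsync(50, 10, async _ =>
        {
            using var response = await client.GetAsync(url);
            response.EnsureSuccessStatusCode();
        });
    }
}
```

Setting `concurrency` equal to `total` sends everything at once, while a smaller value gives the batched behavior.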

Since history is a factor, I ran this series twice, deleting and recreating the Functions app in between, and got similar results each time.

The process for each:

- Edit the Logic App variables and concurrency.

- Completely stop and start the Functions app so it goes back to a single instance.

- Send a warm-up ping to the app so that startup time is not a factor in total request time.

- Run the Logic App to send the configured requests.

- Record the total runtime from the Logic App and the number of active servers at the end of the test via App Insights live metrics.
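The warm-up and timing steps above can be sketched in code as well. This is only an illustration of keeping the warm-up ping outside the measured window, not the Logic App I actually ran. `TestRun` and `MeasureAsync` are hypothetical names, and the `Task.Delay` calls in `Main` are placeholders for the real warm-up request and the real batch of requests.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class TestRun
{
    // Hypothetical helper: sends one untimed warm-up call, then times the workload.
    public static async Task<TimeSpan> MeasureAsync(Func<Task> warmUp, Func<Task> workload)
    {
        await warmUp();                    // startup cost is paid here, outside the timer
        var timer = Stopwatch.StartNew();
        await workload();                  // only this part counts toward the total
        timer.Stop();
        return timer.Elapsed;
    }

    public static async Task Main()
    {
        // Placeholders: swap in a real warm-up request and the real request batch.
        var elapsed = await MeasureAsync(
            () => Task.Delay(200),
            () => Task.Delay(50));
        Console.WriteLine($"Total runtime: {elapsed.TotalMilliseconds:F0} ms");
    }
}
```

The instance count still has to be read from App Insights live metrics at the end of each run; this sketch only covers the timing.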

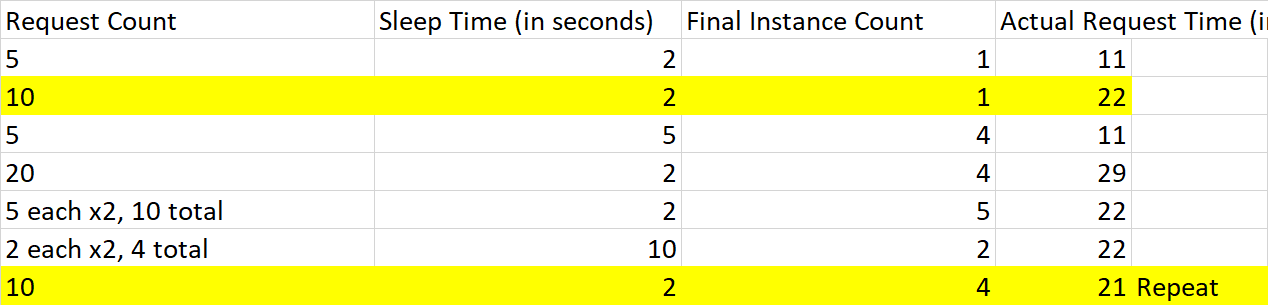

Here are the results. As you can see, the first couple of tests did not create any additional instances, but after that the instance count jumped quickly. Because of warm-up time, the total time it took to run the requests didn't reflect the benefit of the additional VMs, but a steady stream of traffic would see better results over time.