Hello @M_H ,

There is no additional charge on the Azure Databricks side.

If you save the data to an Azure Storage account, you will be billed for the amount of data stored.

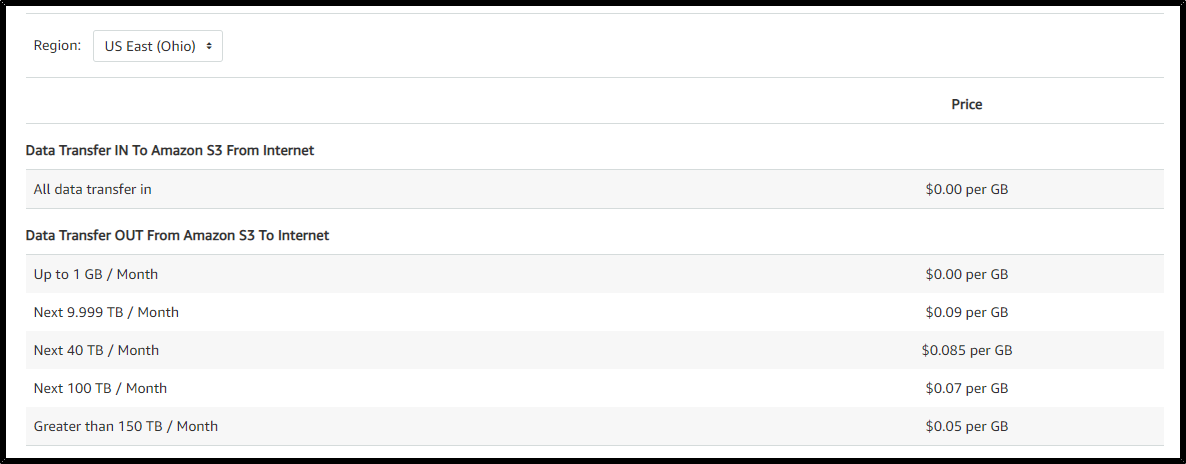

On the AWS side, you pay for data transferred out from Amazon S3 to the internet.

The data transfer cost is listed on the Amazon S3 pricing page.

Hope this helps. Do let us know if you have any further queries.
