(Azure Data Factory) Uncompressing an archive file and uploading to blob - how to handle invalid blob names that trigger the Bad Request error

Hi everyone!

I am working on a simple Azure Data Factory pipeline that extracts a .tar.gz archive stored in blob storage and writes the extracted files back to blob storage. Here is my setup.

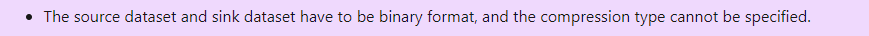

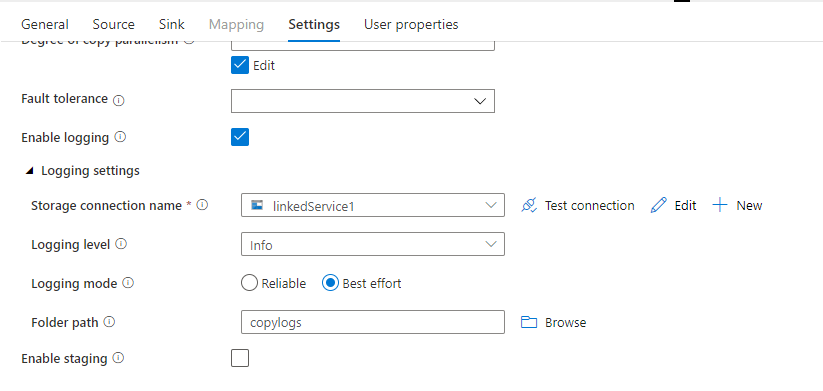

The pipeline only contains a Copy Data activity. The source of the activity is my .tar.gz blob. The sink is a blob container. I set the compression type of the source to TarGZip and set the compression type of my target blob container to None. This setup worked fine until I came across a .tar.gz that has this file inside:

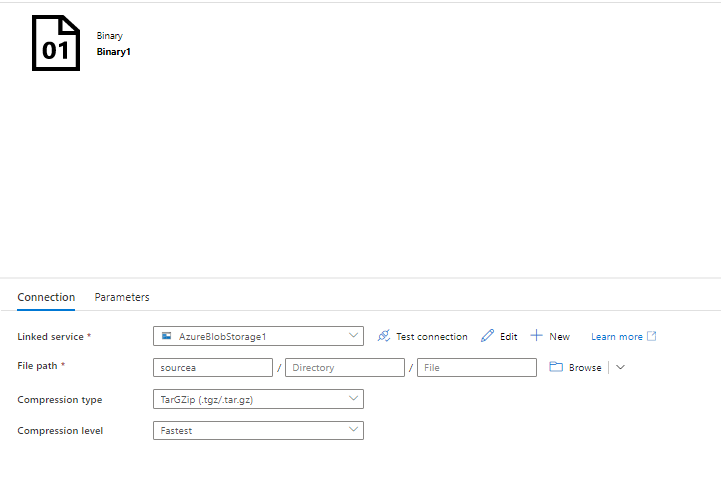

"123fa5b3-fde5-4763-8100-1b0dabf33606. ภรภภร"

I tried creating a file with that name on my computer. Windows accepted it as a valid file name, but uploading it manually through Azure Storage Explorer failed. After I removed the unreadable part at the end, the upload went through. So I strongly suspect this strange name is the root cause.
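To make the suspicion concrete, here is a minimal Python sketch of the kind of name clean-up I have in mind. `sanitize_blob_name` is a hypothetical helper, not part of any Azure SDK, and the rules it applies (replace control and other non-printable code points, strip trailing dots, spaces and slashes) are my assumption about what the service rejects, not a confirmed list.

```python
import unicodedata


def sanitize_blob_name(name: str, replacement: str = "_") -> str:
    """Return a conservative version of `name` (hypothetical helper).

    Assumption: control and other non-printable code points, plus trailing
    dots, spaces and slashes, are what make the upload fail.
    """
    cleaned = []
    for ch in name:
        # Unicode general categories starting with "C" cover control, format,
        # surrogate, private-use and unassigned code points.
        if unicodedata.category(ch).startswith("C"):
            cleaned.append(replacement)
        else:
            cleaned.append(ch)
    # Strip trailing dots, spaces and slashes as a precaution.
    result = "".join(cleaned).rstrip(". /")
    # Never return an empty name.
    return result or replacement
```

For example, `sanitize_blob_name("report. ")` returns `"report"`, while ordinary non-ASCII letters are left untouched.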

Questions:

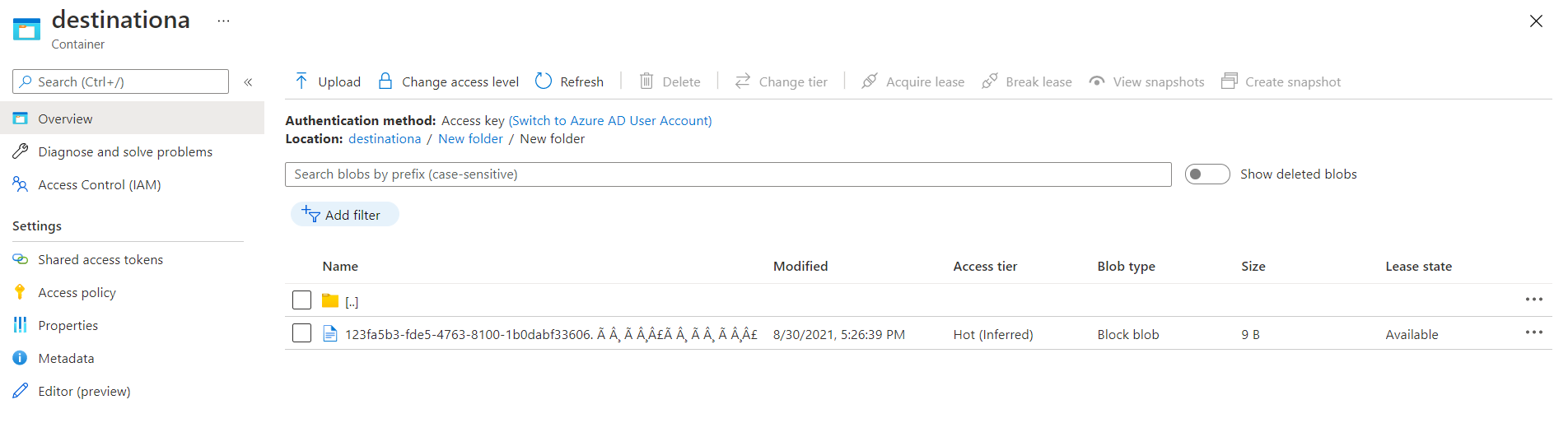

- Is there a way for the Copy Data activity to skip files that fail to upload to the target blob container? As long as it logs which files it skips, I am all for it. From the documentation, it seems the "Enable Fault Tolerance" option would do the job, but it is grayed out in the ADF UI.

- If anyone has a different idea for achieving the same thing without Azure Data Factory, please feel free to share. I chose ADF because my .tar.gz is huge (almost 1 TB), and the other solutions I can think of cannot handle files this big.
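For reference, the behavior I am after (skip the files that fail, log them, keep going) could look roughly like the Python sketch below if done outside ADF. It reads the archive sequentially with the standard library's `tarfile` stream mode, so the archive never has to be extracted to disk as a whole. `extract_stream` and the `upload` callback are hypothetical names; in practice `upload` would wrap whatever call writes to the blob container, which I have not tested at 1 TB scale.

```python
import logging
import tarfile
from typing import BinaryIO, Callable, List

logger = logging.getLogger("untar")


def extract_stream(
    source: BinaryIO,
    upload: Callable[[str, BinaryIO], None],
) -> List[str]:
    """Stream a .tar.gz and hand each regular file to `upload`.

    Returns the names of members whose upload raised an exception.
    `upload` is a hypothetical callback, e.g. a wrapper around a storage SDK.
    """
    skipped: List[str] = []
    # Mode "r|gz" reads the archive strictly front to back without seeking,
    # which suits very large inputs.
    with tarfile.open(fileobj=source, mode="r|gz") as archive:
        for member in archive:
            if not member.isfile():
                continue  # ignore directories, links, devices
            data = archive.extractfile(member)
            if data is None:
                continue
            try:
                upload(member.name, data)
            except Exception as exc:
                # Log and skip instead of failing the whole run.
                logger.warning("skipped %r: %s", member.name, exc)
                skipped.append(member.name)
    return skipped
```

The returned list gives the skipped names, which is the logging I would want from the Copy Data activity as well.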

Thanks!

Jimmy B