Hello,

I have managed to pre-process the data in the files with the following code:

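```python
from azureml.core import Dataset, Datastore, Run

# Get the current run and the workspace it belongs to
run = Run.get_context()
ws = run.experiment.workspace

# Read every CSV under the upload folder into a single tabular dataset
datastore = Datastore.get(ws, 'workspaceblobstore')
data_paths = [(datastore, 'UI/08-26-2021_014718_UTC/**/*.csv')]
tabular = Dataset.Tabular.from_delimited_files(path=data_paths)

dataframe1 = tabular.to_pandas_dataframe()
```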
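One way to keep track of which file each row came from, sketched under assumptions: `from_delimited_files` accepts an `include_path=True` argument that keeps the source file path as a column. The column name `Path` used below is an assumption (check `dataframe1.columns`), and `iter_wells` is a hypothetical helper that regroups the combined DataFrame into one DataFrame per CSV file.

```python
import posixpath

import pandas as pd


def iter_wells(dataframe, path_col="Path"):
    """Yield (well_name, rows) pairs, one per source CSV file.

    Assumes `dataframe` has a column (default name "Path", an assumption)
    holding the path of the file each row was read from.
    """
    for file_path, rows in dataframe.groupby(path_col, sort=True):
        # "/UI/.../well_A.csv" -> "well_A"
        well_name = posixpath.splitext(posixpath.basename(file_path))[0]
        # Drop the bookkeeping column so only the original data remains
        yield well_name, rows.drop(columns=[path_col]).reset_index(drop=True)
```

In the Azure script this would be used roughly as `tabular = Dataset.Tabular.from_delimited_files(path=data_paths, include_path=True)` followed by `for well, new_well in iter_wells(tabular.to_pandas_dataframe()): ...`, which mirrors the per-file loop in plain Python.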

This lets me modify and clean the data as needed. However, it is the same as creating one tabular dataset, so the rows used to train the model are picked at random (random selection per frame), whereas I need to train according to the CSV files (random selection per well/file). This is very simple in plain Python, but I have not yet managed to do it in Azure, especially since my workflow is already built in the designer (the data is pre-processed, the model is trained with hyperparameter tuning, and then evaluated).
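A minimal sketch of "random selection per well" instead of per row, assuming all rows sit in one DataFrame with a column that identifies the source file. The column name `well` and the function `split_by_well` are hypothetical. Wells, not rows, are shuffled, so every row of a given well lands entirely in either the training set or the test set.

```python
import numpy as np
import pandas as pd


def split_by_well(df, well_col="well", test_fraction=0.2, seed=0):
    """Split rows into train/test so that each well falls on only one side."""
    wells = df[well_col].unique()
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(wells)
    # At least one well goes to the test set
    n_test = max(1, int(round(len(wells) * test_fraction)))
    test_wells = set(shuffled[:n_test])
    is_test = df[well_col].isin(test_wells)
    return df[~is_test], df[is_test]
```

The same idea applies to cross-validation or hyperparameter tuning: any split has to be made on the well identifier rather than on individual rows, otherwise frames from the same well leak into both sides.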

The Python code I want to recreate:

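```python
import os

import pandas as pd

# Read each CSV file (one well) into its own DataFrame
for file in listOfFile:
    new_well = pd.read_csv(os.path.join(path, file))
```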
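A slightly extended sketch of that loop, assuming `path` is a local folder containing the CSV files. The helper `load_wells` and the added `well` column are hypothetical: each file is kept as its own DataFrame, keyed by its name, and tagged so the wells can still be told apart after concatenation.

```python
import os

import pandas as pd


def load_wells(path):
    """Read each CSV in `path` into its own DataFrame, keyed by well name."""
    wells = {}
    for file in sorted(os.listdir(path)):
        if file.lower().endswith(".csv"):
            well_name = os.path.splitext(file)[0]
            df = pd.read_csv(os.path.join(path, file))
            df["well"] = well_name  # hypothetical column identifying the source file
            wells[well_name] = df
    return wells
```

`pd.concat(load_wells(path).values(), ignore_index=True)` then gives a single DataFrame that still records which well each row belongs to, so it can be split per well rather than per row.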

This way, I can train with each new well, where each well corresponds to one CSV file.

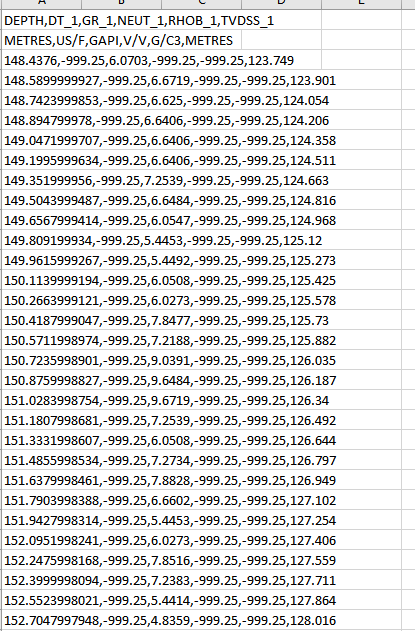

I am attaching an example of the CSV files I have to process (in total I have over 2,000 files).