Hi everyone,

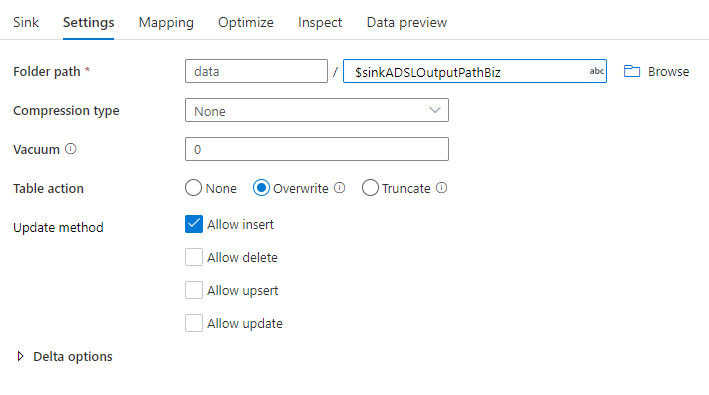

we are writing to a delta table in a synapse data flow using an inline dataset with the following settings:

With the table action set to Overwrite, each daily full load adds a new snapshot to the target table.

We left the vacuum setting at its default value of 0, which means a retention threshold of 30 days.

The day after every run, the files from the previous load are marked for removal in the transaction log:

{"remove":{"path":"part-00000-49dfde94-43b2-4444-a8b1-e683fb5552c5-c000.snappy.parquet","deletionTimestamp":1627905681270,"dataChange":true}}
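To make the timing concrete, here is a minimal Python sketch that converts the `deletionTimestamp` of the entry above (milliseconds since the Unix epoch) into a UTC date and checks it against a 30-day threshold. The function names are my own, and judging eligibility from `deletionTimestamp` alone is a simplifying assumption, not necessarily how the data flow or Delta Lake decides internally.

```python
import json
from datetime import datetime, timedelta, timezone

# Remove action copied verbatim from the transaction log entry above.
LOG_LINE = (
    '{"remove":{"path":"part-00000-49dfde94-43b2-4444-a8b1-e683fb5552c5-c000.snappy.parquet",'
    '"deletionTimestamp":1627905681270,"dataChange":true}}'
)

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def removal_time(log_line: str) -> datetime:
    """Return the UTC time at which the file was logically removed."""
    action = json.loads(log_line)["remove"]
    # deletionTimestamp is expressed in milliseconds since the Unix epoch.
    return EPOCH + timedelta(milliseconds=action["deletionTimestamp"])


def is_vacuum_candidate(log_line: str, now: datetime,
                        retention: timedelta = timedelta(days=30)) -> bool:
    """Simplified check (assumption): removed longer ago than the retention period."""
    return removal_time(log_line) < now - retention


if __name__ == "__main__":
    print(removal_time(LOG_LINE).isoformat())
    print(is_vacuum_candidate(LOG_LINE, datetime.now(timezone.utc)))
```

For this entry the removal time falls on 2 August 2021, so under the assumption above the file would be past a 30-day threshold from early September 2021 onwards.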

However, after a month the files are still not being removed from the table location.

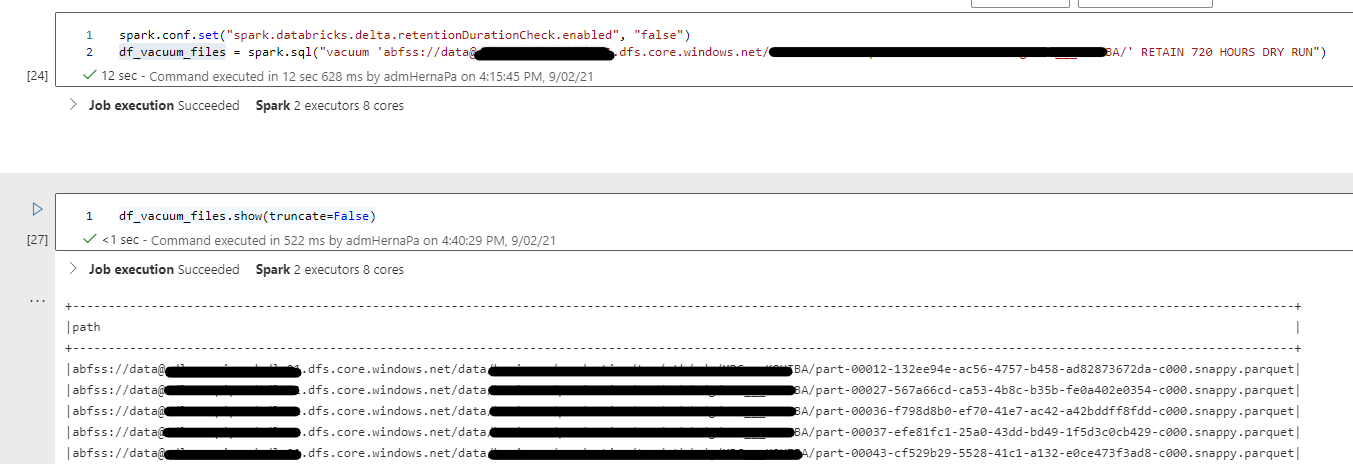

I analyzed which files should be removed using a dry run:

There are around 4000 files to be deleted.

If I execute a VACUUM in a notebook, the files are removed.
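For reference, a sketch of what the notebook side could look like, assuming a PySpark session with Delta Lake available. The helper names and the table path are hypothetical placeholders, and 720 hours is just the 30-day threshold expressed in hours. The statement itself is standard Delta Lake SQL (`VACUUM ... RETAIN n HOURS [DRY RUN]`).

```python
def vacuum_statement(table_path: str, retain_hours: int = 720,
                     dry_run: bool = True) -> str:
    """Build a Delta Lake VACUUM statement for a path-based table."""
    stmt = f"VACUUM delta.`{table_path}` RETAIN {retain_hours} HOURS"
    if dry_run:
        # DRY RUN only lists the files that would be deleted.
        stmt += " DRY RUN"
    return stmt


def run_vacuum(spark, table_path: str, retain_hours: int = 720,
               dry_run: bool = True):
    """Execute the statement on an existing SparkSession (passed in by the caller)."""
    return spark.sql(vacuum_statement(table_path, retain_hours, dry_run))


if __name__ == "__main__":
    # Hypothetical placeholder path; replace with the real table location.
    print(vacuum_statement("/path/to/delta/table"))
    print(vacuum_statement("/path/to/delta/table", dry_run=False))
```

In a notebook, `run_vacuum(spark, path).show(truncate=False)` would display the dry-run listing, and calling it again with `dry_run=False` would perform the actual deletion.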

I would like to know why the vacuum from the data flow is not removing the files that exceed the retention threshold. Am I missing something, or is this a bug?

Any information will be appreciated.

Best regards,

Paul Hernandez