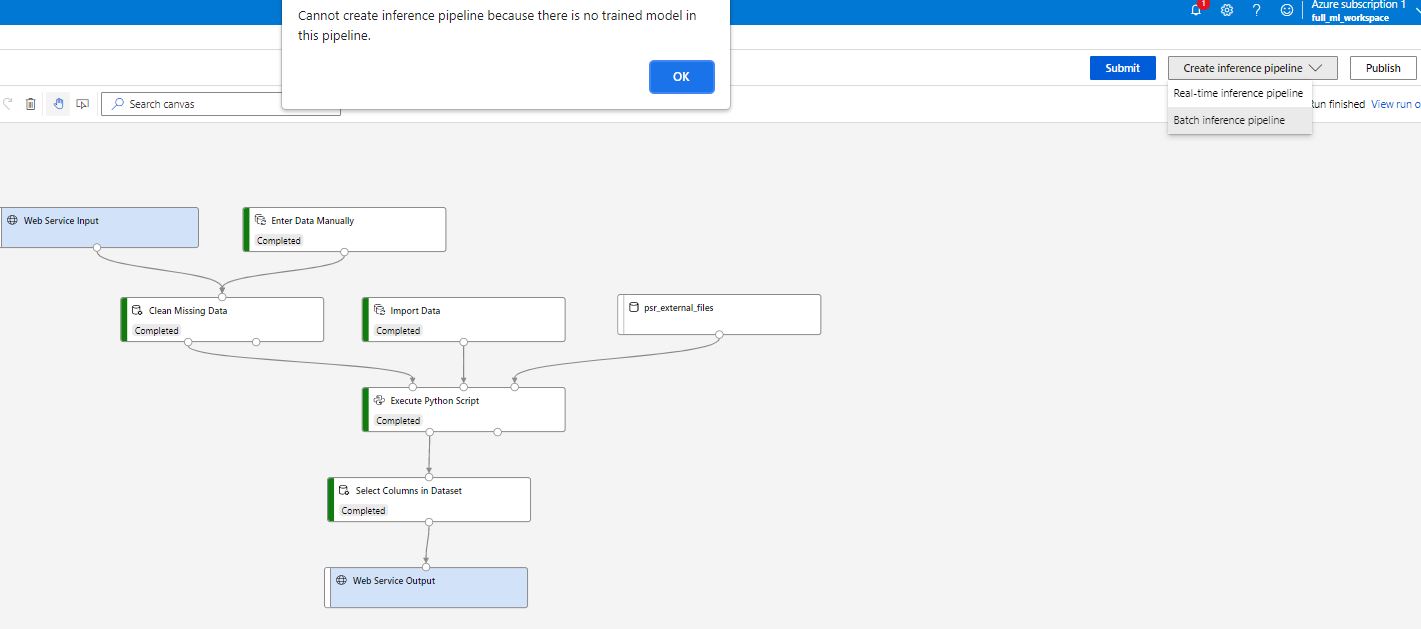

Hi, thanks for reaching out. Please review the following instructions for batch inference; your pipeline should look like the pipeline in that document. Web Service Input/Output modules are used in real-time inference pipelines, not in batch inference pipelines. Make sure to update and resubmit the pipeline, then try batch inference again. Hope this helps!
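Once a batch inference pipeline has been published, it is typically triggered through its REST endpoint. The sketch below shows what such a call might look like using only the Python standard library. It assumes the request body uses the `ExperimentName` / `ParameterAssignments` fields that published Azure ML pipelines accept, and that you already have an Azure AD bearer token (for example from `az account get-access-token`). The endpoint URL, token, experiment name, and parameter names are hypothetical placeholders; the function only builds the request and does not send it.

```python
import json
import urllib.request


def build_pipeline_request(endpoint_url, token, experiment_name, parameters=None):
    """Build (but do not send) a POST request that triggers a published pipeline.

    All arguments are placeholders to be replaced with values from your own
    workspace; the body shape assumes the ExperimentName / ParameterAssignments
    convention used when submitting runs to a published pipeline endpoint.
    """
    body = {
        "ExperimentName": experiment_name,
        # Pipeline parameters, if the published pipeline defines any.
        "ParameterAssignments": parameters or {},
    }
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical values: use the REST endpoint shown for your published pipeline.
    req = build_pipeline_request(
        "https://example.invalid/published-pipeline-endpoint",
        "<aad-token>",
        "batch-scoring",
    )
    print(req.get_method(), req.full_url)
    # To actually submit the run: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to inspect the payload before pointing it at a real endpoint.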

Deploy Azure ML pipeline as web app: Inference Pipeline Error (No Model)

David Eeckhout


Since Azure ML Studio (classic) is being retired, I want to recreate my pipeline (see image). It works when I run it, but how can I deploy it? When I try to create a batch inference pipeline, I get the error 'Cannot create inference pipeline because there is no trained model in this pipeline.'

How can I deploy this pipeline and get endpoints?

Thanks

Azure Machine Learning
