I'm loading some data (from a parquet file, as it happens) and, after some filtering and preparation, I want to write the output to a JSON sink.

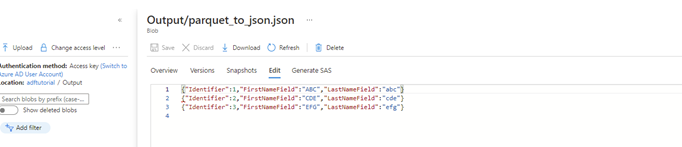

I have no trouble reading the data, or even writing it. The problem is the format of the output, which looks like this:

    {"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07","rowTimestamp":"2021-09-28 13:00:00","rowType":"Index","rowValue":436574,"rowChange":2449}
    {"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07","rowTimestamp":"2021-09-28 14:00:00","rowType":"Index","rowValue":437234,"rowChange":660}

I can't use this file as it stands, because it's written in 'Document per line' format. Each line is a valid JSON object on its own, but the file as a whole isn't valid JSON (JSONLint rejects it).
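To illustrate the distinction outside Data Factory, here is a short Python check using only the standard `json` module. It takes the two records from the sink output above and shows that the text fails to parse as one JSON document, while each line parses fine on its own. The helper name `parses_as_single_document` is hypothetical.

```python
import json

# The two records from the sink output, one JSON document per line
# ("Document per line", also known as JSON Lines).
jsonl_output = (
    '{"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07",'
    '"rowTimestamp":"2021-09-28 13:00:00","rowType":"Index","rowValue":436574,"rowChange":2449}\n'
    '{"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07",'
    '"rowTimestamp":"2021-09-28 14:00:00","rowType":"Index","rowValue":437234,"rowChange":660}\n'
)


def parses_as_single_document(text: str) -> bool:
    """Return True if the whole text is exactly one valid JSON value."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        return False


# The file as a whole is rejected: after the first object the parser
# finds a second "{" ("Extra data") instead of the end of input.
print(parses_as_single_document(jsonl_output))  # False

# Each non-empty line, taken on its own, is a valid JSON object.
records = [json.loads(line) for line in jsonl_output.splitlines() if line.strip()]
print(len(records))  # 2
```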

Valid JSON would be a single array containing those objects:

    [{"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07","rowTimestamp":"2021-09-28 13:00:00","rowType":"Index","rowValue":436574,"rowChange":2449},
    {"meterId":"21IB04060","servicePointId":"47434","transmitterId":"4CAE000040606B07","rowTimestamp":"2021-09-28 14:00:00","rowType":"Index","rowValue":437234,"rowChange":660}]

So my question is: how can I produce this output in a sensible way? I've read on forums that I could run a second flow that reads the JSON output I'm generating and sets the format there, so that it writes the JSON in the format I want. That seems like a crazy workaround, though.
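The second pass described above is essentially a format conversion: read every line as a document, then write all of them out as one array. As an illustration only (plain Python with the standard `json` module, not a Data Factory feature), a minimal sketch might look like this. The function names and file paths are hypothetical.

```python
import json


def jsonl_to_json_array(jsonl_text: str) -> str:
    """Rewrite 'document per line' text as a single JSON array string."""
    # Skip blank lines; every other line must hold one complete JSON document.
    records = [json.loads(line) for line in jsonl_text.splitlines() if line.strip()]
    # separators=(",", ":") keeps the compact style of the original sink output.
    return json.dumps(records, separators=(",", ":"))


def convert_file(src_path: str, dst_path: str) -> None:
    """Hypothetical second pass: read a JSON Lines file, write a JSON array file."""
    with open(src_path, encoding="utf-8") as src:
        array_text = jsonl_to_json_array(src.read())
    with open(dst_path, "w", encoding="utf-8") as dst:
        dst.write(array_text)


if __name__ == "__main__":
    # Records from the question, trimmed to two fields for brevity.
    sample = (
        '{"meterId":"21IB04060","rowValue":436574}\n'
        '{"meterId":"21IB04060","rowValue":437234}\n'
    )
    print(jsonl_to_json_array(sample))
    # [{"meterId":"21IB04060","rowValue":436574},{"meterId":"21IB04060","rowValue":437234}]
```

This sketch reads the whole file into memory, which is fine for modest outputs; for very large files, a version that streams line by line and writes the commas and brackets itself would avoid holding everything at once.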

Any suggestions would be awesome.

Thanks