A few seconds before the application pool error, I can see several errors in the Application log in Event Viewer:

Source: MSExchange Common

ID: 4999

Watson report about to be sent for process id: 26864, with parameters: E12IIS, c-RTL-AMD64, 15.02.0922.013, w3wp#MSExchangeMapiMailboxAppPool, M.E.Data.Storage, M.E.D.S.CoreAttachmentCollection.OnAfterAttachmentSave, System.ArgumentException, 743f-dumptidset, 15.02.0922.013.

ErrorReportingEnabled: True

Source: ASP.NET 4.0.30319.0

ID: 1325

An unhandled exception occurred and the process was terminated.

Application ID: DefaultDomain

Process ID: 26864

Exception: System.Runtime.Serialization.SerializationException

Message: Type 'Microsoft.Exchange.Diagnostics.ExWatson+CrashNowException' in Assembly 'Microsoft.Exchange.Diagnostics, Version=15.0.0.0, Culture=neutral, PublicKeyToken=31bf3856ad364e35' is not marked as serializable.

StackTrace: at System.Runtime.Serialization.Formatters.Binary.WriteObjectInfo.InitSerialize(Object obj, ISurrogateSelector surrogateSelector, StreamingContext context, SerObjectInfoInit serObjectInfoInit, IFormatterConverter converter, ObjectWriter objectWriter, SerializationBinder binder)

at System.Runtime.Serialization.Formatters.Binary.WriteObjectInfo.Serialize(Object obj, ISurrogateSelector surrogateSelector, StreamingContext context, SerObjectInfoInit serObjectInfoInit, IFormatterConverter converter, ObjectWriter objectWriter, SerializationBinder binder)

at System.Runtime.Serialization.Formatters.Binary.ObjectWriter.Serialize(Object graph, Header[] inHeaders, __BinaryWriter serWriter, Boolean fCheck)

at System.Runtime.Serialization.Formatters.Binary.BinaryFormatter.Serialize(Stream serializationStream, Object graph, Header[] headers, Boolean fCheck)

at System.Runtime.Remoting.Channels.CrossAppDomainSerializer.SerializeObject(Object obj, MemoryStream stm)

at System.AppDomain.Serialize(Object o)

at System.AppDomain.MarshalObject(Object o)

Source: .NET Runtime

ID: 1026

Application: w3wp.exe

Framework Version: v4.0.30319

Description: The process was terminated due to an unhandled exception.

Exception Info: System.ArgumentException

at System.ThrowHelper.ThrowArgumentException(System.ExceptionResource)

at System.Collections.Generic.TreeSet1[[System.Collections.Generic.KeyValuePair2[[System.Int32, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089],[System.__Canon, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]], mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]].AddIfNotPresent(System.Collections.Generic.KeyValuePair2<Int32,System.__Canon>)

at System.Collections.Generic.SortedDictionary2[[System.Int32, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089],[System.__Canon, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]].Add(Int32, System.__Canon)

at Microsoft.Exchange.Data.Storage.CoreAttachmentCollection.OnAfterAttachmentSave(Int32)

at Microsoft.Exchange.Data.Storage.AttachmentPropertyBag.SaveChanges(Boolean)

at Microsoft.Exchange.Data.Storage.CoreAttachment.Save()

at Microsoft.Exchange.RpcClientAccess.Handler.Attachment.SaveChanges(Microsoft.Exchange.RpcClientAccess.Parser.SaveChangesMode)

at Microsoft.Exchange.RpcClientAccess.Handler.RopHandler+<>c__DisplayClass120_0.<SaveChangesAttachment>b__0()

at Microsoft.Exchange.RpcClientAccess.Handler.ExceptionTranslator.TryExecuteCatchAndTranslateExceptions[System.__Canon, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]

at Microsoft.Exchange.RpcClientAccess.Handler.RopHandlerHelper.CallHandler[System.__Canon, mscorlib, Version=4.0.0.0, Culture=neutral, PublicKeyToken=b77a5c561934e089]

at Microsoft.Exchange.RpcClientAccess.Handler.RopHandlerHelper.CallHandler(Microsoft.Exchange.RpcClientAccess.Parser.IRopHandler, System.Func1<Microsoft.Exchange.RpcClientAccess.Parser.RopResult>, Microsoft.Exchange.RpcClientAccess.Parser.IResultFactory, System.Func4<Microsoft.Exchange.RpcClientAccess.Parser.IResultFactory,Microsoft.Exchange.RpcClientAccess.ErrorCode,System.Exception,Microsoft.Exchange.RpcClientAccess.Parser.RopResult>)

at Microsoft.Exchange.RpcClientAccess.Handler.RopHandler.GenericRopHandlerMethod(Microsoft.Exchange.RpcClientAccess.Parser.IServerObject, System.Func1<Microsoft.Exchange.RpcClientAccess.Parser.RopResult>, Microsoft.Exchange.RpcClientAccess.Parser.IResultFactory, System.Func4<Microsoft.Exchange.RpcClientAccess.Parser.IResultFactory,Microsoft.Exchange.RpcClientAccess.ErrorCode,System.Exception,Microsoft.Exchange.RpcClientAccess.Parser.RopResult>)

at Microsoft.Exchange.RpcClientAccess.Handler.RopHandler.StandardRopHandlerMethod(Microsoft.Exchange.RpcClientAccess.Parser.IServerObject, System.Func1<Microsoft.Exchange.RpcClientAccess.Parser.RopResult>, Microsoft.Exchange.RpcClientAccess.Parser.IResultFactory)

at Microsoft.Exchange.RpcClientAccess.Parser.RopSaveChangesAttachment.InternalExecute(Microsoft.Exchange.RpcClientAccess.Parser.IServerObject, Microsoft.Exchange.RpcClientAccess.Parser.IRopHandler, System.ArraySegment1<Byte>)

at Microsoft.Exchange.RpcClientAccess.Parser.InputRop.Execute(Microsoft.Exchange.RpcClientAccess.Parser.IConnectionInformation, Microsoft.Exchange.RpcClientAccess.Parser.IRopDriver, Microsoft.Exchange.RpcClientAccess.Parser.ServerObjectHandleTable, System.ArraySegment1<Byte>)

at Microsoft.Exchange.RpcClientAccess.Parser.RopDriver.ExecuteRops(System.Collections.Generic.List1<System.ArraySegment1<Byte>>, Microsoft.Exchange.RpcClientAccess.Parser.ServerObjectHandleTable, System.ArraySegment1<Byte>, Int32, Int32, Boolean, Int32 ByRef, Microsoft.Exchange.RpcClientAccess.Parser.AuxiliaryData, Boolean, Byte[] ByRef)

at Microsoft.Exchange.RpcClientAccess.Parser.RopDriver.ExecuteOrBackoff(System.Collections.Generic.IList1<System.ArraySegment1<Byte>>, System.ArraySegment1<Byte>, Int32 ByRef, Microsoft.Exchange.RpcClientAccess.Parser.AuxiliaryData, Boolean, Byte[] ByRef)

at Microsoft.Exchange.RpcClientAccess.Parser.RopDriver.Execute(System.Collections.Generic.IList1<System.ArraySegment1<Byte>>, System.ArraySegment1<Byte>, Int32 ByRef, Microsoft.Exchange.RpcClientAccess.Parser.AuxiliaryData, Boolean, Byte[] ByRef)

at Microsoft.Exchange.RpcClientAccess.Server.RpcDispatch+<>c__DisplayClass49_0.<Execute>b__2()

at Microsoft.Exchange.RpcClientAccess.Server.RpcDispatch.ExecuteWrapper(System.Func1<ExecuteParameters>, System.Func1<Microsoft.Exchange.RpcClientAccess.RpcErrorCode>, System.Action1<System.Exception>)

at Microsoft.Exchange.RpcClientAccess.Server.RpcDispatch.Execute(Microsoft.Exchange.Rpc.IProtocolRequestInfo, IntPtr ByRef, System.Collections.Generic.IList1<System.ArraySegment1<Byte>>, System.ArraySegment1<Byte>, Int32 ByRef, System.ArraySegment1<Byte>, System.ArraySegment1<Byte>, Int32 ByRef, Boolean, Byte[] ByRef)

at Microsoft.Exchange.RpcClientAccess.Server.WatsonOnUnhandledExceptionDispatch+<>c__DisplayClass32_0.<Microsoft.Exchange.RpcClientAccess.Server.IRpcDispatch.Execute>b__0()

at Microsoft.Exchange.Common.IL.ILUtil.DoTryFilterCatch(System.Action, System.Func2<System.Object,Boolean>, System.Action1<System.Object>)

Exception Info: Microsoft.Exchange.Diagnostics.ExWatson+CrashNowException

at Microsoft.Exchange.Diagnostics.ExWatson.CrashNow()

at System.Threading.ExecutionContext.RunInternal(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

at System.Threading.ExecutionContext.Run(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object, Boolean)

at System.Threading.ExecutionContext.Run(System.Threading.ExecutionContext, System.Threading.ContextCallback, System.Object)

at System.Threading.ThreadHelper.ThreadStart()

Source: Application Error

ID: 1000

Faulting application name: w3wp.exe, version: 10.0.17763.1, time stamp: 0xcfdb13d8

Faulting module name: KERNELBASE.dll, version: 10.0.17763.2183, time stamp: 0x8e097f91

Exception code: 0xe0434352

Fault offset: 0x0000000000039329

Faulting process id: 0x68f0

Faulting application start time: 0x01d7b51c6fb1ee51

Faulting application path: c:\windows\system32\inetsrv\w3wp.exe

Faulting module path: C:\Windows\System32\KERNELBASE.dll

Report Id: 0a62472a-6a07-48b1-9b7c-a19f297900c3

Faulting package full name:
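To check that the four events belong to the same crash, the hexadecimal fields of the Application Error event can be decoded and compared with the other entries. The following is only a rough sketch: the helper names are made up for illustration, and it assumes that the "Faulting application start time" is a Windows FILETIME value (100-nanosecond intervals since 1601-01-01 UTC).

```python
from datetime import datetime, timedelta, timezone

# Values copied from the "Application Error" (ID 1000) event above.
FAULTING_PID_HEX = "0x68f0"
START_TIME_HEX = "0x01d7b51c6fb1ee51"
EXCEPTION_CODE_HEX = "0xe0434352"


def hex_to_int(value: str) -> int:
    """Convert a hex string such as '0x68f0' to an integer."""
    return int(value, 16)


def filetime_to_utc(value: str) -> datetime:
    """Interpret a hex value as a FILETIME (assumption) and return UTC time."""
    ticks = hex_to_int(value)  # 100-ns intervals since 1601-01-01
    epoch = datetime(1601, 1, 1, tzinfo=timezone.utc)
    return epoch + timedelta(microseconds=ticks // 10)


def exception_code_tag(value: str) -> str:
    """Return the low three bytes of the exception code as ASCII text."""
    code = hex_to_int(value)
    return (code & 0x00FFFFFF).to_bytes(3, "big").decode("ascii")


pid = hex_to_int(FAULTING_PID_HEX)
start_time = filetime_to_utc(START_TIME_HEX)
tag = exception_code_tag(EXCEPTION_CODE_HEX)

print(pid)                     # 26864
print(start_time.isoformat())  # worker process start time in UTC
print(tag)                     # CCR
```

The decoded process ID, 26864, is the same process ID reported in the MSExchange Common and ASP.NET events, so all entries refer to the same w3wp.exe worker process. The low bytes of the exception code spell "CCR", which is the code the .NET runtime uses for an unhandled managed exception, consistent with the .NET Runtime event 1026.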

We do not need to do anything to get it working again; it recovers by itself. During this time, Outlook stops responding for a few minutes.

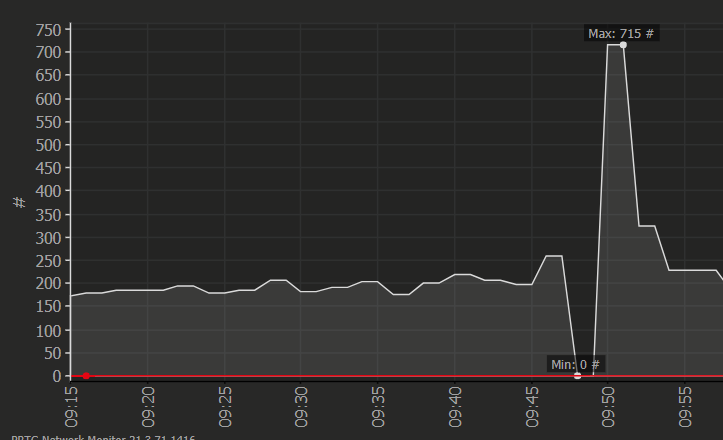

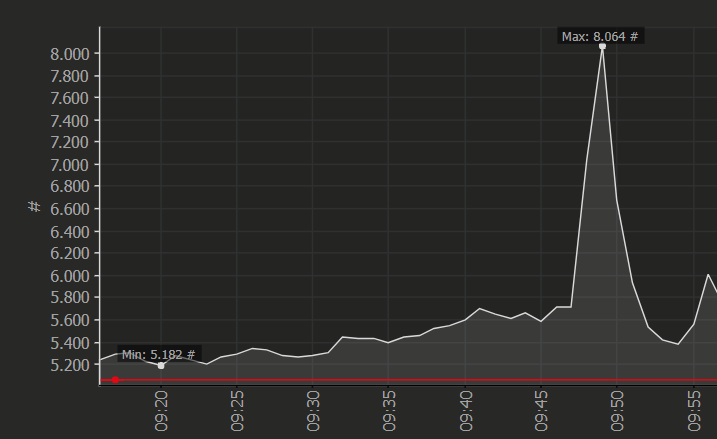

We use PRTG for monitoring. At the time of the error, it shows the following:

MAPI connections drop to 0, spike briefly, and then return to their previous level:

There is a spike in IIS Current Connections:

Additionally, there is an increase in network traffic and CPU load.