Our engineering team has confirmed that this is indeed a bug and that a fix is in the works. As a workaround, you will have to stop and then start the App Service instead of restarting it, because a restart only recycles the app. From a deployment standpoint, create a placeholder app setting that your pipeline then modifies to force a recycle of the worker.
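The workaround above could be scripted from a pipeline roughly as follows. This is only a minimal sketch: it builds (and optionally runs) Azure CLI calls, and it assumes the `az` CLI is installed and logged in. The resource group `my-rg`, the app name `my-app`, and the setting name `DEPLOY_MARKER` are hypothetical placeholders, not names from this thread.

```python
import subprocess
from datetime import datetime, timezone
from typing import List, Optional


def stop_start_commands(resource_group: str, app_name: str) -> List[List[str]]:
    """Build the az CLI calls for a full stop followed by a start."""
    target = ["--resource-group", resource_group, "--name", app_name]
    return [
        ["az", "webapp", "stop", *target],
        ["az", "webapp", "start", *target],
    ]


def touch_setting_command(
    resource_group: str,
    app_name: str,
    setting: str = "DEPLOY_MARKER",  # hypothetical placeholder setting name
    value: Optional[str] = None,
) -> List[str]:
    """Build the az CLI call that changes a placeholder app setting."""
    if value is None:
        # A UTC timestamp guarantees the value differs on every deployment.
        value = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    return [
        "az", "webapp", "config", "appsettings", "set",
        "--resource-group", resource_group,
        "--name", app_name,
        "--settings", f"{setting}={value}",
    ]


def run_all(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run only: prints the commands instead of calling Azure.
    run_all(stop_start_commands("my-rg", "my-app"))
    run_all([touch_setting_command("my-rg", "my-app")])
```

Keeping `dry_run=True` as the default makes it easy to review the generated commands in a pipeline log before letting the script touch the app.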

Azure App Service is mounted to an incorrect mount upon restart

We deployed a small application to a Linux Azure App Service using a Docker Compose file. Everything works well, but because all our saved data is deleted on restart, I decided to use an Azure File Share to persist data between deploys. I mounted the share and set it as a volume inside the compose file. The Storage account has rights to access the application. Upon restarting the application, the files are correctly saved on the File Share.

When SSHing to the application and running "df -h", this is the output (the part in bold is our File Share; vrp-file-share is the name of the share). EDIT: It seems that I'm unable to bold parts of the code; it's in line 7, with the double asterisk at the beginning.

```
Filesystem      Size  Used Avail Use% Mounted on
none             48G   31G   15G  68% /
tmpfs            64M     0   64M   0% /dev
tmpfs           962M     0  962M   0% /sys/fs/cgroup
shm              62M     0   62M   0% /dev/shm
/dev/sdb1        62G   57G  5.4G  92% /var/ssl
**//vrpdata.file.core.windows.net/vrp-file-share  5.0T  384K  5.0T   1% /data**
/dev/loop0p1     48G   31G   15G  68% /etc/hosts
udev            944M     0  944M   0% /dev/tty
tmpfs           962M     0  962M   0% /proc/acpi
tmpfs           962M     0  962M   0% /proc/scsi
tmpfs           962M     0  962M   0% /sys/firmware
```

Now, when I next restart the application, this breaks. It seems that another mount replaces our mounted File Share, and the data is saved on it instead of on our share. This is what the df -h command returns now:

```
Filesystem      Size  Used Avail Use% Mounted on
none             48G   31G   15G  68% /
tmpfs            64M     0   64M   0% /dev
tmpfs           962M     0  962M   0% /sys/fs/cgroup
shm              62M     0   62M   0% /dev/shm
/dev/sdb1        62G   57G  5.2G  92% /var/ssl
**default//10.0.160.16/volume-42-/3cd5eafffc987ed198a3/81e14fea9026459689e7e84d07e6a841  1000G  3.0G  998G   1% /data**
/dev/loop0p1     48G   31G   15G  68% /etc/hosts
udev            944M     0  944M   0% /dev/tty
tmpfs           962M     0  962M   0% /proc/acpi
tmpfs           962M     0  962M   0% /proc/scsi
tmpfs           962M     0  962M   0% /sys/firmware
```

It looks like the File Share has been replaced by the default drive, and I can't figure out why that drive is mounted. The application stays in this state until I change something in the configuration (e.g. the mount path or the compose file) and restart it. Then it goes back to working as before, but another restart brings back the issue described above. I'm completely stuck; if anyone has any idea why this is happening, I'd be very thankful. I'm happy to answer any questions.
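To tell the two states apart without reading the listing by eye, a small check along these lines could be run inside the container. It is a sketch, not something from the original setup: the helper names `mount_source` and `is_expected_share` are made up for illustration, and the check assumes an Azure Files mount always shows a `*.file.core.windows.net` source, as in the first listing.

```python
from typing import Optional


def mount_source(df_output: str, mount_point: str) -> Optional[str]:
    """Return the Filesystem column of the df row mounted at mount_point."""
    for line in df_output.splitlines():
        # Drop surrounding whitespace and any ** emphasis markers.
        fields = line.strip().strip("*").split()
        # A df -h data row has six columns; the last one is the mount point.
        # The header row splits into seven fields and is skipped.
        if len(fields) == 6 and fields[5] == mount_point:
            return fields[0]
    return None


def is_expected_share(source: Optional[str], share_name: str) -> bool:
    """True if the source looks like the named Azure Files share."""
    return (
        source is not None
        and ".file.core.windows.net/" in source
        and source.endswith("/" + share_name)
    )


if __name__ == "__main__":
    import subprocess

    # Run df -h in the container and inspect the row for /data.
    listing = subprocess.run(
        ["df", "-h"], capture_output=True, text=True, check=True
    ).stdout
    source = mount_source(listing, "/data")
    print(source, is_expected_share(source, "vrp-file-share"))
```

Calling this at application startup and logging the result would show on which restarts the share was swapped out.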

Just as an aside, the application is written in Python 3.7 and we're using the /data/jobs folder to save some data. I've tried searching for the answer for hours and it's possible that I'm missing the solution because I'm unable to properly word the question...

EDIT: I've set the WEBSITES_ENABLE_APP_SERVICE_STORAGE parameter to true, but it gets removed every so often, and not by me. I've checked, and the issue persists regardless of whether the parameter is set.

Azure Files

Azure Storage

Azure App Service


Ryan Hill 30,281 Reputation points Microsoft Employee Moderator

2021-10-27T19:29:42.787+00:00

1 additional answer


jsladovic 21 Reputation points

2021-10-04T09:47:41.337+00:00

@Ryan Hill I don't think there's anything overly confidential, so I'll share all of it here. I'll just omit the container registry name from the Docker images. Please let me know if I missed anything. The service shouldn't be connected to a VNet.

Docker Compose (I removed a couple of services for brevity; they are configured identically to the cost matrix engine. The VRP service is the one that uses the mount):

```yaml
services:
  vrp:
    image: {container_registry_name}.azurecr.io/vrp
    ports:
      - 6000:6000
    volumes:
      - vrp-data:/data/jobs
  cost-matrix-engine:
    image: {container_registry_name}.azurecr.io/cost-matrix-engine
    ports:
      - 3000:3000
```

The VRP Dockerfile; the RUN commands are mostly just to enable SSHing onto the service:

```dockerfile
FROM python:3.9.1-slim-buster
RUN apt-get -y -o Acquire::Max-FutureTime=86400 update
RUN apt-get install -y ssh
RUN echo "root:Docker!" | chpasswd
RUN mkdir /run/sshd
EXPOSE 6000 2222
COPY sshd_config /etc/ssh/
RUN ln -sf python3 /usr/bin/python & python3 -m ensurepip
WORKDIR /app
COPY requirements.txt requirements.txt
RUN pip install -r requirements.txt
COPY ./GDiEnsembleVRP ./GDiEnsembleVRP
COPY ./Common ./Common
RUN chmod +x "GDiEnsembleVRP/docker_entrypoint.sh"
ENTRYPOINT ["GDiEnsembleVRP/docker_entrypoint.sh"]
```

The docker entrypoint:

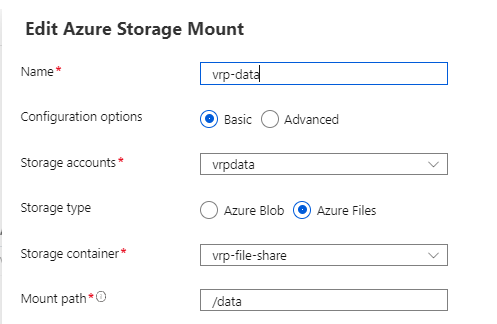

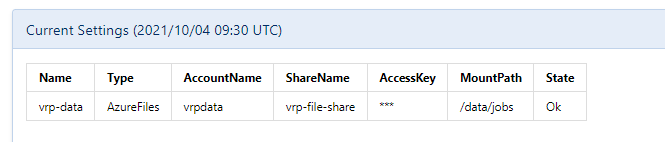

#!/bin/bash set -euo pipefail /usr/sbin/sshd gunicorn -b 0.0.0.0:6000 GDiEnsembleVRP.Apps.AsyncServerApp.wsgi:applicationThe storage mount configuration:

I'm not 100% certain what to expect on the External Storage Mount tab, but this is what I'm seeing both when it works and when it doesn't:

Just as a note, the mount path is /data in one image and /data/jobs in the other because I use that change to "reset" the storage. The same issue occurs with both settings.

EDIT: While this was a long shot, disabling the app service logs did nothing to fix the issue.