Hello @Joaquin Chemile,

Welcome to Microsoft Q&A platform.

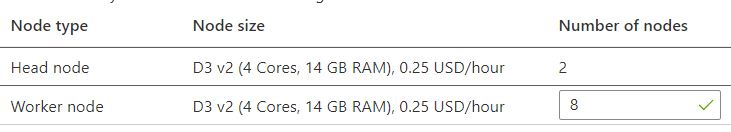

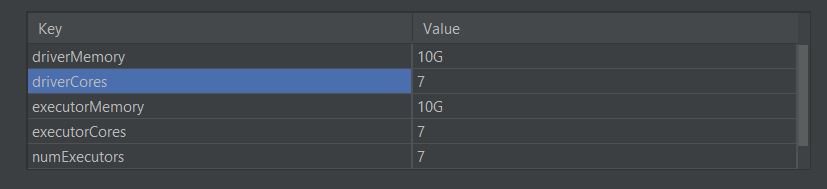

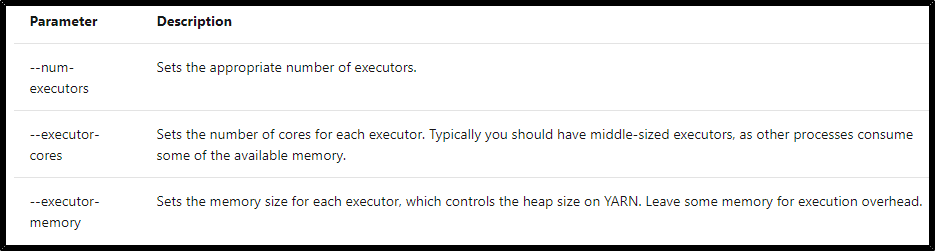

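Depending on your Spark workload, you may find that a non-default Spark configuration results in more optimized Spark job execution. Run benchmark tests with sample workloads to validate any non-default cluster configuration. Here are some common parameters you can adjust:

- `--num-executors`: sets the appropriate number of executors.
- `--executor-cores`: sets the number of cores for each executor. Typically you should use middle-sized executors, because other processes consume some of the available memory.
- `--executor-memory`: sets the memory size for each executor, which controls the heap size on YARN. Leave some memory for execution overhead.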
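As a minimal sketch (not an official HDInsight tool), the Python snippet below builds `spark-submit` commands for a few candidate executor configurations, so that each one can be benchmarked against the same sample workload. The `ExecutorConfig` class, the `build_command` helper, the `my_job.py` path, and the candidate values are all hypothetical. The container-size estimate assumes Spark's default `spark.executor.memoryOverhead` behavior (the larger of 384 MiB or 10% of the executor memory).

```python
import shlex
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutorConfig:
    """Hypothetical holder for the three executor settings discussed above."""
    num_executors: int
    executor_cores: int
    executor_memory_gb: int

    def to_spark_submit_args(self) -> list[str]:
        # --num-executors, --executor-cores and --executor-memory are standard
        # spark-submit options when running on YARN.
        return [
            "--num-executors", str(self.num_executors),
            "--executor-cores", str(self.executor_cores),
            "--executor-memory", f"{self.executor_memory_gb}g",
        ]

    def container_memory_mb(self) -> int:
        # Approximate YARN container size per executor: heap plus the default
        # overhead of max(384 MiB, 10% of heap). Assumes default overhead settings.
        heap_mb = self.executor_memory_gb * 1024
        return heap_mb + max(384, int(heap_mb * 0.10))


def build_command(config: ExecutorConfig, app_path: str, extra_args: tuple[str, ...] = ()) -> list[str]:
    # app_path is a placeholder for your own Spark application.
    return ["spark-submit", "--master", "yarn", *config.to_spark_submit_args(), app_path, *extra_args]


# Hypothetical candidate configurations to compare on a sample workload.
CANDIDATES = [
    ExecutorConfig(num_executors=4, executor_cores=4, executor_memory_gb=8),
    ExecutorConfig(num_executors=8, executor_cores=2, executor_memory_gb=4),
]

if __name__ == "__main__":
    for cfg in CANDIDATES:
        print(shlex.join(build_command(cfg, "my_job.py")), f"# ~{cfg.container_memory_mb()} MB per container")
```

Running it prints one command per candidate. You would then run each command against your sample workload and compare job durations before changing any cluster defaults.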

For more details, see the Azure HDInsight article that discusses how to optimize the configuration of your Apache Spark cluster for best performance.

For more information on using Ambari to configure executors, see Apache Spark settings - Spark executors.

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Please click "Accept Answer" and upvote the post if it helps you; this can benefit other community members.