Hi,

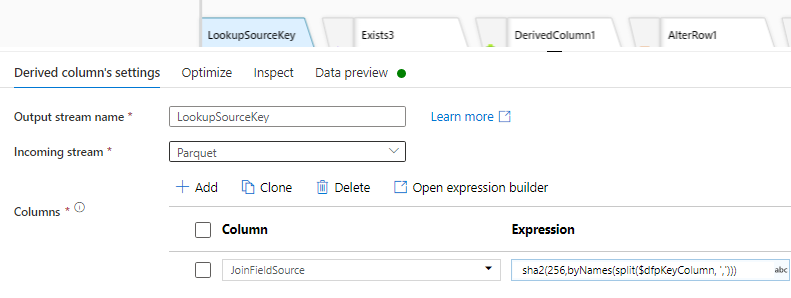

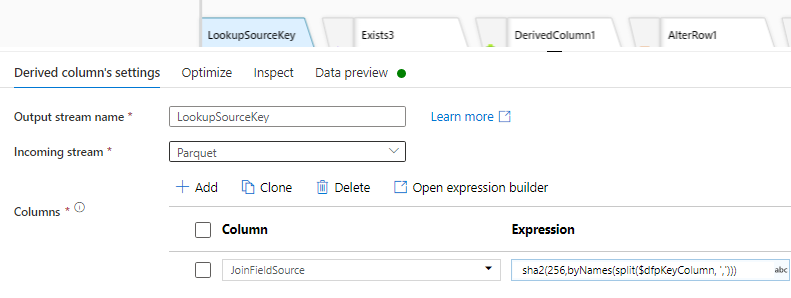

The pipeline dynamically loads data from the source into a Delta (parquet) sink. One of the parameters passed to the data flow is KeyColumn.

If the object being loaded has only one key column, everything works fine, i.e. update/insert/delete into the Delta parquet sink.

The problem occurs when there is more than one key column: the parquet file keeps getting new rows inserted for each of the keys instead of the existing rows being updated.

It looks like the key columns are not handled correctly in the data flow when there is more than one, e.g. "ProductID, LocationID".
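To illustrate what I suspect is happening, here is a minimal Python sketch. It is not how the data flow or the Delta sink actually works internally; `parse_key_columns` and `upsert` are hypothetical helpers I made up for the illustration. The assumption is that the whole string "ProductID, LocationID" is treated as a single column name, so no existing row ever matches and every incoming row is inserted.

```python
def parse_key_columns(raw: str) -> list[str]:
    """Split a comma-separated key parameter into trimmed column names."""
    return [name.strip() for name in raw.split(",") if name.strip()]


def upsert(target: list[dict], incoming: list[dict], keys: list[str]) -> list[dict]:
    """Hypothetical merge: update a row when all key columns match, else insert."""
    result = [dict(row) for row in target]
    for row in incoming:
        match = next(
            (
                i
                for i, existing in enumerate(result)
                # A key that is not a real column can never match.
                if all(k in existing and k in row and existing[k] == row[k] for k in keys)
            ),
            None,
        )
        if match is None:
            result.append(dict(row))  # no match: treated as an insert
        else:
            result[match] = dict(row)  # match: treated as an update
    return result


target = [{"ProductID": 1, "LocationID": 10, "Qty": 5}]
incoming = [{"ProductID": 1, "LocationID": 10, "Qty": 7}]

# Assumed faulty case: the whole string is used as one column name.
as_single_name = upsert(target, incoming, ["ProductID, LocationID"])

# Expected case: the string is split into two separate key columns.
as_two_keys = upsert(target, incoming, parse_key_columns("ProductID, LocationID"))
```

In this sketch the unsplit key leaves two rows for the same product and location, while the split keys leave one updated row, which matches the behaviour I am seeing versus the behaviour I expect.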

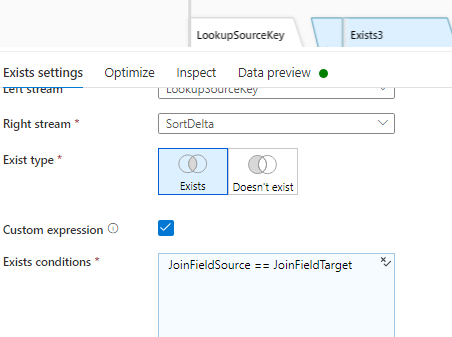

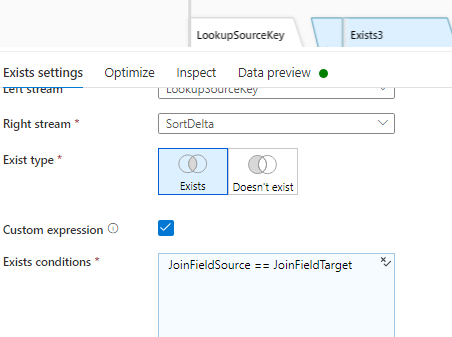

The Delta sink settings in the data flow are: update, delete, and insert.

I think the issue is with the update.

Any suggestions?