Hi Sathyamoorthy,

Thanks for looking into this. I truly appreciate it.

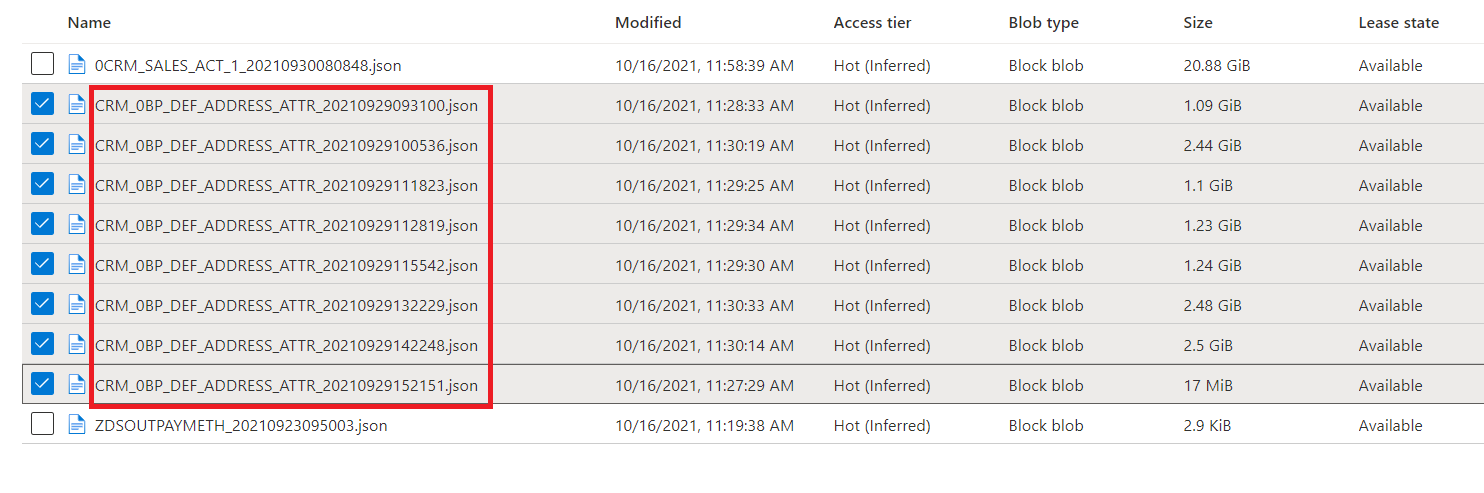

I am now developing in PRE-PROD, where the SAP extract jobs are failing. As a result, they are unfortunately sending multiple copies of a few files, each copy differing only in the HHMMSS part of the timestamp.

There were no such issues in the DEV and QA environments, so I did not run into this problem there.

In PRE-PROD, I copy each copy of a filename separately. Once it has been loaded into SQLDB at the end of the flow, I come back, pick up the next copy of that filename, and process it. Fortunately, only a few files have multiple copies on a given day, but I still need my pipeline to handle this case so that, once scheduled, it works in all scenarios.
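The ordering logic described above (load the earliest copy of every file first, then come back for the next copy) can be sketched as grouping files into processing rounds. This is only a minimal Python sketch, not the actual pipeline: it assumes a hypothetical naming convention `<NAME>_<YYYYMMDD>_<HHMMSS>.<ext>`, and `plan_copy_batches` is a hypothetical helper name. Adjust the regex to the real SAP extract filenames.

```python
import re
from collections import defaultdict

# Hypothetical naming convention (assumption): <NAME>_<YYYYMMDD>_<HHMMSS>.<ext>
FILENAME_RE = re.compile(
    r"^(?P<name>.+)_(?P<date>\d{8})_(?P<time>\d{6})\.(?P<ext>\w+)$"
)


def plan_copy_batches(filenames):
    """Group copies of the same logical file into processing rounds.

    Round 0 holds the earliest copy of every file, round 1 holds the second
    copy of files that have one, and so on. Each round can be copied and
    loaded into the database before the next round starts.
    """
    copies = defaultdict(list)
    for fname in filenames:
        m = FILENAME_RE.match(fname)
        if m is None:
            raise ValueError(f"Unexpected filename format: {fname}")
        # Copies share name, date and extension; only HHMMSS differs.
        key = (m["name"], m["date"], m["ext"])
        copies[key].append((m["time"], fname))

    rounds = []
    for key in sorted(copies):
        # HHMMSS is zero-padded, so string order is chronological order.
        for i, (_, fname) in enumerate(sorted(copies[key])):
            if i == len(rounds):
                rounds.append([])
            rounds[i].append(fname)
    return rounds


files = [
    "SALES_20240115_101500.csv",
    "SALES_20240115_093015.csv",
    "STOCK_20240115_093020.csv",
]
for n, batch in enumerate(plan_copy_batches(files)):
    print(n, batch)
```

Processing round by round means files with a single copy are loaded in the first pass, and only the few duplicated files need later passes, which matches the "come back for the subsequent copy" approach.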