Just got off a support call with Microsoft. A hotfix has addressed this situation. We are not longer seeing the issue.

Stream Analytics: SQL output and column order in SA query

Hi!

I have a Stream Analytics query that sends data to several tables.

Example:

select

node_info.timestamp_utc as timestamp_utc,

node_info.node_name as node_name,

props.battery_level as battery_level,

props.firmware_version as firmware_version,

props.humidity as humidity,

props.node_id as node_id,

props.reserve_byte as reserve_byte,

props.rssi as rssi,

props.temperature as temperature,

props.transmission_count as transmission_count,

props.type as type,

props.Voltage as voltage,

props.pressure as pressure

into [output-raw]

from node_info

The order in which the columns are in the SELECT clause, and actual order of columns in the SQL table are not matching, but the names in the SA query and the SQL Table columns are matching.

SA job was sending all data to the respective columns correctly before yesterday when I restarted the job.

I started getting data conversion errors in some tables and found out that the query was trying to send data to SQL table in that exact order as it is in the SELECT clause ignoring the column names.

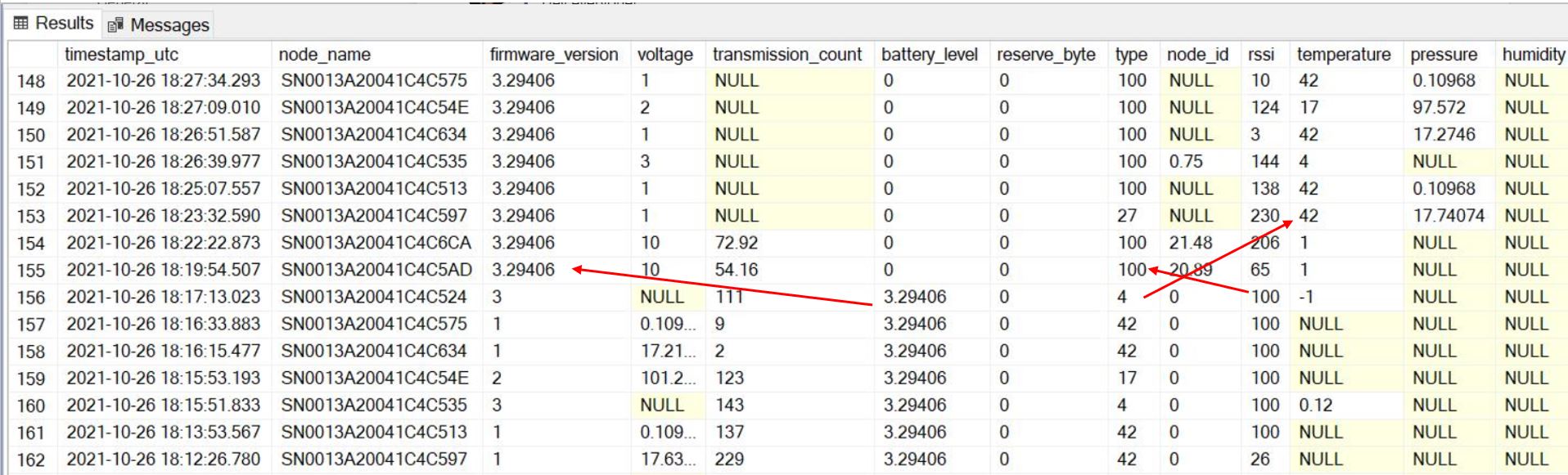

Where there was no data type conflict, the data just mixed up, you can see it in the example below:

Now I have an issue:

In one table, values in first 2 columns of the SQL table have default values generated automatically and I don't need to send them through SA job.

Let's say the columns are as follows:

EntityId - unique ID, generated by SQL

DateAdded - timestamp, generated by SQL

EventTimestamp - event timestamp that I generate in SA query

AlarmText - String that I generate in SA query

And the SA query for it looks like that

select

System.Timestamp() as EventTimestamp,

"Some alarms generated based on rules" as AlarmText

into [output-notifications]

from formatted_data

So before all data was getting to the respective table columns with no problems, but now it's trying to send EventTimestamp to the very first column of the output table (EntityId) as it is first in SELECT statement of the querty. And it's trying to send AlarmBody to the second (DateAdded ).

I understand that this change was made on backend recently and I haven't found anything on it in the documentation.

How do I handle skipping columns in this case?

Or how can I make the query send the data to the specified columns by column name as it was before?

Thank you!

1 additional answer

Sort by: Most helpful

-

Sasho Zahariev 36 Reputation points

Sasho Zahariev 36 Reputation points2021-10-28T13:07:29.13+00:00 Hello. Since Monday, we are having exactly the same issue - instead of inserting the mapped column to the respective mapped table column, the query tries to insert the first column from the output into the first column of the table.

Currently, there is no resolution and we plan to switch the technologies used, despite being in the middle of the project.