Originally posted on Stack Overflow: https://stackoverflow.com/questions/69745331/hdf5-usage-in-azure-blob

We store some data in HDF5 format on Azure Blob Storage. I have noticed higher-than-expected ingress traffic and used capacity when overwriting and modifying H5 files.

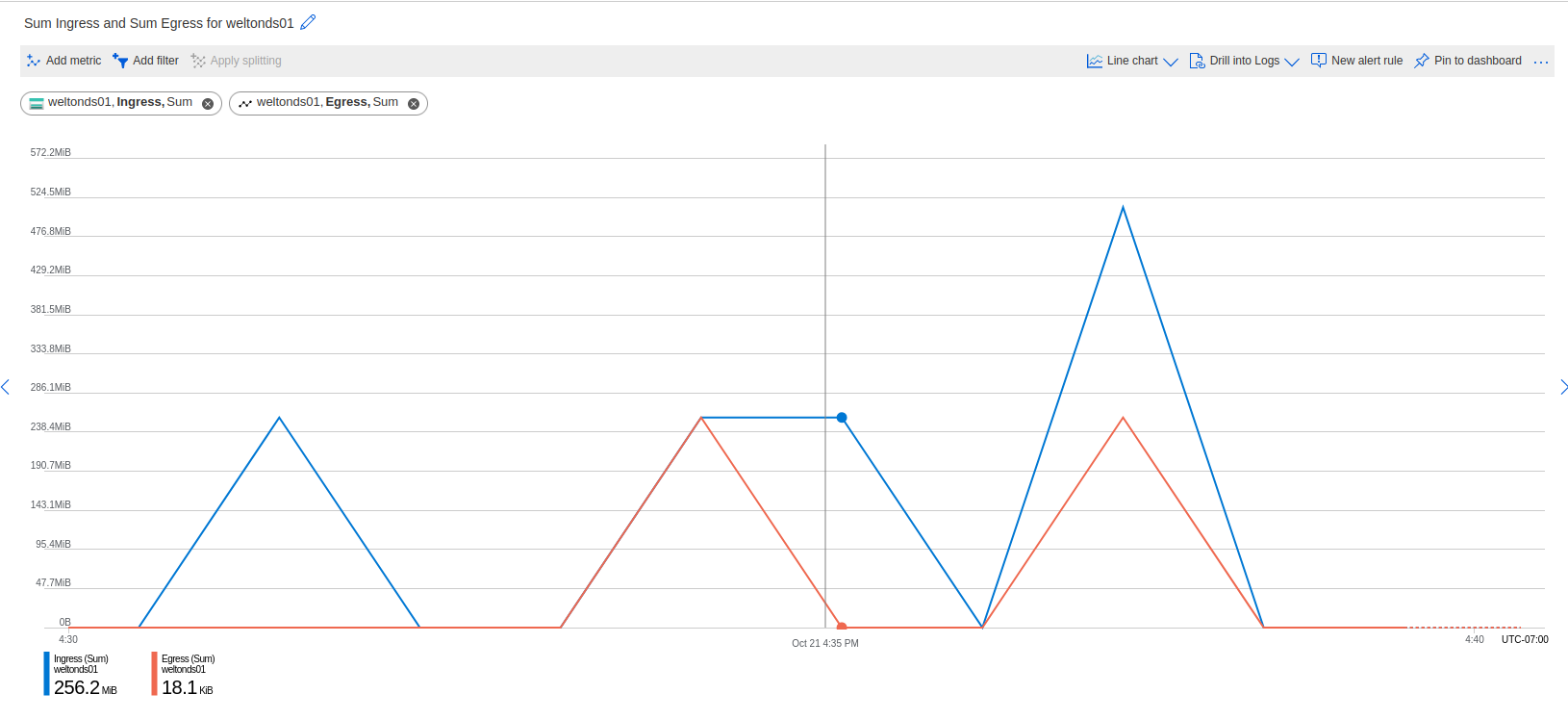

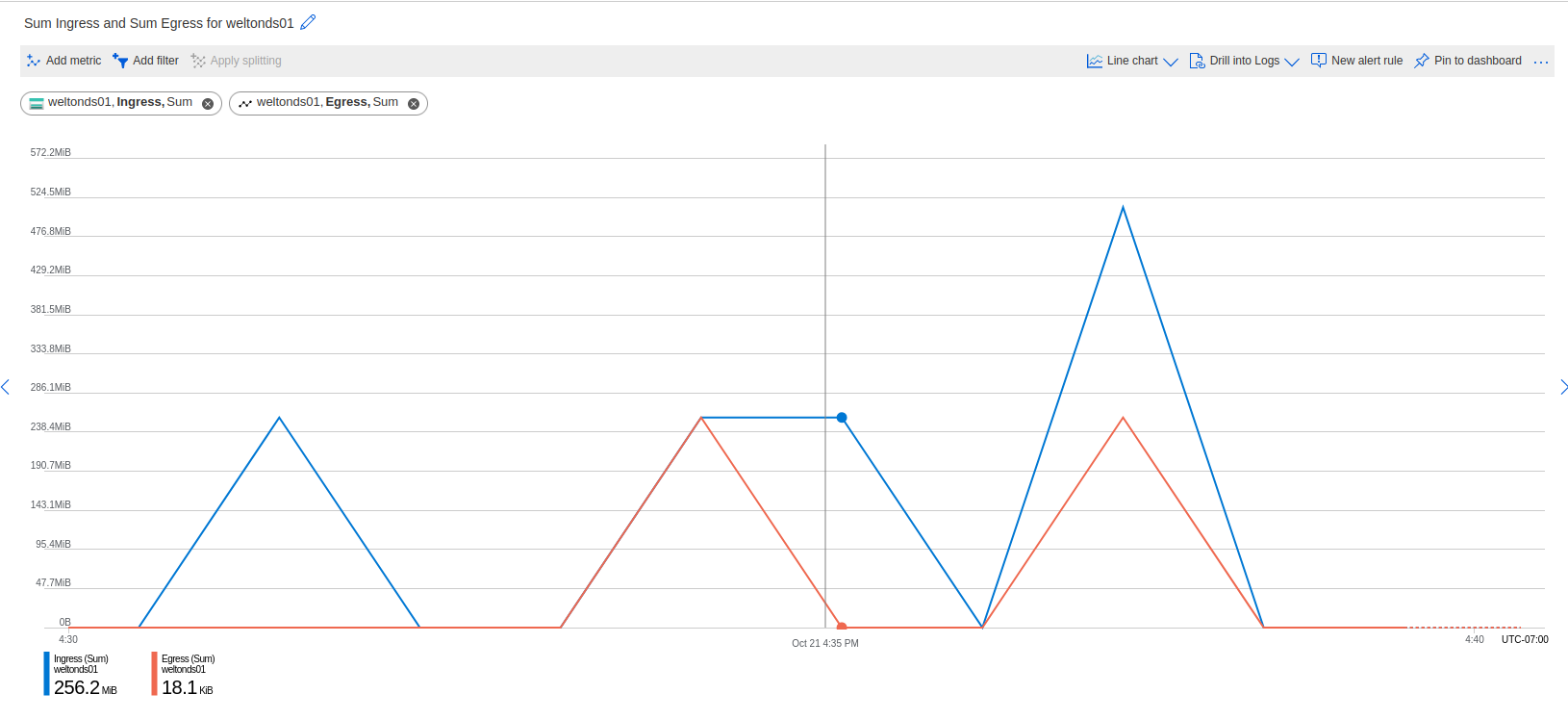

To test the usage, I used a Python script to generate an H5 file that is exactly 256MB in size. The plots above, from the Azure portal, show usage during the experiments:

- The first peak is the initial creation of the H5 file. Ingress traffic is 256MB and there is no egress, as expected.

- The second peak is from running the same script again without deleting the file created by the first run. It shows 256MB of egress traffic and a total ingress of 512MB. The resulting file is still 256MB.

- The third peak is from running the script a third time, again without deleting the file. It shows the same usage as the second.

The used capacity seems to be calculated from ingress traffic, so we are being charged for 512MB even though we only use 256MB. Note that if I delete the original file and then re-run the script, the deletion produces no egress traffic and re-creating the file produces only 256MB of ingress. I ran similar experiments with CSV files and Python pickles and saw no such unusual behavior in the usage calculation. All tests were carried out on an Azure VM in the same region as the blob storage, with the storage mounted using blobfuse.
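The delete-then-recreate sequence described above can be scripted so the old file is always removed before the new one is written. This is a minimal sketch, assuming the writer is a callable that takes the target path; `recreate_file` and `write` are hypothetical names, not part of PyTables or blobfuse, and whether this avoids the extra charge has only been observed in the manual experiment above.

```python
import os
from typing import Callable


def recreate_file(path: str, write: Callable[[str], None]) -> bool:
    """Delete `path` if it exists, then call `write(path)` to create it anew.

    Returns True if an existing file was removed first.
    """
    existed = os.path.exists(path)
    if existed:
        # Remove the old file so the writer starts from a fresh file
        # instead of opening and overwriting the existing one in place.
        os.remove(path)
    write(path)
    return existed
```

For example, the H5 script could be wrapped in a function `write_h5(path)` (hypothetical) and run as `recreate_file('test.h5', write_h5)`.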

I would like to understand how Azure counts traffic when existing files are modified. For those of you who use H5 on Azure Blob Storage, is there a way to avoid the additional charge?

Python script I used to generate the H5 file:

```python
import numpy as np
import tables

db = 'test.h5'

class TestTable(tables.IsDescription):
    col0 = tables.Float64Col(pos=0)
    col1 = tables.Float64Col(pos=1)
    col2 = tables.Float64Col(pos=2)
    col3 = tables.Float64Col(pos=3)

# 1024*1024 rows x 4 float64 columns per table
data = np.zeros((1024 * 1024, 4))

tablenames = ['Test' + str(i) for i in range(8)]

# mode="w" creates the file, truncating it if it already exists
mdb = tables.open_file(db, mode="w")

# Create tables
for name in tablenames:
    # create_table returns the new Table node, so eval() is not needed
    table = mdb.create_table('/', name, TestTable)
    table.append(list(map(tuple, data)))
    table.flush()

mdb.close()
```
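As a sanity check on the 256MB figure, the raw payload size follows directly from the table layout in the script. This sketch counts only the float64 data and excludes HDF5/PyTables metadata, so the file on disk will be slightly larger; the constant names are illustrative.

```python
# Raw payload written by the script (data only, no HDF5 metadata).
ROWS_PER_TABLE = 1024 * 1024   # np.zeros((1024*1024, 4))
COLS = 4                       # col0..col3
BYTES_PER_VALUE = 8            # tables.Float64Col is 8 bytes
NUM_TABLES = 8                 # 'Test0'..'Test7'

payload_bytes = ROWS_PER_TABLE * COLS * BYTES_PER_VALUE * NUM_TABLES
payload_mib = payload_bytes / (1024 * 1024)

print(payload_bytes)  # 268435456
print(payload_mib)    # 256.0
```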