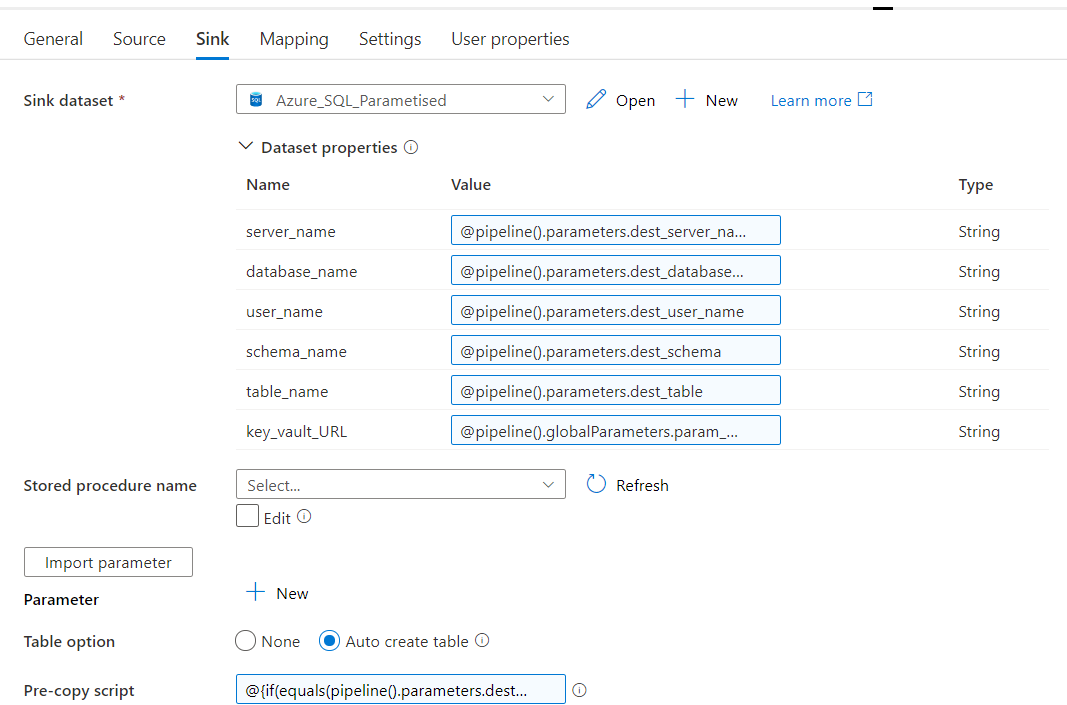

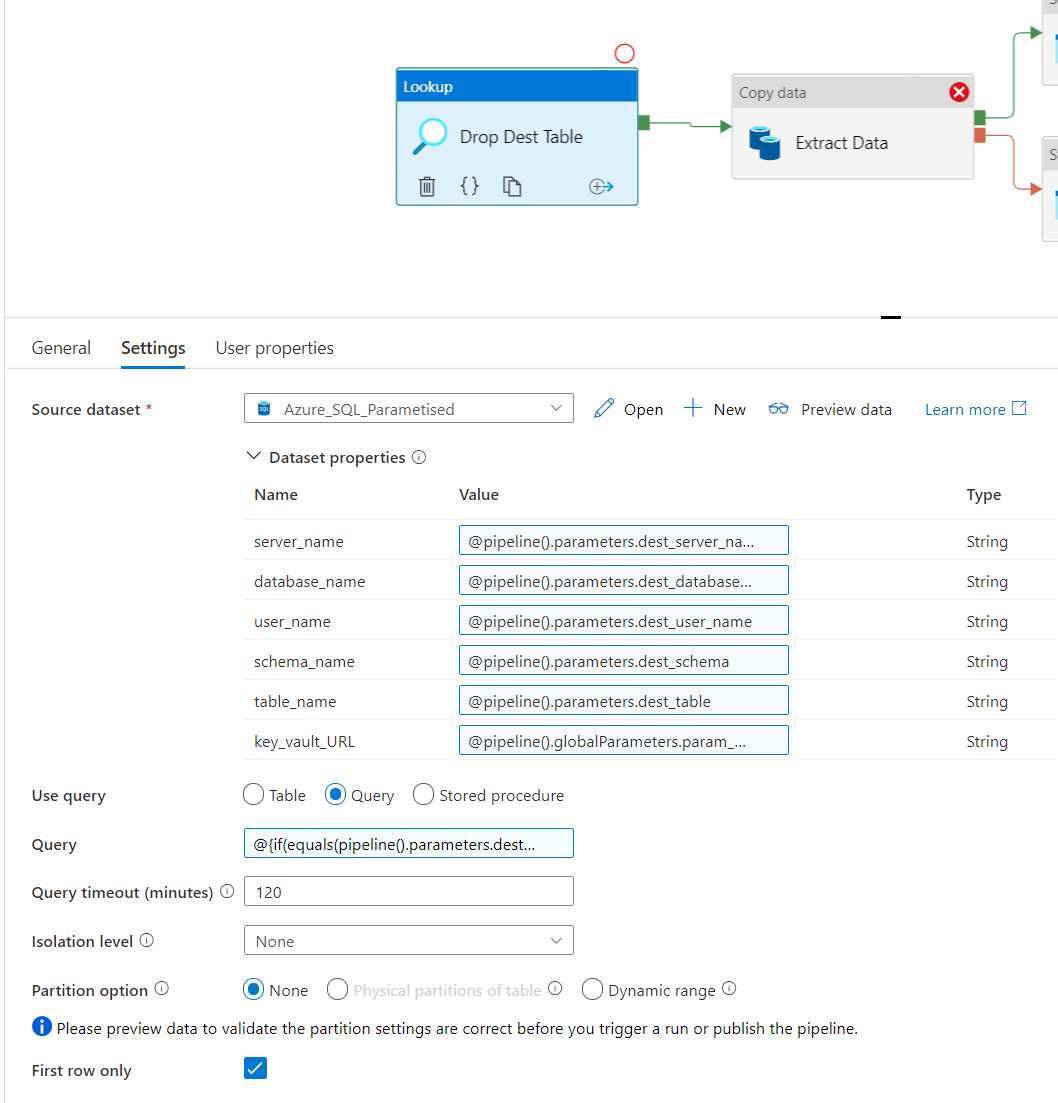

OK, I actually figured out a workaround: drop the destination table before running the Copy Data activity (which it should do anyway, but obviously doesn't). I created a Lookup activity, pointed its connection at the destination server using dynamic values, and had it run a dynamic query. The Dynamic Query value looks like:

@{concat('DROP TABLE IF EXISTS [',pipeline().parameters.dest_schema,'].[',pipeline().parameters.dest_table,']; SELECT 1 as Col')}
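For anyone wondering what that expression actually sends to the server, here is a rough Python sketch of the same string building. The function names and the sample values `dbo` / `Customers` are made up for illustration and are not part of the pipeline. As far as I can tell, the trailing `SELECT 1 as Col` is only there because the Lookup activity expects the query to return a result set. The sketch also doubles any `]` inside a name, which is how SQL Server escapes bracket-quoted identifiers; the expression above does not do this, so it assumes the parameter values are plain names.

```python
def quote_identifier(name: str) -> str:
    """Wrap a SQL Server identifier in brackets, doubling any ']' inside it."""
    return "[" + name.replace("]", "]]") + "]"


def build_drop_query(dest_schema: str, dest_table: str) -> str:
    """Mirror the concat() expression: drop the table, then return one row."""
    target = f"{quote_identifier(dest_schema)}.{quote_identifier(dest_table)}"
    # The trailing SELECT gives the Lookup activity something to return.
    return f"DROP TABLE IF EXISTS {target}; SELECT 1 as Col"


# Hypothetical parameter values, standing in for dest_schema and dest_table.
print(build_drop_query("dbo", "Customers"))
```

If the schema or table names ever come from somewhere less trusted than your own pipeline parameters, some escaping along these lines would be worth adding on the Data Factory side too.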

I'd much rather not need this extra step. Can anyone on the Data Factory team confirm whether this is a bug and whether it will be fixed?