Hello @Raj D,

Welcome to the Microsoft Q&A platform.

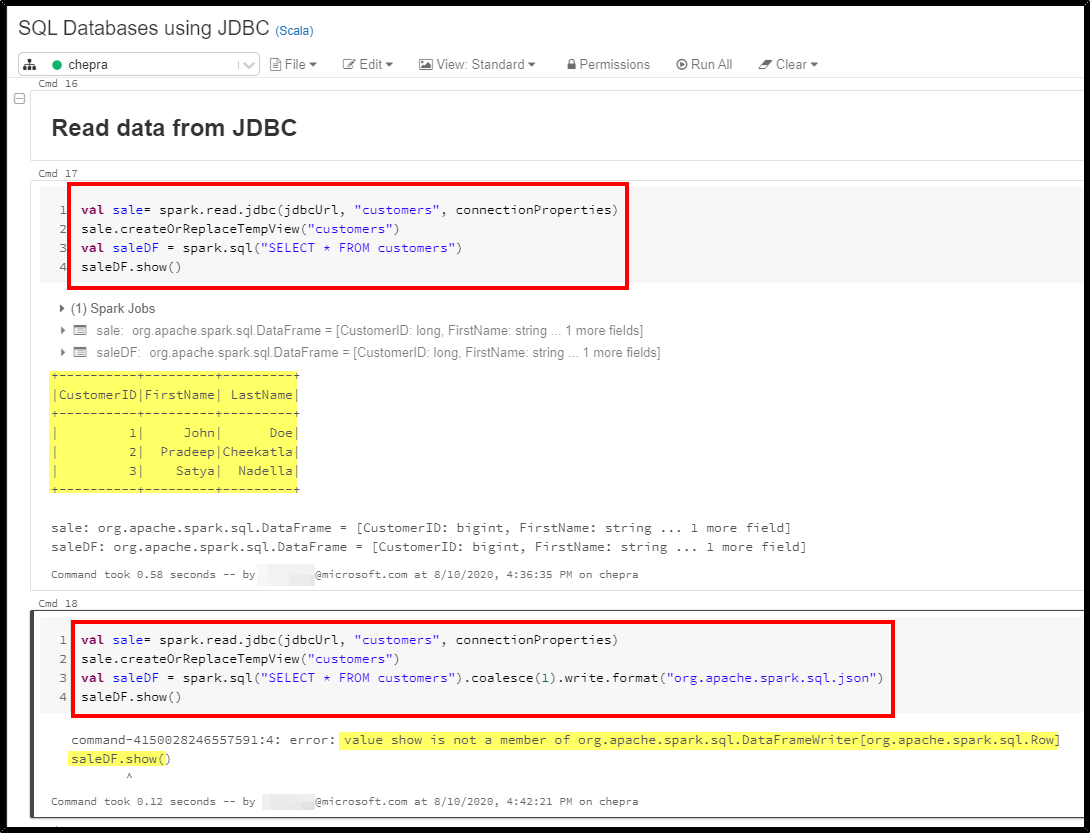

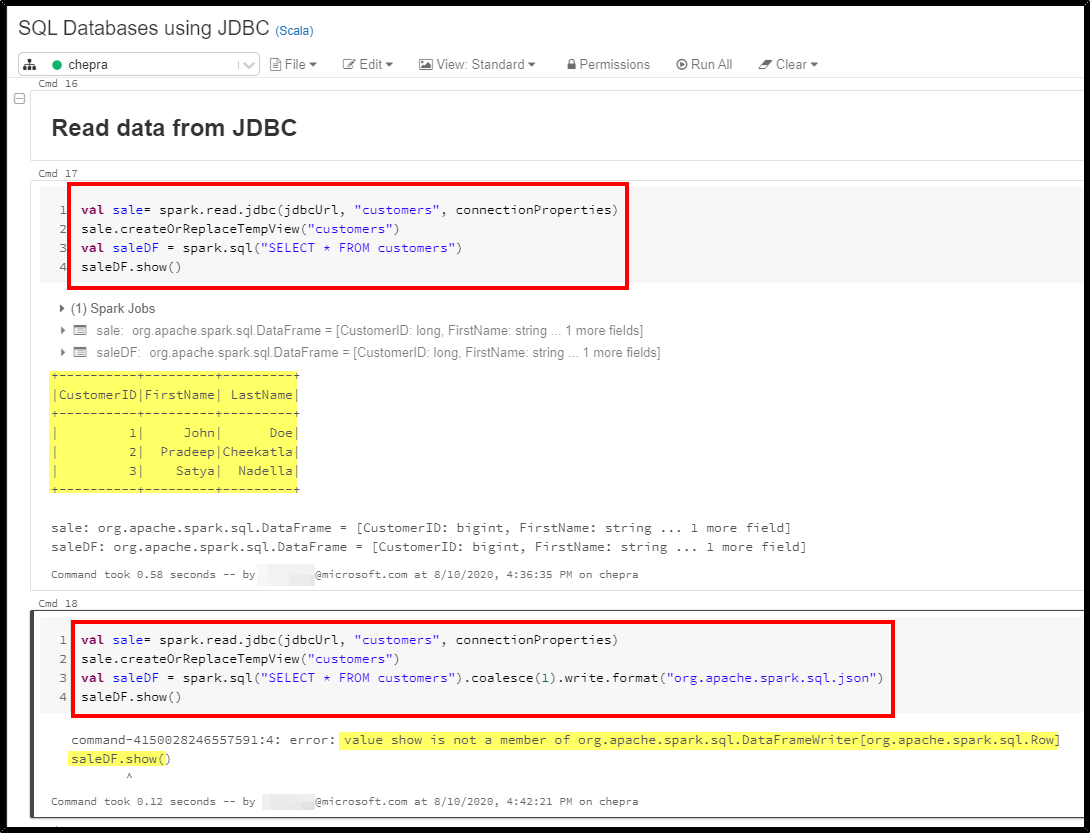

You are getting the error “value show is not a member of org.apache.spark.sql.DataFrameWriter[org.apache.spark.sql.Row] saleDF.show()” because `saleDF` holds the `DataFrameWriter` returned by `.write`, not a DataFrame. The writer is still waiting for the path of the JSON file to write the data to.

You don't create a JSON string before you save; Spark converts the data to JSON at the point of saving. Until you actually write it to storage, the DataFrame is only a logical construct (a query plan) with no physical JSON representation you can access.

The `write` property returns a `DataFrameWriter`, not a `DataFrame`, and a writer has no `show` method; only an actual DataFrame does.
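To make the type difference concrete, here is a minimal sketch for a Scala notebook cell. It assumes the `spark` session that Databricks notebooks predefine, the `customers` table used later in this answer, and Spark 3.x type names (the ones in your error message). The `saleWriter` name is illustrative only.

```scala
import org.apache.spark.sql.{DataFrame, DataFrameWriter, Row}

// `spark` is the SparkSession predefined in Databricks notebooks.
// `customers` is the table used in the examples in this answer.
val saleDF: DataFrame = spark.sql("SELECT * FROM customers")

// `.write` returns a DataFrameWriter[Row]: a builder for a write operation,
// not a new DataFrame.
val saleWriter: DataFrameWriter[Row] = saleDF.write

saleDF.show()          // compiles: show() is defined on DataFrame (Dataset[Row])
// saleWriter.show()   // would not compile: "value show is not a member of
//                     // org.apache.spark.sql.DataFrameWriter[org.apache.spark.sql.Row]"
```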

Also, the terminal methods of a `DataFrameWriter` chain (e.g. `json` or `save`) return `Unit`, so there is nothing to call `show` on. Their purpose is the side effect of performing the actual write to storage, not to return a new DataFrame.
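Putting both points together, a sketch of the corrected pattern keeps a reference to the DataFrame, calls `show()` on that, and treats the write as a separate statement. It assumes the predefined `spark` session, the `customers` table, and the DBFS path used elsewhere in this answer; `writeResult` exists only to make the `Unit` return type visible.

```scala
// Keep the DataFrame itself in a variable; this is what has show().
val saleDF = spark.sql("SELECT * FROM customers")
saleDF.show()

// The terminal call json(path) returns Unit: its only job is the write.
// Annotating the type makes that explicit; normally you would not assign it.
val writeResult: Unit =
  saleDF.coalesce(1).write.mode("overwrite").json("dbfs:/myfolder/myfile.json")
```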

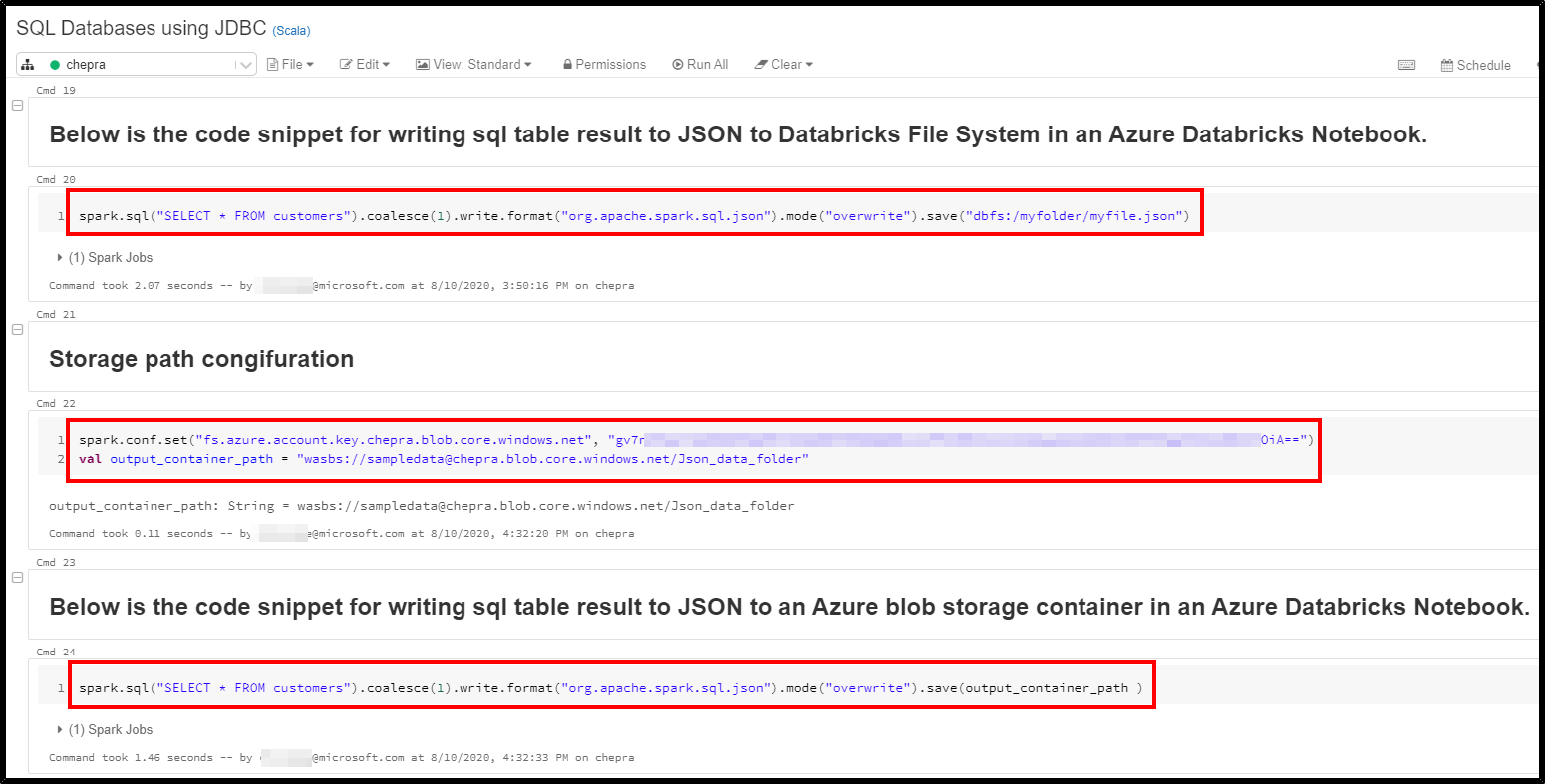

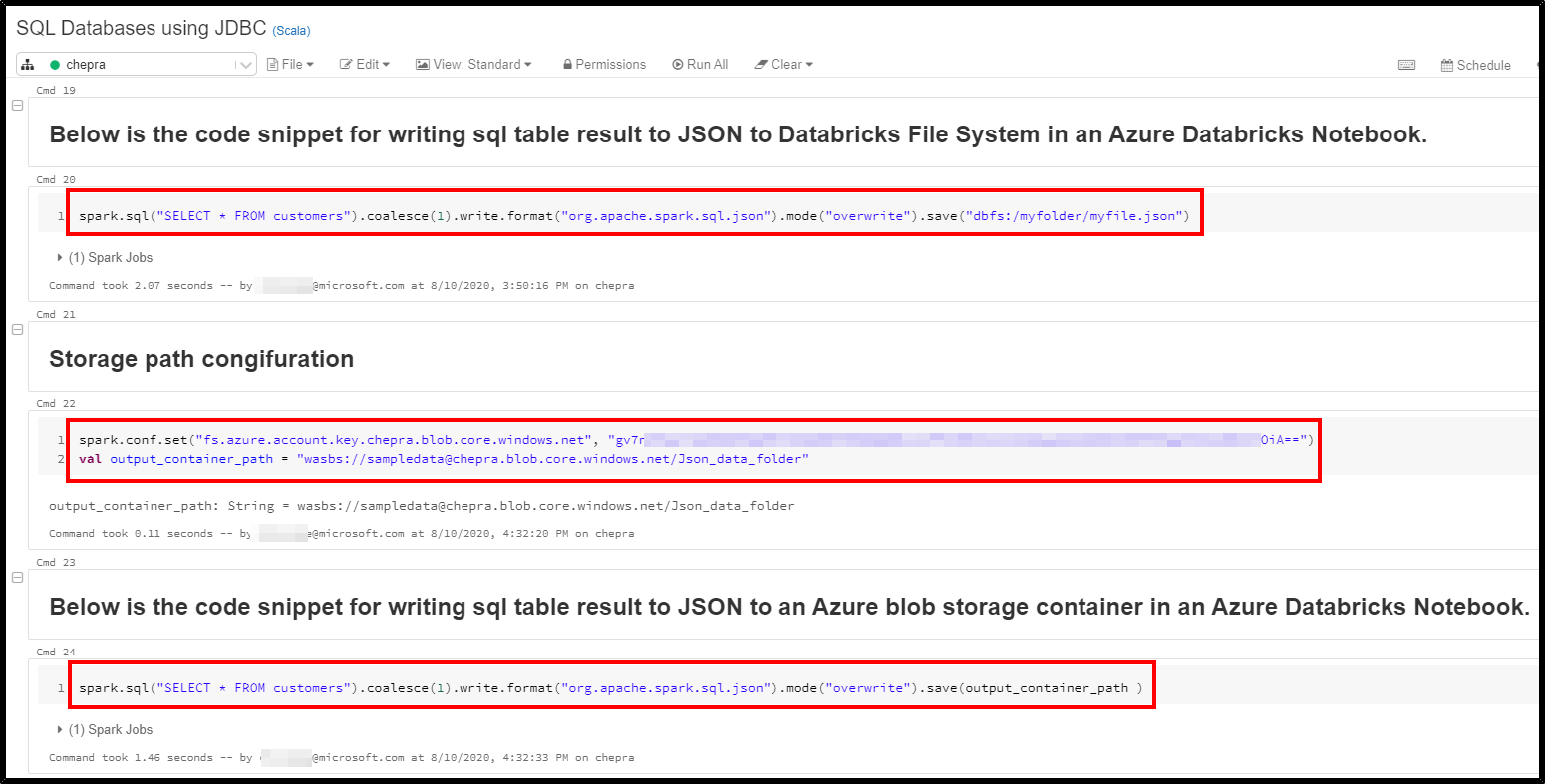

Below is a code snippet that writes the result of a SQL query to JSON on the Databricks File System (DBFS) from an Azure Databricks notebook.

```scala
spark.sql("SELECT * FROM customers").coalesce(1).write.format("org.apache.spark.sql.json").mode("overwrite").save("dbfs:/myfolder/myfile.json")
```
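Note that `save` creates a directory named `myfile.json` that contains part files, not a single JSON file. A minimal way to see what was written, assuming the `dbutils` utilities that Databricks notebooks predefine, is to list that path. The exact marker files (for example `_SUCCESS`) may vary by Databricks runtime.

```scala
// List the output directory produced by save("dbfs:/myfolder/myfile.json").
val outputFiles = dbutils.fs.ls("dbfs:/myfolder/myfile.json")
outputFiles.foreach(f => println(s"${f.name}\t${f.size} bytes"))

// The JSON data itself lives in the part-* files; coalesce(1) reduces the
// data to a single partition, so normally one such file is written.
val partFiles = outputFiles.filter(_.name.startsWith("part-"))
println(s"JSON part files written: ${partFiles.size}")
```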

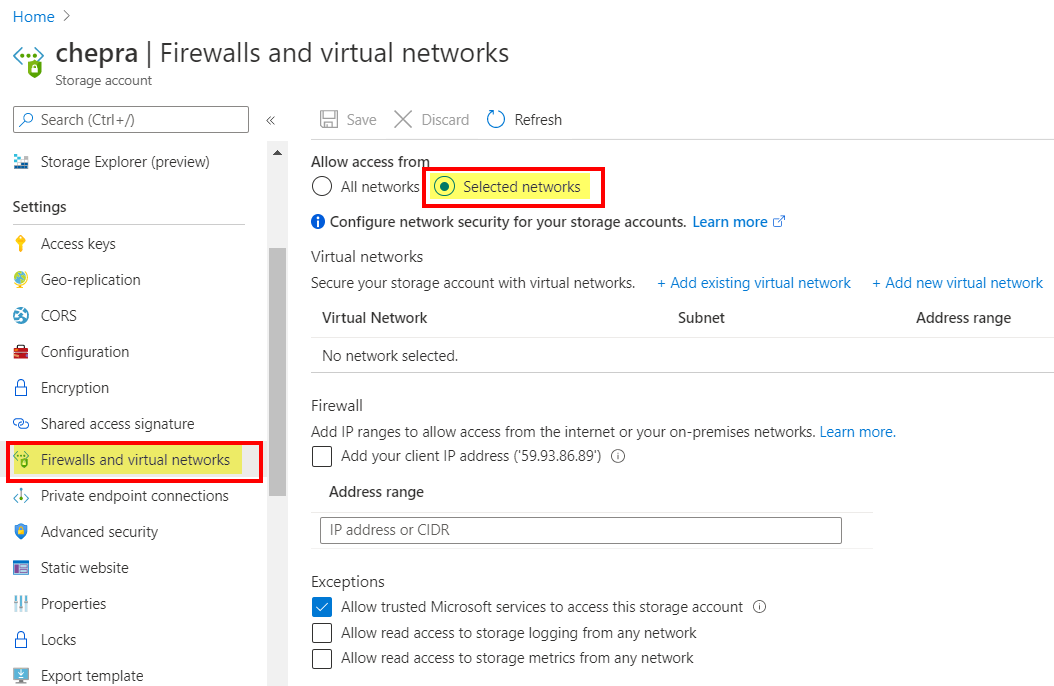

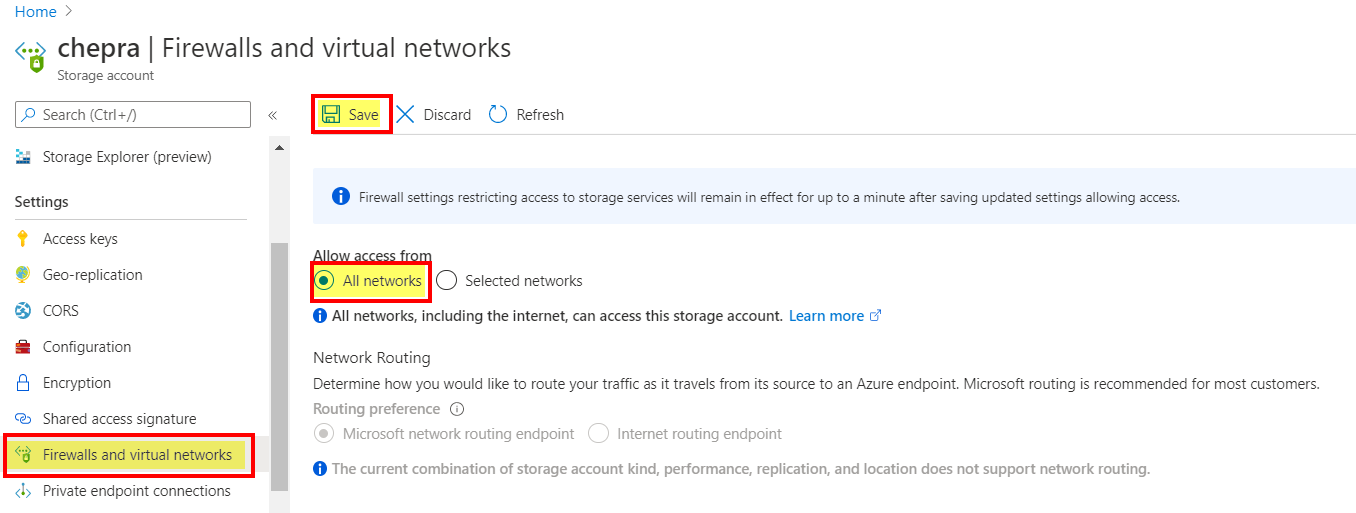

For Blob Storage, first configure the storage account key and the output path:

```scala
spark.conf.set("fs.azure.account.key.chepra.blob.core.windows.net", "gv7nVxxxxxxxxxxxxxxxxxxxxxOiA==")

val output_container_path = "wasbs://******@chepra.blob.core.windows.net/Json_data_folder"
```
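If you prefer not to paste the account key into the notebook, Databricks can read it from a secret scope instead. This is a sketch under assumptions: the scope name `my-scope` and the key name `chepra-account-key` are hypothetical placeholders for a scope you have created yourself.

```scala
// Hypothetical scope and key names: replace them with your own secret scope.
val storageAccountKey =
  dbutils.secrets.get(scope = "my-scope", key = "chepra-account-key")

// Same configuration key as above, but without a literal key in the notebook.
spark.conf.set("fs.azure.account.key.chepra.blob.core.windows.net", storageAccountKey)
```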

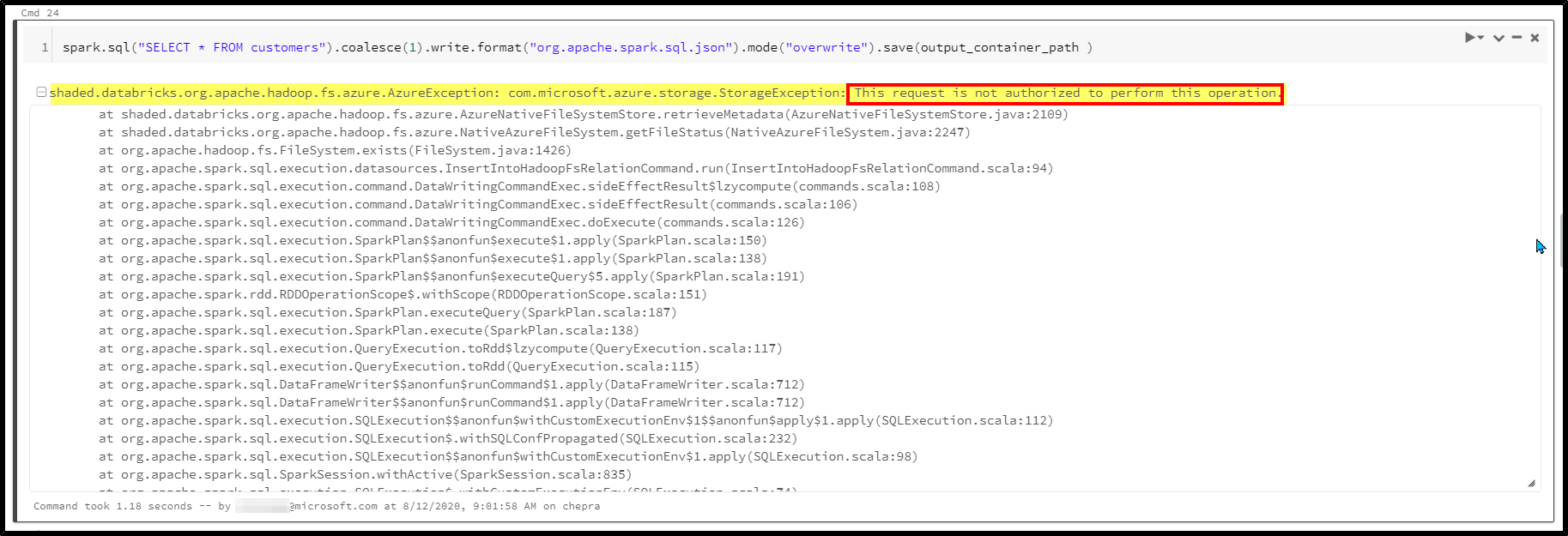

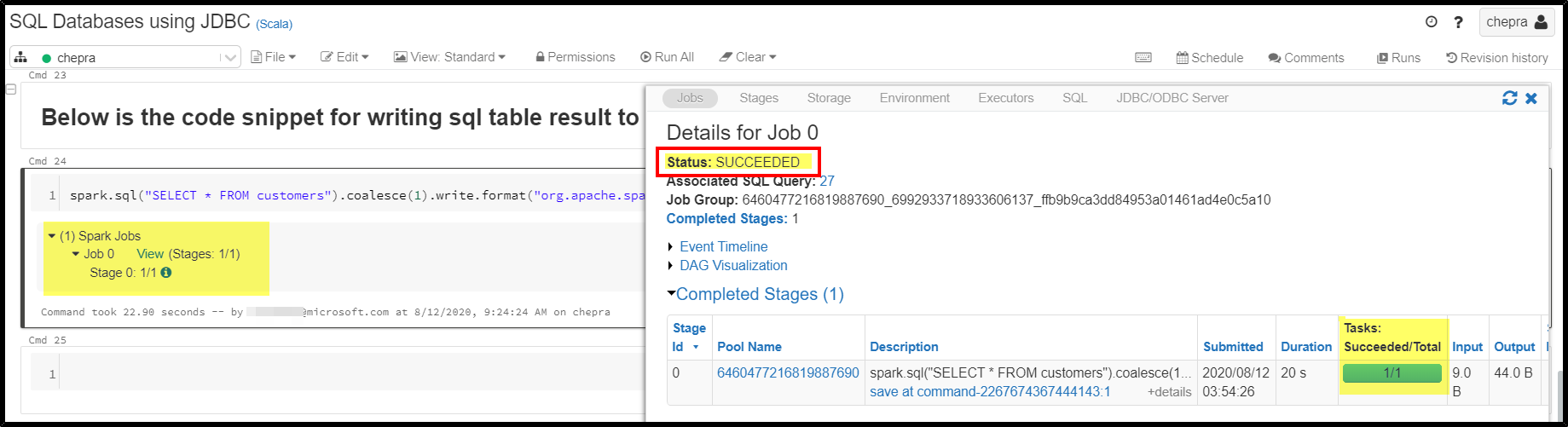

Below is a code snippet that writes the result of a SQL query to JSON in an Azure Blob Storage container from an Azure Databricks notebook.

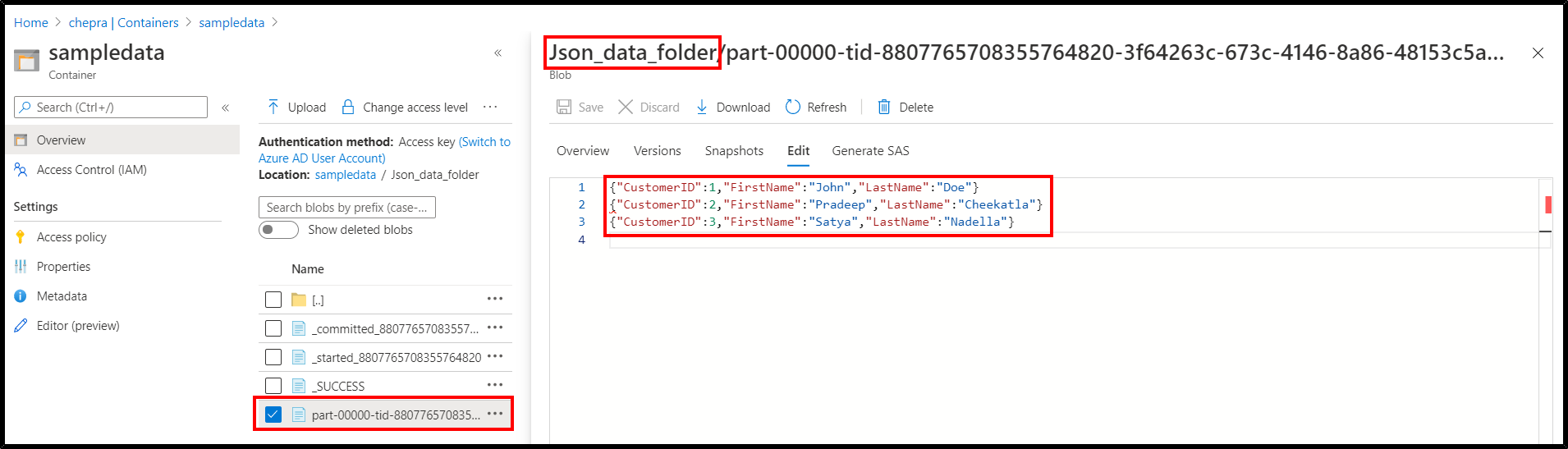

```scala
spark.sql("SELECT * FROM customers").coalesce(1).write.format("org.apache.spark.sql.json").mode("overwrite").save(output_container_path)
```
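Because the write itself returns nothing, the way to look at the result with `show()` is to read the JSON back into a new DataFrame. This sketch assumes the `spark.conf.set` call and the `output_container_path` value from the configuration step have already run in the same notebook.

```scala
// Read the JSON written to the Blob Storage container into a new DataFrame.
// Spark infers the schema from the JSON records.
val jsonFromBlob = spark.read.json(output_container_path)

jsonFromBlob.printSchema()
jsonFromBlob.show()
```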

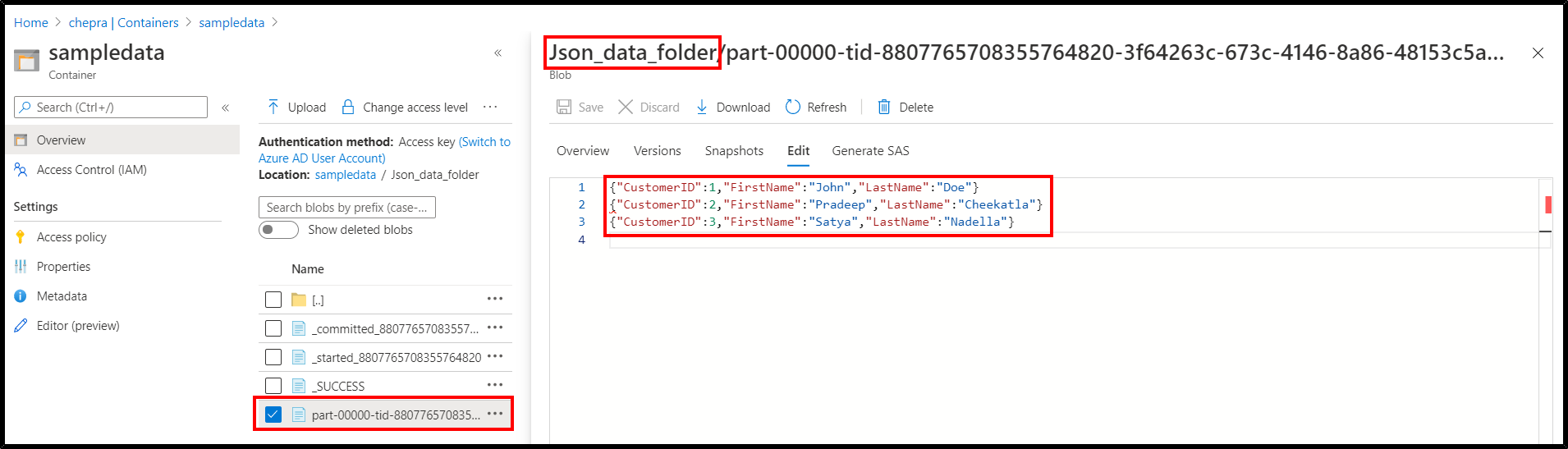

The table results were successfully written to JSON in Azure Databricks.

Hope this helps. Do let us know if you have any further queries.
