Hi, I think I am having a problem with CoreDNS on Azure (AKS). I am following the Kubernetes guide dns-debugging-resolution.

Following this doc, I deployed the sample pod for testing: kubectl apply -f https://k8s.io/examples/admin/dns/dnsutils.yaml

I then ran kubectl exec -i -t dnsutils -- nslookup kubernetes.default, but I get the following error:

kubectl exec -i -t dnsutils -- nslookup kubernetes.default

;; connection timed out; no servers could be reached

command terminated with exit code 1
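To make this check easy to repeat on more than one cluster, here is a minimal sketch of a shell helper that classifies the nslookup output. The function name classify_nslookup is hypothetical (my own), and it only looks for the two strings that appear in the outputs in this post, so treat it as a rough aid rather than a complete parser.

```shell
#!/bin/sh
# classify_nslookup: hypothetical helper, reads nslookup output on stdin.
# Prints "timeout" if no DNS server answered, "resolved" if an answer
# section ("Name:") is present, otherwise "unknown".
classify_nslookup() {
    output=$(cat)
    case "$output" in
        *"no servers could be reached"*) echo "timeout" ;;
        *"Name:"*) echo "resolved" ;;
        *) echo "unknown" ;;
    esac
}

# Usage (needs cluster access, so it is left as a comment):
#   kubectl exec dnsutils -- nslookup kubernetes.default 2>&1 | classify_nslookup
```

On the failing cluster this should print timeout for the output shown above.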

So I suspect a problem with CoreDNS. I checked whether the CoreDNS pods and service are running, and the commands below show that they are:

kubectl get pods --namespace=kube-system -l k8s-app=kube-dns

NAME READY STATUS RESTARTS AGE

coredns-84d976c568-jhbvw 1/1 Running 0 47h

coredns-84d976c568-wdkgg 1/1 Running 0 47h

kubectl get svc --namespace=kube-system | grep dns

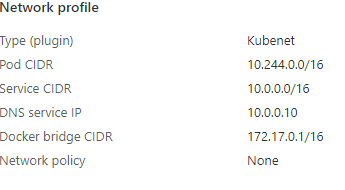

kube-dns ClusterIP 10.0.0.10 <none> 53/UDP,53/TCP 135d
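The same debugging guide also suggests checking /etc/resolv.conf inside the test pod. I have not included that output here, but a small sketch like the one below could confirm whether the pod points at the kube-dns ClusterIP shown above. The helper name has_nameserver is hypothetical, and 10.0.0.10 is simply the ClusterIP from the service listing.

```shell
#!/bin/sh
# has_nameserver: hypothetical helper. Reads resolv.conf content on stdin and
# exits 0 if the given IP appears on a "nameserver" line, 1 otherwise.
has_nameserver() {
    awk -v ip="$1" '$1 == "nameserver" && $2 == ip { found = 1 } END { exit !found }'
}

# Usage (needs cluster access, so it is left as a comment):
#   kubectl exec dnsutils -- cat /etc/resolv.conf | has_nameserver 10.0.0.10 \
#       && echo "pod uses kube-dns" || echo "pod uses a different nameserver"
```

If the pod's nameserver did not match the service IP, that would point at the pod's DNS configuration rather than at CoreDNS itself.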

Checking the CoreDNS pod logs further, I see the warnings below, which look suspicious and unexpected to me and make me more inclined to think that CoreDNS has an issue.

kubectl logs --namespace=kube-system -l k8s-app=kube-dns

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server

[WARNING] No files matching import glob pattern: custom/*.override

[WARNING] No files matching import glob pattern: custom/*.server
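Since the log is just the same two warnings repeated, a sketch like the following could collapse it into one line per distinct message with a count. The function name count_unique_lines is hypothetical, and it assumes the usual sort and uniq tools are available where kubectl runs.

```shell
#!/bin/sh
# count_unique_lines: hypothetical helper. Reads log lines on stdin, drops
# blank lines, and prints each distinct line once, prefixed by how many times
# it occurred, most frequent first.
count_unique_lines() {
    grep -v '^[[:space:]]*$' | sort | uniq -c | sort -rn
}

# Usage (needs cluster access, so it is left as a comment):
#   kubectl logs --namespace=kube-system -l k8s-app=kube-dns | count_unique_lines
```

For the log above this would reduce the output to two lines, one for custom/*.override and one for custom/*.server.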

Because of this DNS issue, our Istio pods fail DNS resolution and get timeout errors.

2021-11-11T06:02:17.996723Z error citadelclient Failed to create certificate: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp: lookup istiod.istio-system.svc on 10.X.X.X:53: read udp 10.X.X.X:46002->10.X.X.X:53: i/o timeout"

2021-11-11T06:02:17.996737Z error cache resource:default request:b7411448-02b9-48bb-aab8-a36966e829fb CSR retrial timed out: rpc error: code = Unavailable desc= connection error: desc = "transport: Error while dialing dial tcp: lookup istiod.istio-system.svc on 10.X.X.X:53: read udp 10.X.X.X:46002->10.X.X.X:53: i/o timeout"

2021-11-11T06:02:17.996751Z error cache resource:default failed to generate secret for proxy: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp: lookup istiod.istio-system.svc on 10.X.X.X:53: read udp 10.X.X.X:46002->10.X.X.X:53: i/o timeout"

2021-11-11T06:02:17.996759Z error sds resource:default Close connection. Failed to get secret for proxy "sidecar~10.X.X.X~sleep-78c656c8ff-bhx5k.foo~foo.svc.cluster.local" from secret cache: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp: lookup istiod.istio-system.svc on 10.X.X.X:53: read udp 10.X.X.X:46002->10.X.X.X:53: i/o timeout"

2021-11-11T06:02:17.996842Z info sds resource:default connection is terminated: rpc error: code = Canceled desc = context canceled
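To see at a glance which names fail and which DNS server they were sent to, the error lines above can be reduced to their "lookup NAME on SERVER:PORT" part. This is only a sketch: the function name failed_lookups is hypothetical, and it relies on grep -o, which GNU and BSD grep support but which is not required by POSIX.

```shell
#!/bin/sh
# failed_lookups: hypothetical helper. Reads proxy log lines on stdin,
# extracts every "lookup <name> on <server>:<port>" fragment, and prints
# each distinct fragment with the number of times it occurred.
failed_lookups() {
    grep -oE 'lookup [^ ]+ on [^ ]+:[0-9]+' | sort | uniq -c
}

# Usage (pod and container names are placeholders, left as a comment):
#   kubectl logs <pod> -c istio-proxy | failed_lookups
```

In the excerpt above every failure is a lookup of istiod.istio-system.svc against the same server on port 53.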

We have another AKS cluster where we followed the same steps, and there the command below returned the expected output:

kubectl exec -i -t dnsutils -- nslookup kubernetes.default

Server: 10.0.0.10

Address: 10.0.0.10#53

Name: kubernetes.default.svc.cluster.local

Address: 10.0.0.1

Can anyone please help me to resolve this issue?

Thanks in advance

@SRIJIT-BOSE-MSFT can you please help me to resolve this issue?