I am getting an error when I try to parse a JSON file from Blob Storage using a dataset in Azure Data Factory (ADF).

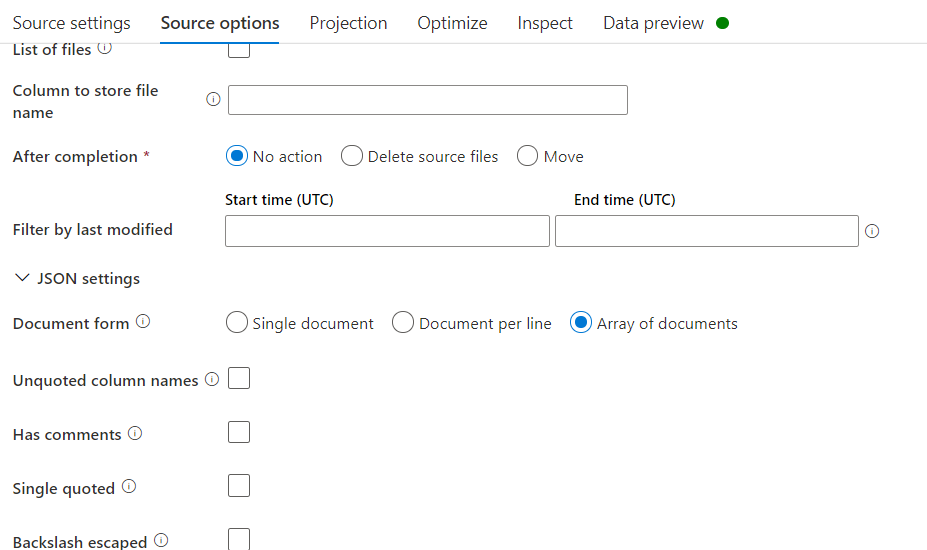

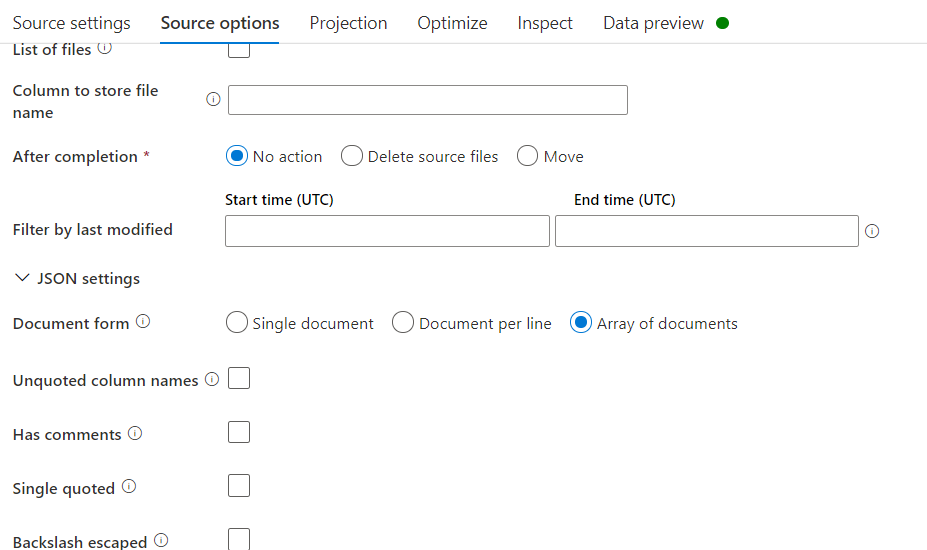

The JSON file contains a list (array) of objects, and I have selected the matching document form in the data flow source settings. I have even tried all three available options (Single document, Document per line, Array of documents), but I still can't figure out what is causing the issue.
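One way to confirm which of the three document forms the file actually matches is to check a local copy of it. The following is a minimal Python sketch; the function name `matching_document_forms` is hypothetical, and it only approximates the option names shown in the data flow source settings rather than reproducing ADF's own parser.

```python
import json
from typing import List


def matching_document_forms(text: str) -> List[str]:
    """Return the ADF document forms that `text` appears to satisfy.

    Heuristic only: named after the three options in the data flow source
    settings, but it does not reproduce ADF's own parser.
    """
    forms = []

    # Whole-file parse: one object -> "Single document"; array of objects -> "Array of documents".
    try:
        value = json.loads(text)
    except json.JSONDecodeError:
        pass
    else:
        if isinstance(value, dict):
            forms.append("Single document")
        elif isinstance(value, list) and value and all(isinstance(v, dict) for v in value):
            forms.append("Array of documents")

    # Line-by-line parse: every non-blank line must be a complete JSON object.
    lines = [line for line in text.splitlines() if line.strip()]
    try:
        if lines and all(isinstance(json.loads(line), dict) for line in lines):
            forms.append("Document per line")
    except json.JSONDecodeError:
        pass

    return forms


print(matching_document_forms('[{"id": 1}, {"id": 2}]'))  # ['Array of documents']
print(matching_document_forms('{"id": 1}\n{"id": 2}'))    # ['Document per line']
print(matching_document_forms('{"id": 1}{"id": 2}'))      # []
```

For a real file, pass it the decoded text, e.g. `open(path, encoding="utf-8").read()`, where `path` is a placeholder for a downloaded copy of the blob. An empty result, as with the concatenated objects in the last call, means the file fits none of the three forms.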

I also tried changing the encoding, but that only changed the data that was read.
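Because changing the encoding altered the data, it may be worth inspecting the raw bytes for a byte-order mark (BOM) or for bytes that are not valid UTF-8; neither is visible in most JSON viewers. This is a hedged Python sketch; `detect_bom` and `first_invalid_utf8_offset` are hypothetical helper names, and the sketch does not tell you which encoding ADF expects.

```python
import codecs
from typing import Optional

# UTF-32 LE is checked before UTF-16 LE because its BOM begins with the same two bytes.
BOMS = [
    (codecs.BOM_UTF8, "UTF-8 with BOM"),
    (codecs.BOM_UTF32_LE, "UTF-32 LE"),
    (codecs.BOM_UTF32_BE, "UTF-32 BE"),
    (codecs.BOM_UTF16_LE, "UTF-16 LE"),
    (codecs.BOM_UTF16_BE, "UTF-16 BE"),
]


def detect_bom(raw: bytes) -> Optional[str]:
    """Return the name of the byte-order mark at the start of `raw`, if any."""
    for bom, name in BOMS:
        if raw.startswith(bom):
            return name
    return None


def first_invalid_utf8_offset(raw: bytes) -> Optional[int]:
    """Return the byte offset of the first invalid UTF-8 sequence, or None if it all decodes."""
    try:
        raw.decode("utf-8")
    except UnicodeDecodeError as exc:
        return exc.start
    return None


sample = codecs.BOM_UTF8 + b'[{"name": "caf\xc3\xa9"}]'
print(detect_bom(sample))                 # UTF-8 with BOM
print(first_invalid_utf8_offset(sample))  # None
```

Feed it the file's bytes, e.g. `open(path, "rb").read()`, with `path` again a placeholder for a local copy of the blob.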

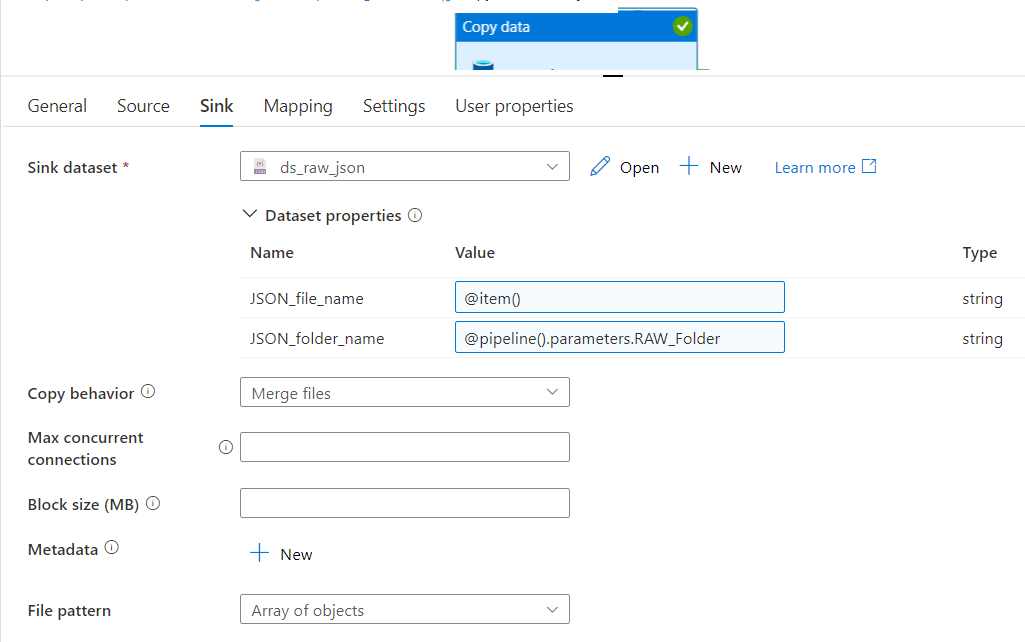

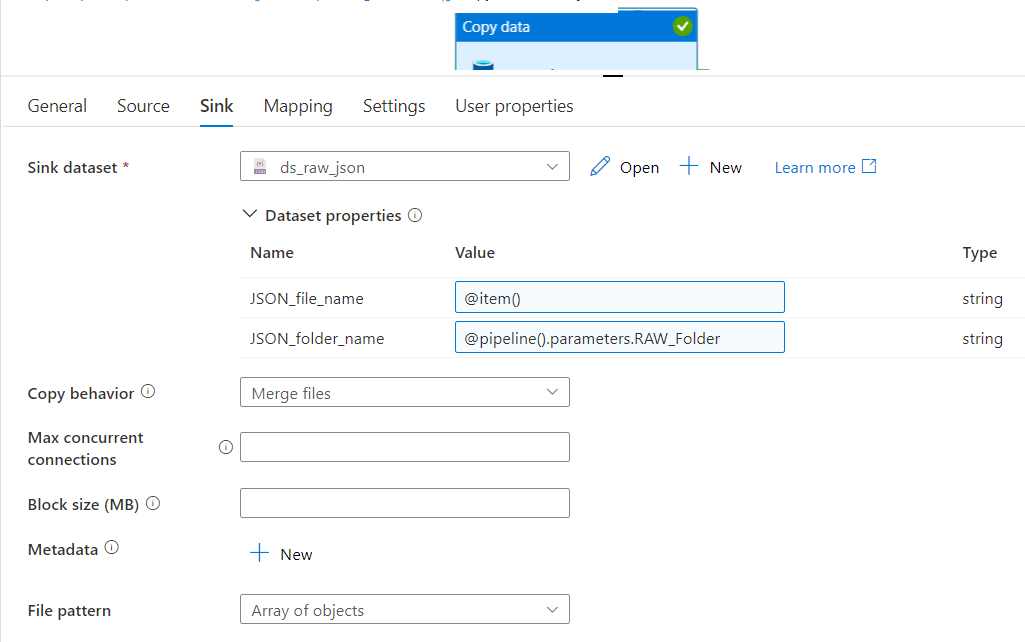

The JSON file is created by a copy activity that merges several JSON files, each containing a single record, as shown below.

I have checked the merged output file and it looks correct. I also validated its structure with an online JSON viewer, and everything looks fine.

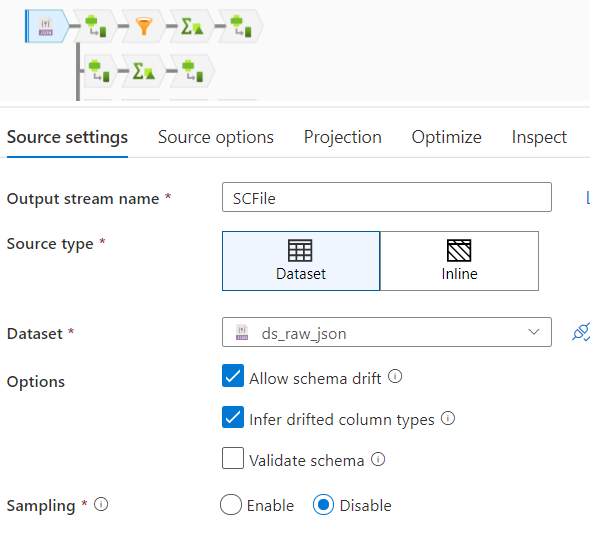
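The shape of the merged file depends on how the copy activity writes JSON. As far as I know, the JSON sink's file pattern can be *Set of objects* (one object per line) or *Array of objects* (one top-level array); treat those names as an assumption to verify against the sink settings in the pipeline. The Python sketch below only emulates those two shapes locally, using hypothetical helper names and sample records, so they can be compared with the merged file.

```python
import json
from typing import Dict, List

# Hypothetical one-record source files, already parsed.
records: List[Dict] = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]


def merge_as_set_of_objects(items: List[Dict]) -> str:
    """One compact object per line (JSON Lines), like a 'Set of objects' pattern."""
    return "".join(json.dumps(item) + "\n" for item in items)


def merge_as_array_of_objects(items: List[Dict]) -> str:
    """A single top-level array, like an 'Array of objects' pattern."""
    return json.dumps(items, indent=2)


print(merge_as_set_of_objects(records))
print(merge_as_array_of_objects(records))
```

Only the first output can be read line by line. The second is valid JSON as a whole, but none of its individual lines is a complete object, so the data flow source would presumably need Document per line for the first shape and Array of documents for the second.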

However, when I read this file in a data flow source, the job fails with the following error message:

{"StatusCode":"DF-JSON-WrongDocumentForm","Message":"Job failed due to reason: Malformed records are detected in schema inference. Parse Mode: FAILFAST","Details":"org.apache.spark.SparkException: Job aborted due to stage failure: Task 0 in stage 0.0 failed 1 times, most recent failure: Lost task 0.0 in stage 0.0 (TID 0, 10.90.0.5, executor 0): org.apache.spark.SparkException: Malformed records are detected in schema inference. Parse Mode: FAILFAST.\n\tat org.apache.spark.sql.catalyst.json.JsonInferSchema$$anonfun$1$$anonfun$apply$1.apply(JsonInferSchema.scala:66)\n\tat org.apache.spark.sql.catalyst.json.JsonInferSchema$$anonfun$1$$anonfun$apply$1.apply(JsonInferSchema.scala:53)\n\tat scala.collection.Iterator$$anon$12.nextCur(Iterator.scala:435)\n\tat scala.collection.Iterator$$anon$12.hasNext(Iterator.scala:441)\n\tat scala.collection.Iterator$class.isEmpty(Iterator.scala:331)\n\tat scala.collection.AbstractIterator.isEmpty(Iterator.scala:1334)\n\tat scala.collection.TraversableOnce$class.reduceLeftOption(TraversableOnce.scala:203)\n\tat scala.collection.AbstractIterator.reduceLeftOption(Iterator.scala:1334)\n\tat scala.collection.TraversableOnce$class.reduceOption(TraversableOnce.scal"}
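The error says Spark found malformed records during schema inference, and FAILFAST mode aborts on the first one. By default, Spark's JSON reader treats each line as one record, so a pretty-printed (multi-line) file read that way produces malformed lines. The sketch below approximates that line-by-line check in Python to list which lines would fail; `find_malformed_lines` and `check_records` are hypothetical names, and this is not Spark's parser.

```python
import json
from typing import List, Tuple


def find_malformed_lines(text: str) -> List[Tuple[int, str]]:
    """List (line_number, reason) for each non-blank line that is not a JSON object."""
    problems = []
    for number, line in enumerate(text.splitlines(), start=1):
        if not line.strip():
            continue  # blank lines are skipped rather than flagged
        try:
            value = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((number, exc.msg))
            continue
        if not isinstance(value, dict):
            problems.append((number, f"expected an object, got {type(value).__name__}"))
    return problems


def check_records(text: str, fail_fast: bool = True) -> List[Tuple[int, str]]:
    """Mimic the spirit of FAILFAST: raise on the first malformed line instead of collecting them."""
    problems = find_malformed_lines(text)
    if fail_fast and problems:
        number, reason = problems[0]
        raise ValueError(f"Malformed record at line {number}: {reason}")
    return problems


pretty = '[\n  {"id": 1},\n  {"id": 2}\n]'
print(find_malformed_lines(pretty))
# [(1, 'Expecting value'), (2, 'Extra data'), (4, 'Expecting value')]
```

A pretty-printed array gets most of its lines flagged this way, while a file with one complete object per line should return an empty list.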