Hi @Dheeraj,

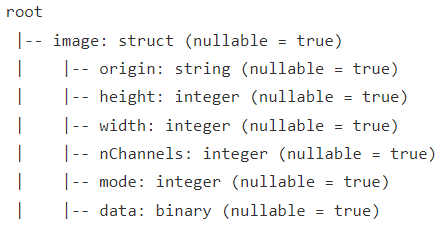

I'm not sure about the second error at the moment, but as for the first error, I believe the table the connector creates is a round-robin table with a clustered columnstore index. The problem is that the varbinary data type is not supported in a clustered columnstore index. The table would need to be a HEAP instead, but unfortunately, as far as I'm aware, the built-in connector doesn't give you any way to set options on the final table.
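To make the difference concrete, here is a minimal Scala sketch that only builds the T-SQL `CREATE TABLE` text for both layouts; it does not connect to anything. It assumes a dedicated SQL pool, and `SynapseDdl`, `createTable`, `dbo.MyTable`, and the column names are hypothetical placeholders, not taken from your code.

```scala
// Hypothetical helper: builds CREATE TABLE text for a Synapse dedicated SQL pool.
// Table and column names passed in are placeholders chosen by the caller.
object SynapseDdl {

  // A column definition as (name, SQL type), e.g. Column("Payload", "VARBINARY(MAX)").
  final case class Column(name: String, sqlType: String)

  /** Builds a CREATE TABLE statement.
    *
    * heap = false mirrors the connector's default layout
    * (ROUND_ROBIN + CLUSTERED COLUMNSTORE INDEX), which is where the varbinary error arises;
    * heap = true produces a ROUND_ROBIN HEAP table instead.
    */
  def createTable(table: String, columns: Seq[Column], heap: Boolean): String = {
    require(columns.nonEmpty, "a table needs at least one column")
    // One indented line per column, bracket-quoting the column name.
    val cols = columns.map(c => s"    [${c.name}] ${c.sqlType}").mkString(",\n")
    val index = if (heap) "HEAP" else "CLUSTERED COLUMNSTORE INDEX"
    s"CREATE TABLE $table\n(\n$cols\n)\nWITH (DISTRIBUTION = ROUND_ROBIN, $index);"
  }
}
```

For example, `SynapseDdl.createTable("dbo.MyTable", Seq(SynapseDdl.Column("Id", "INT NOT NULL"), SynapseDdl.Column("Payload", "VARBINARY(MAX)")), heap = true)` yields a statement ending in `WITH (DISTRIBUTION = ROUND_ROBIN, HEAP);`, which you could run against the pool before loading any data.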

A workaround would be to create the table ahead of time as a HEAP (manually, through some code in your notebook, or possibly by calling a T-SQL script that creates it) and then load the data using a different method, such as JDBC. Unfortunately, that will be a slower data transfer.

If the data volume makes the process too slow, you could consider alternative approaches that require some additional work: perform your data processing in the notebook, write the results to the data lake, and then use PolyBase or COPY INTO to load the table. With PolyBase, the table would not need to exist ahead of time (similar to your current Scala code). With the COPY statement, the table would need to exist ahead of time.
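For the data lake plus COPY route, here is a minimal sketch of the load step: a Scala helper that builds a `COPY INTO` statement for Parquet files the notebook has already written to the lake, targeting a table you created beforehand as a HEAP. It assumes Parquet output and, optionally, managed-identity access to the storage account; `SynapseCopy`, `copyInto`, the table name, and the storage URL are hypothetical placeholders. If you take the JDBC route instead, write to the pre-created table with `mode("append")`: Spark's `overwrite` mode drops and recreates the table (losing the HEAP setting) unless the `truncate` option is set.

```scala
// Hypothetical helper: builds a COPY INTO statement that loads Parquet files
// from the data lake into a table that already exists (e.g. a HEAP table).
object SynapseCopy {

  /** @param table              target table, e.g. "dbo.MyTable" (placeholder)
    * @param location           folder or file URL in the lake (placeholder)
    * @param useManagedIdentity if true, authenticate with the workspace managed identity;
    *                           if false, no CREDENTIAL clause is emitted
    */
  def copyInto(table: String, location: String, useManagedIdentity: Boolean): String = {
    // Double any single quotes so the URL is a valid T-SQL string literal.
    val loc = location.replace("'", "''")
    val credential =
      if (useManagedIdentity) Seq("CREDENTIAL = (IDENTITY = 'Managed Identity')") else Seq.empty
    val options = (Seq("FILE_TYPE = 'PARQUET'") ++ credential).mkString(", ")
    s"COPY INTO $table\nFROM '$loc'\nWITH ($options);"
  }
}
```

The generated statement would then be executed against the dedicated SQL pool (for example from a pipeline or SQL script) after the notebook finishes writing its output.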

I hope this helps get you going in the right direction.