Hello @reddy,

Welcome to the Microsoft Q&A platform.

You can move the data from AWS S3 to an Azure Storage account, mount that storage account in Databricks, and then convert the Parquet files to CSV using Scala or Python.
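As a rough sketch of the mount step, assuming a Blob Storage container accessed with an account key: the helper `build_mount_args` is hypothetical, and the container, account, and mount-point values are placeholders rather than anything from your environment. `dbutils.fs.mount` is only available inside a Databricks notebook, so that call is shown as a comment.

```python
def build_mount_args(container, account, account_key, mount_point):
    """Build keyword arguments for dbutils.fs.mount (hypothetical helper)."""
    host = f"{account}.blob.core.windows.net"
    return {
        # wasbs:// is the Blob Storage scheme used by Databricks mounts.
        "source": f"wasbs://{container}@{host}",
        "mount_point": mount_point,
        "extra_configs": {f"fs.azure.account.key.{host}": account_key},
    }


# Placeholder values; replace with your own container, account, and key.
args = build_mount_args("mycontainer", "mystorageacct", "<account-key>", "/mnt/s3data")

# In a Databricks notebook you would then run:
# dbutils.fs.mount(**args)
```

In practice you would likely read the account key from a secret scope with `dbutils.secrets.get` instead of pasting it into the notebook.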

Why are the files named in this format: part-00000-bdo894h-fkji-8766-jjab-988f8d8b9877-c000.snappy.parquet?

By default, the underlying data files for a Parquet table are compressed with Snappy, which is why the file name ends in .snappy.parquet. The combination of fast compression and decompression makes Snappy a good choice for many data sets.
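If it helps to see how such a name breaks down, here is a small sketch that splits it into its parts. The pattern and the field names (`partition`, `write_id`, `file_counter`, `codec`) are my own reading of the usual Spark part-file naming, not an official specification, so treat them as assumptions.

```python
import re

# Assumed layout: part-<partition>-<write id>-c<counter>[.<codec>].parquet
PART_FILE = re.compile(r"^part-(\d+)-(.+)-c(\d+)(?:\.(\w+))?\.parquet$")


def describe_part_file(name):
    """Return the pieces of a Spark-style part file name, or None if it does not match."""
    m = PART_FILE.match(name)
    if m is None:
        return None
    return {
        "partition": int(m.group(1)),
        "write_id": m.group(2),
        "file_counter": int(m.group(3)),
        "codec": m.group(4),  # None when the name carries no codec suffix
    }


info = describe_part_file(
    "part-00000-bdo894h-fkji-8766-jjab-988f8d8b9877-c000.snappy.parquet"
)
print(info)
```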

Using Spark, you can convert Parquet files to CSV format as shown below.

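    # Read the Parquet file(s) into a DataFrame.
    df = spark.read.parquet("/path/to/infile.parquet")

    # Write the DataFrame as CSV. Spark creates a directory at this path containing part files.
    df.write.csv("/path/to/outfile.csv")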
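A slightly fuller sketch of the same conversion, in case you want a header row or a single output file. The function name `parquet_to_csv` and its `single_file` flag are hypothetical; it expects the `spark` session that Databricks notebooks already provide. Note that Spark writes a directory of part files at the output path, not one file with that name.

```python
def parquet_to_csv(spark, src, dst, single_file=False):
    """Read Parquet from src and write CSV with a header row to dst (hypothetical helper)."""
    df = spark.read.parquet(src)
    if single_file:
        # One partition means one part-*.csv file; only sensible for small data.
        df = df.coalesce(1)
    # dst becomes a directory that holds the CSV part file(s).
    df.write.option("header", True).csv(dst)
    return df


# In a notebook, with the paths from the snippet above:
# parquet_to_csv(spark, "/path/to/infile.parquet", "/path/to/outfile.csv", single_file=True)
```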

For more details, refer to “Spark Parquet file to CSV format”.

File is not a valid parquet file.

I would suggest checking the file format. Make sure the file you are passing is a valid Parquet file.
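One quick way to check this is to look at the magic bytes: an unencrypted Parquet file starts and ends with the 4 bytes `PAR1`. The sketch below is only a sanity check under that assumption (the helper name `looks_like_parquet` is hypothetical); it does not prove the footer or data pages are intact.

```python
import os

PARQUET_MAGIC = b"PAR1"


def looks_like_parquet(path):
    """Return True if the file starts and ends with the Parquet magic bytes."""
    # Smallest possible layout: magic (4) + footer length (4) + magic (4).
    if os.path.getsize(path) < 12:
        return False
    with open(path, "rb") as f:
        head = f.read(4)
        f.seek(-4, os.SEEK_END)
        tail = f.read(4)
    return head == PARQUET_MAGIC and tail == PARQUET_MAGIC
```

A file that was renamed to .parquet but is really CSV or JSON will fail this check immediately.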

Parquet file contained column 'XXX', which is of a non-primitive, unsupported type?

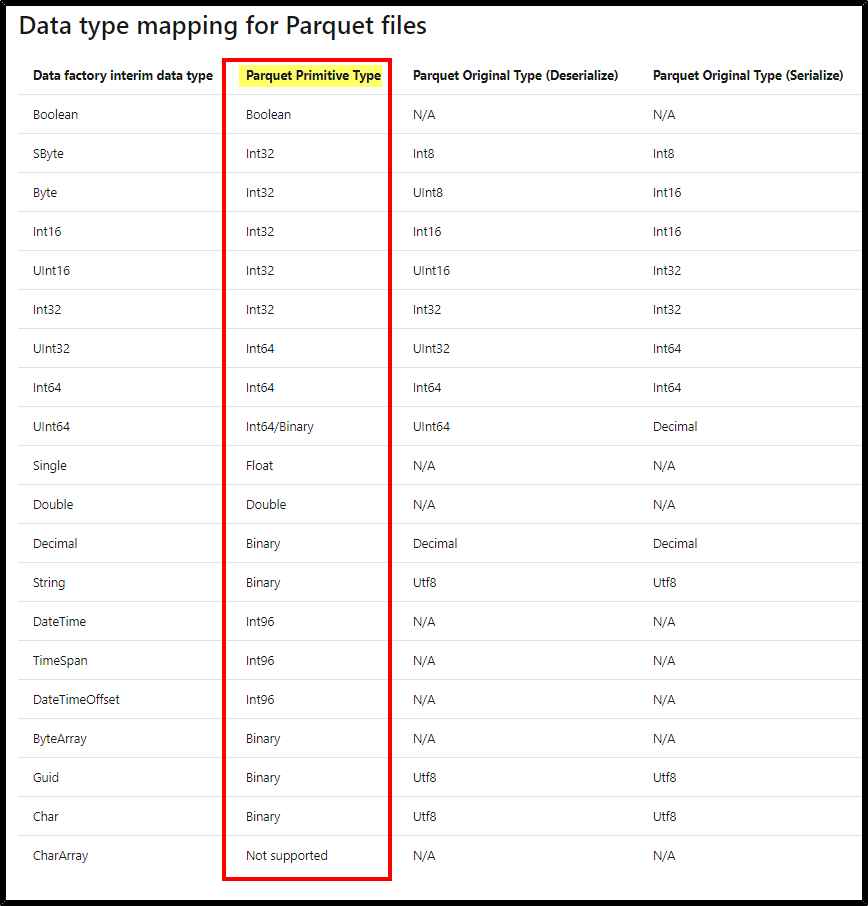

You may see this error message when the Parquet file contains columns with unsupported data types.

Check the data type of column “XXX” in the file and make sure it is a supported data type.
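To find such columns, you could scan the `(name, type)` pairs that a Spark DataFrame exposes as `df.dtypes`. This sketch assumes "non-primitive" means array, map, or struct columns; the helper name `find_complex_columns` and the sample columns are made up for illustration.

```python
# Type-string prefixes Spark uses for nested (non-primitive) columns.
COMPLEX_PREFIXES = ("array<", "map<", "struct<")


def find_complex_columns(dtypes):
    """Return the (name, type) pairs whose type is an array, map, or struct."""
    return [(name, t) for name, t in dtypes if t.startswith(COMPLEX_PREFIXES)]


# Sample pairs in the shape of df.dtypes (illustrative only).
sample = [
    ("id", "bigint"),
    ("tags", "array<string>"),
    ("address", "struct<city:string,zip:string>"),
]
complex_cols = find_complex_columns(sample)
print(complex_cols)

# In a notebook: find_complex_columns(df.dtypes)
```

Any columns it reports could then be dropped or flattened (for example, serialized to a string with `to_json`) before the file is handed to the copy activity.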

These are the supported data type mappings for parquet files.

For more details, refer to “ADF – Supported file formats - Parquet”.

Hope this helps. Do let us know if you have any further queries.
