Hello @Müller, André, and welcome to Microsoft Q&A. If I understand your ask correctly, yes, it is possible to write a parameter into a sink column. However, you need to go to the Copy Activity source options first, since that is where the feature is.

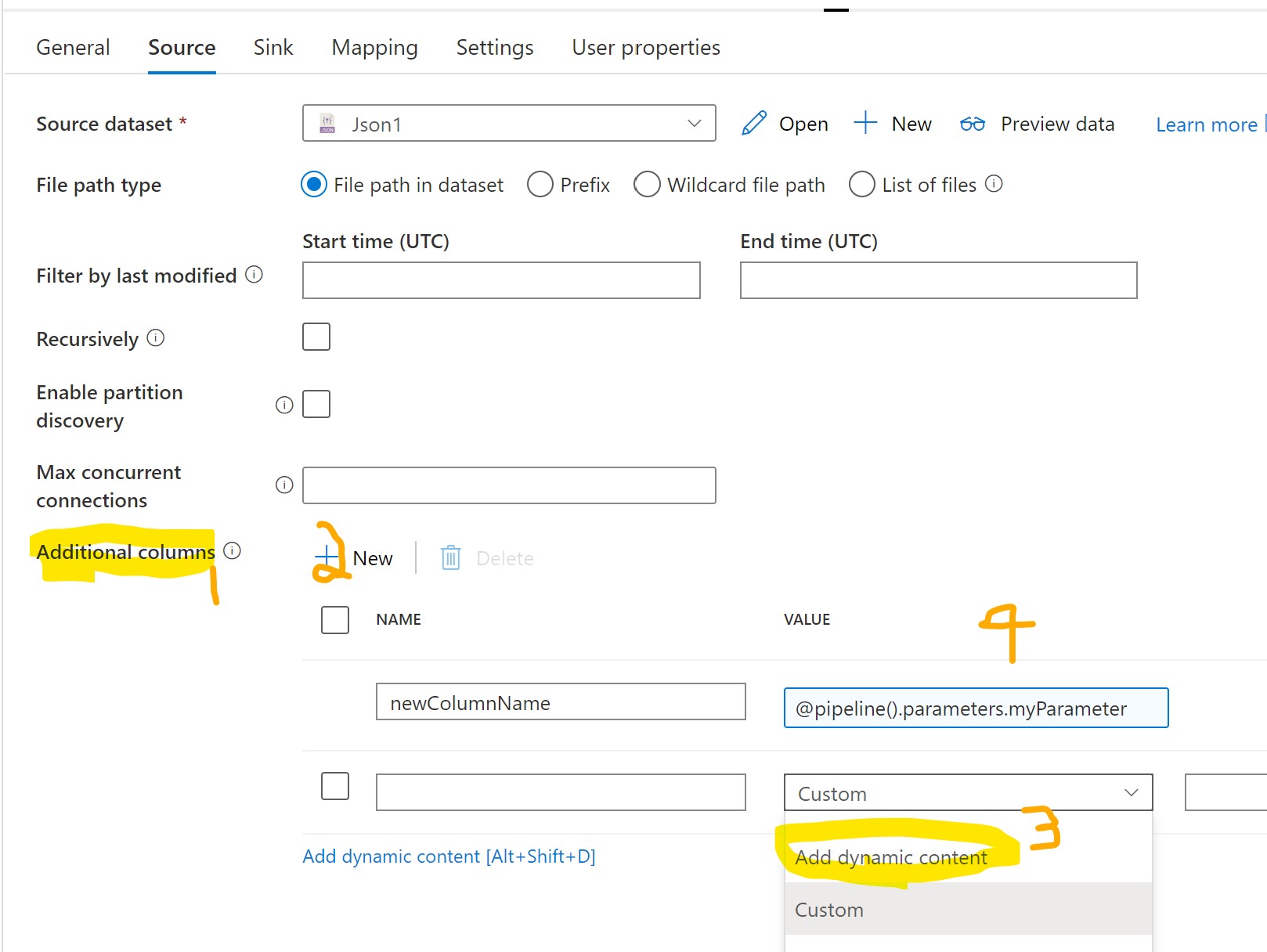

The feature you are looking for is called "Additional columns". You will want to click + to add a new one. After naming your new (source) column, select "Dynamic Content" in the middle drop-down menu. Then you will be prompted to enter the reference to the parameter. See the image above.
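If you prefer to check the result in the pipeline's JSON view, the additional column should end up looking roughly like the fragment built below. This is only a sketch: the column name `LoadDate`, the parameter name `loadDate`, and the `DelimitedTextSource` source type are placeholders I made up, so swap in your own.

```python
import json


def additional_column(column_name: str, parameter_name: str) -> dict:
    """Build one "additionalColumns" entry that references a pipeline parameter."""
    return {
        "name": column_name,
        "value": {
            # Dynamic content is stored as an expression object.
            "value": f"@pipeline().parameters.{parameter_name}",
            "type": "Expression",
        },
    }


# Hypothetical example: column "LoadDate" filled from pipeline parameter "loadDate".
source = {
    "type": "DelimitedTextSource",  # assumed source type, yours may differ
    "additionalColumns": [additional_column("LoadDate", "loadDate")],
}

print(json.dumps(source, indent=2))
```

The printed JSON is what I would expect to see under the Copy Activity's `source` after adding the column in the UI.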

Once this is done, go to the Copy Activity mapping and redo "Import schemas" to make the new column show up on the source (left) side, probably at the bottom of the list. Then you can set which sink column (right) it should map to.

It is possible to add the new mapping without re-importing schemas, but it is easy to make a typo when adding manually.
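If you do add the mapping by hand, a small check like the one below can catch a mistyped source name before you publish. Treat it as a sketch under assumptions: the column names (`Id`, `LoadDate`, `load_date`) are invented for illustration, and `unknown_sources` is a helper I wrote for this answer, not something Data Factory provides.

```python
import json


def mapping_entry(source_name: str, sink_name: str) -> dict:
    """Build one entry for the Copy Activity translator "mappings" list."""
    return {"source": {"name": source_name}, "sink": {"name": sink_name}}


def unknown_sources(translator: dict, known_columns: set) -> list:
    """Return mapped source names that are not in the known column set."""
    return [
        m["source"]["name"]
        for m in translator["mappings"]
        if m["source"]["name"] not in known_columns
    ]


# Hypothetical columns: "Id" comes from the file, "LoadDate" is the additional column.
known = {"Id", "LoadDate"}

translator = {
    "type": "TabularTranslator",
    "mappings": [
        mapping_entry("Id", "Id"),
        mapping_entry("LoadDate", "load_date"),
    ],
}

print(json.dumps(translator, indent=2))
print("Unknown source columns:", unknown_sources(translator, known))
```

A mapping whose source name is misspelled (say `LoadDat`) would show up in the list returned by `unknown_sources`, which is the kind of typo re-importing the schema avoids.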