Hello @Christopher Mühl, and welcome to Microsoft Q&A!

I have some good news and some bad news. The good news is that there is a workaround for retrieving all of the sub-files. The bad news is that the workaround is somewhat clunky and awkward, unless you are willing to do some of the work outside of Azure Data Factory.

I can explain why Get Metadata doesn't work here, and why you can't use wildcards with HTTP sources, but I'm not sure you want to read that explanation. Let me know if you do.

At a high level, the workaround is:

- Use a Web activity to fetch the contents of your base URL.

- Use a Set Variable activity to split the Web activity's output into individual entries, one per file, and store them in an Array-type variable.

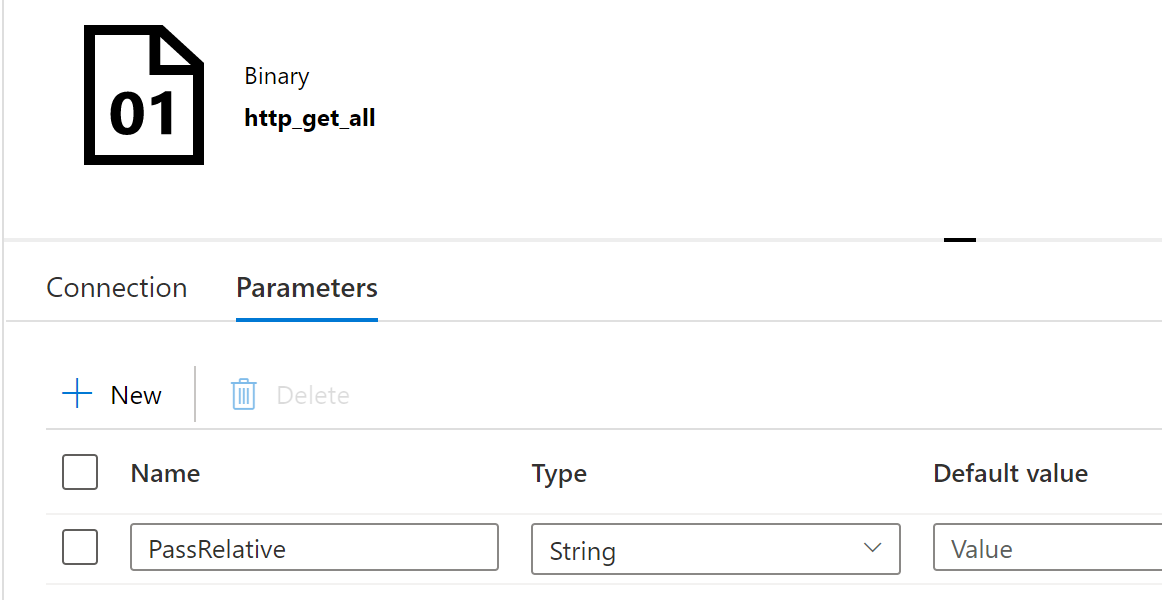

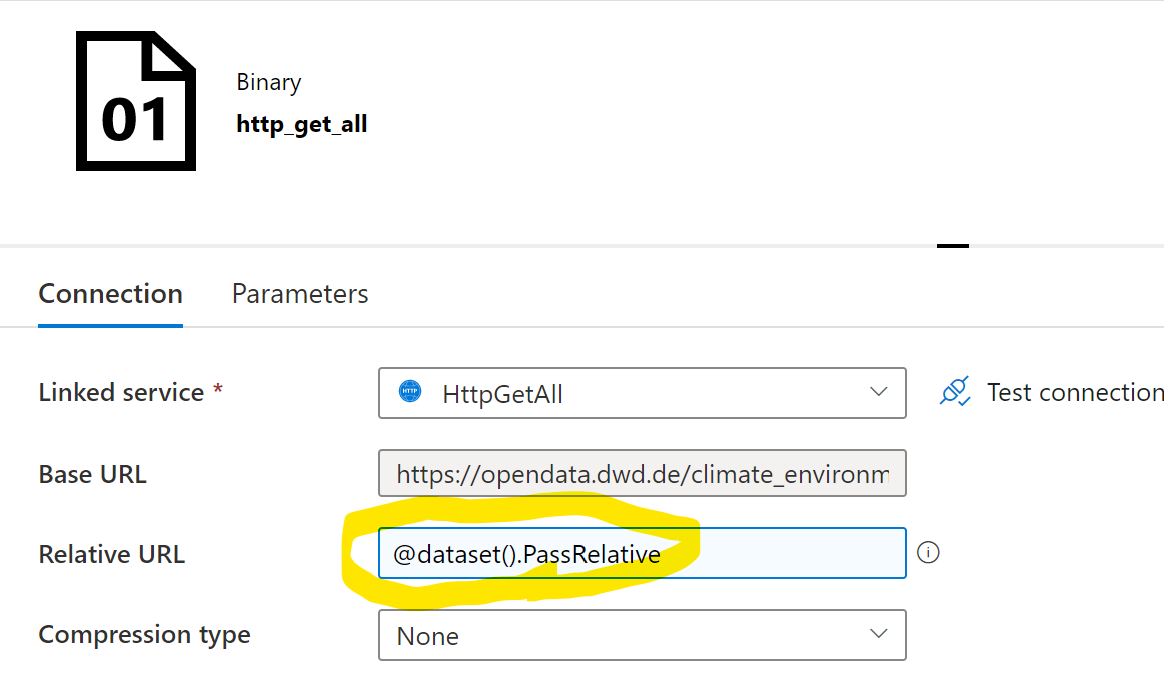

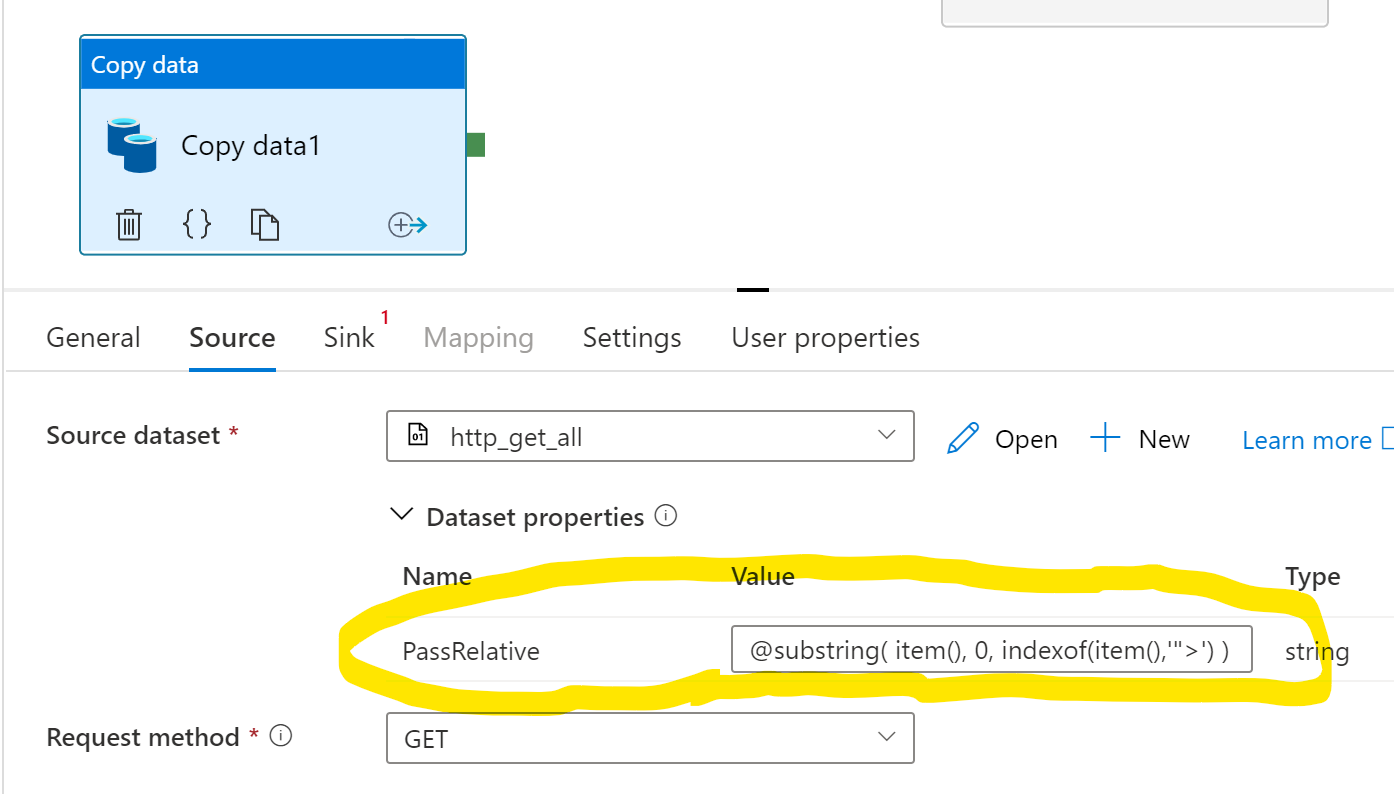

- Clean up the entries stored in the array variable so that each one is usable as a relative URL.

- Iterate over the entries (for example, with a ForEach activity), passing each one into a Copy activity.
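The splitting and clean-up steps are where most of the fiddliness lies. To illustrate the logic outside of ADF, here is a minimal Python sketch. It assumes the base URL returns a plain HTML directory listing in which each file appears as an `<a href="...">` link; the sample listing, the `extract_relative_urls` helper, and the filtering rules are all hypothetical and will need adjusting to whatever your server actually returns. Inside ADF the same idea would have to be expressed with pipeline expression functions such as `split()` and `replace()` instead of an HTML parser.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class _LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag in an HTML page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def extract_relative_urls(listing_html):
    """Hypothetical helper: split a directory listing into one entry per file
    (the Set Variable step), then keep only entries usable as a relative URL
    (the clean-up step). Filtering rules are assumptions."""
    parser = _LinkCollector()
    parser.feed(listing_html)
    relative_urls = []
    for href in parser.hrefs:
        parsed = urlparse(href)
        # Skip links to other hosts, sort links such as "?C=N;O=D", and anchors.
        if parsed.scheme or parsed.netloc or parsed.query or href.startswith("#"):
            continue
        path = parsed.path
        # Skip empty paths, root-relative paths, the parent-directory link,
        # and sub-directories (trailing slash); this sketch does not recurse.
        if not path or path.startswith("/") or path.startswith("..") or path.endswith("/"):
            continue
        relative_urls.append(path)
    return relative_urls


# Hypothetical listing, shaped like a typical web server's auto-generated index.
sample_listing = """
<html><body><h1>Index of /files</h1>
<a href="?C=N;O=D">Name</a>
<a href="../">Parent Directory</a>
<a href="file1.csv">file1.csv</a>
<a href="file2.csv">file2.csv</a>
<a href="archive/">archive/</a>
</body></html>
"""

for relative_url in extract_relative_urls(sample_listing):
    # Each entry would become the Relative URL of one Copy activity run.
    print(relative_url)
```

With the sample listing this prints `file1.csv` and `file2.csv`. A real HTML parser is more forgiving than splitting on a fixed substring, which is one reason doing this step outside of ADF can be less awkward.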

I am still working out the implementation details. As of this writing, I am between the second step (splitting the output into an array) and the third step (cleaning up the entries).