Hello anonymous user,

Welcome to the Microsoft Q&A platform.

If you haven’t configured disaster recovery for your Azure Databricks clusters, you will not be able to access or visualize your data when there is an outage in the region.

If you have configured disaster recovery for your Azure Databricks clusters, you can access and visualize the data using the secondary region.
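As a rough illustration of what "using the secondary region" means for a client, here is a minimal sketch that picks the first healthy workspace from a primary/secondary pair. The workspace URLs and the `is_healthy` check are hypothetical placeholders. This is not an Azure Databricks API, just a client-side pattern under those assumptions.

```python
from typing import Callable, Sequence

# Hypothetical workspace URLs; replace them with your own primary and secondary workspaces.
PRIMARY = "https://adb-1111111111111111.1.azuredatabricks.net"
SECONDARY = "https://adb-2222222222222222.2.azuredatabricks.net"


def pick_active_workspace(candidates: Sequence[str], is_healthy: Callable[[str], bool]) -> str:
    """Return the first workspace URL, in priority order, that passes the health check."""
    for url in candidates:
        if is_healthy(url):
            return url
    raise RuntimeError("No healthy Databricks workspace available")


if __name__ == "__main__":
    # Simulated regional outage: the primary is unavailable, so the secondary is selected.
    down = {PRIMARY}
    print(pick_active_workspace([PRIMARY, SECONDARY], lambda url: url not in down))
```

In practice, `is_healthy` would be whatever check fits your setup (for example, a lightweight authenticated API call). The sketch only shows the priority-ordered fallback.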

The persisted data is available in your storage account, which can be Azure Blob Storage or Azure Data Lake Storage. Once a cluster is created, you can run jobs via notebooks, REST APIs, or ODBC/JDBC endpoints by attaching them to that cluster.
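For the REST API route, a job that is already defined in the workspace can be triggered with the Jobs API `run-now` endpoint. The sketch below only builds the request so it can be inspected. The workspace URL, token, and job ID are hypothetical, and it assumes a personal access token and an existing job whose settings specify the cluster to use.

```python
import json
import urllib.request


def build_run_now_request(workspace_url: str, token: str, job_id: int) -> urllib.request.Request:
    """Build (but do not send) a Jobs API 2.1 run-now request for an existing job."""
    body = json.dumps({"job_id": job_id}).encode("utf-8")
    return urllib.request.Request(
        url=f"{workspace_url.rstrip('/')}/api/2.1/jobs/run-now",
        data=body,
        headers={
            "Authorization": f"Bearer {token}",  # hypothetical personal access token
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical workspace and job ID; send with urllib.request.urlopen(req) against a real workspace.
    req = build_run_now_request("https://adb-1111111111111111.1.azuredatabricks.net", "dapiXXXX", 42)
    print(req.get_method(), req.full_url)
```

Pointing `workspace_url` at the secondary workspace is all that changes for a client after failover, provided the same job has been recreated there.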

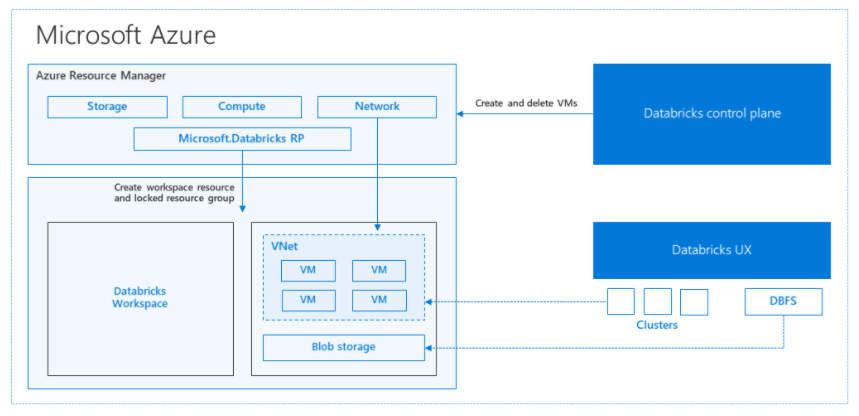

The Databricks control plane manages and monitors the Databricks workspace environment. Any management operation, such as creating a cluster, is initiated from the control plane. All metadata, such as scheduled jobs, is stored in an Azure Database with geo-replication for fault tolerance.

One of the advantages of this architecture is that users can connect Azure Databricks to any storage resource in their account. Another key benefit is that compute (Azure Databricks) and storage can be scaled independently of each other.
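Because storage is decoupled from compute, a notebook in either region addresses the data by the same kind of URI. For Azure Data Lake Storage Gen2 that is the `abfss://<container>@<account>.dfs.core.windows.net/<path>` form. This minimal sketch builds such URIs. The container and storage account names are hypothetical, and it assumes your DR setup replicates data to a second storage account.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen2 URI: abfss://<container>@<account>.dfs.core.windows.net/<path>."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


if __name__ == "__main__":
    # Hypothetical primary and replicated storage accounts; only the account name differs.
    for account in ("contosoprimary", "contososecondary"):
        # In a notebook this URI could be passed to e.g. spark.read.parquet(...).
        print(abfss_uri("raw", account, "/sales/2024"))
```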

This article describes a disaster recovery architecture useful for Azure Databricks clusters, and the steps to accomplish that design.

Hope this helps. Do let us know if you have any further queries.

----------------------------------------------------------------------------------------

Please click "Accept Answer" and upvote the post that helps you; this can be beneficial to other community members.