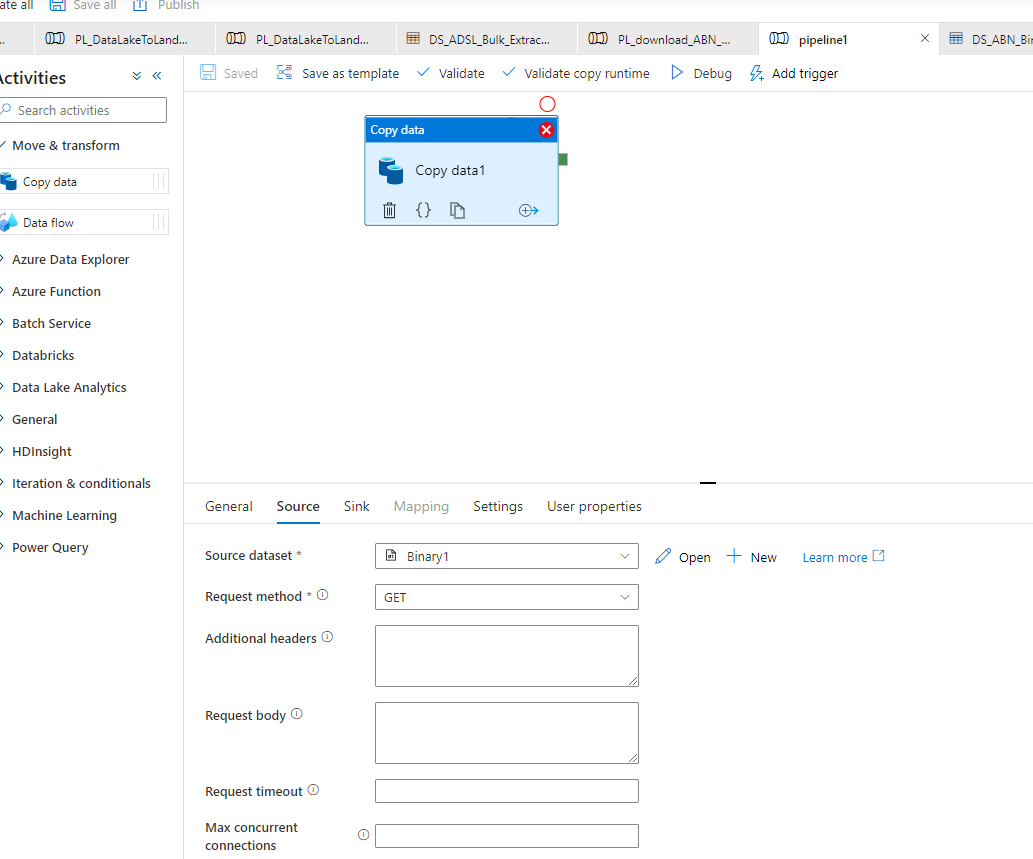

Thanks @svijay-MSFT for your reply. This approach was definitely working for us; the screenshots above are from a test copy activity. I also developed a solution that parametrizes the URL and does everything in a single copy activity. As further evidence, please see this answer from another MSFT member, "https://learn.microsoft.com/en-us/answers/questions/544541/how-to-copy-http-zip-file-to-azure-blob.html", which does exactly this in one copy activity. That answer also links a YouTube presentation that follows the same steps I did, and it worked for everyone. It is important that I find out what has changed in the past 3 to 4 weeks so that I can share it with my team.

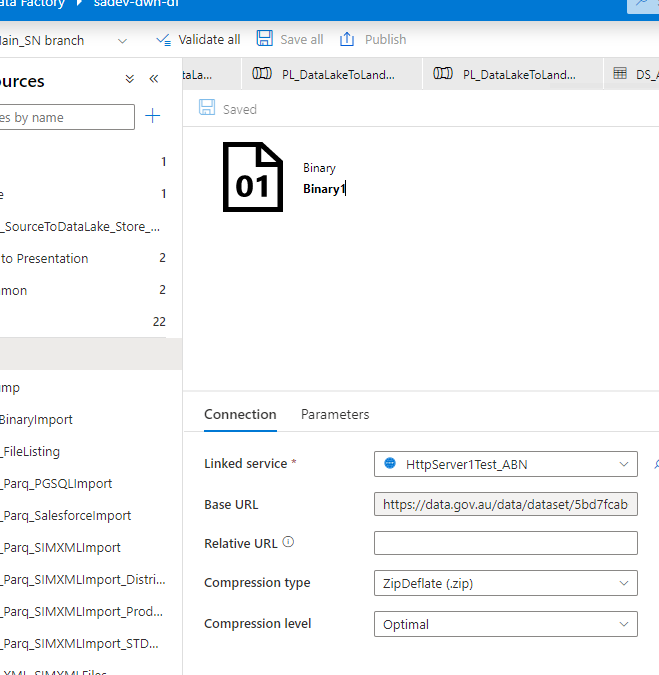

Regarding your suggested solution: since I was not clear about which compression type to use for the HTTP source, I tried the following.

Step 1:

Source: HTTP, as Binary, with compression type "None"

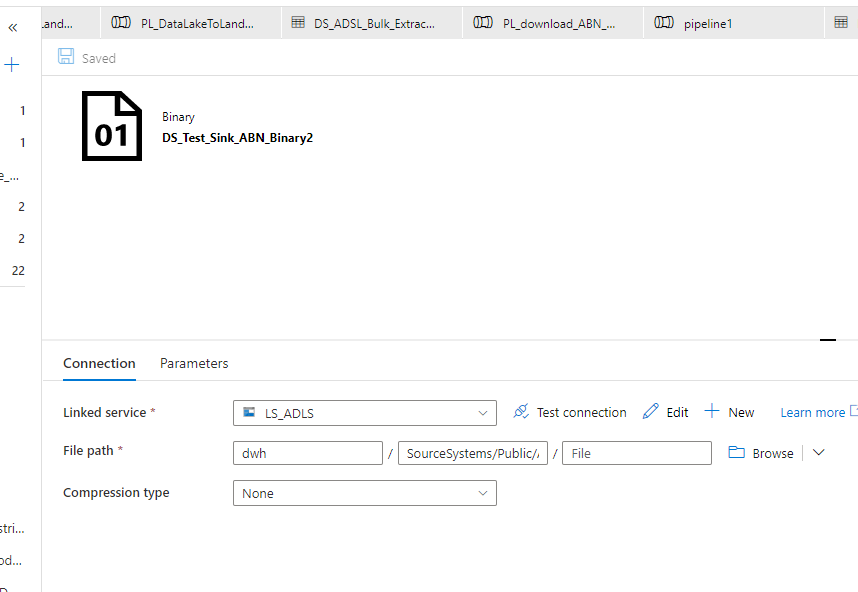

Sink: Azure Data Lake Gen2, as Binary, with compression type "None"

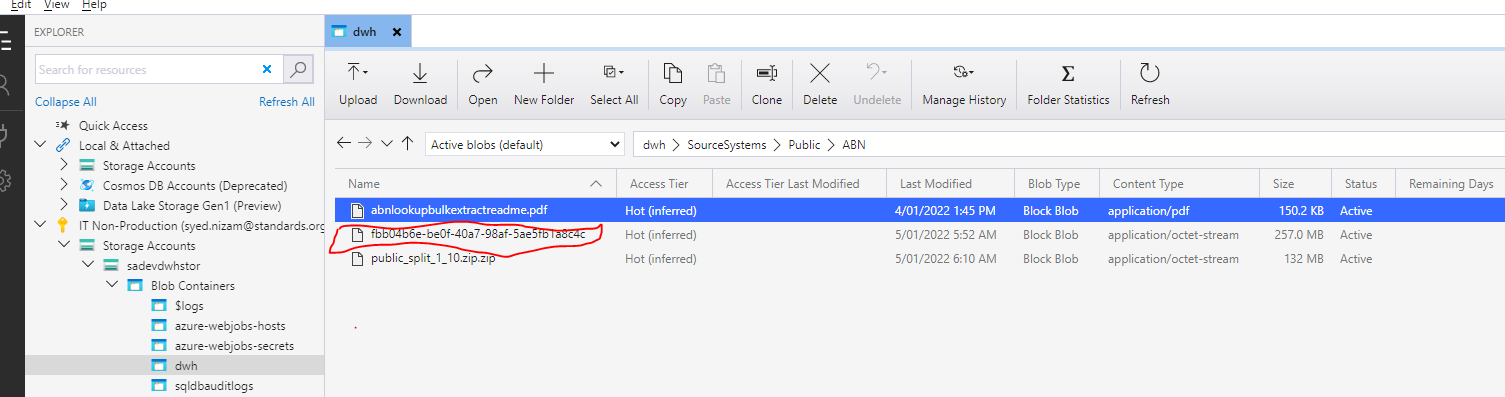

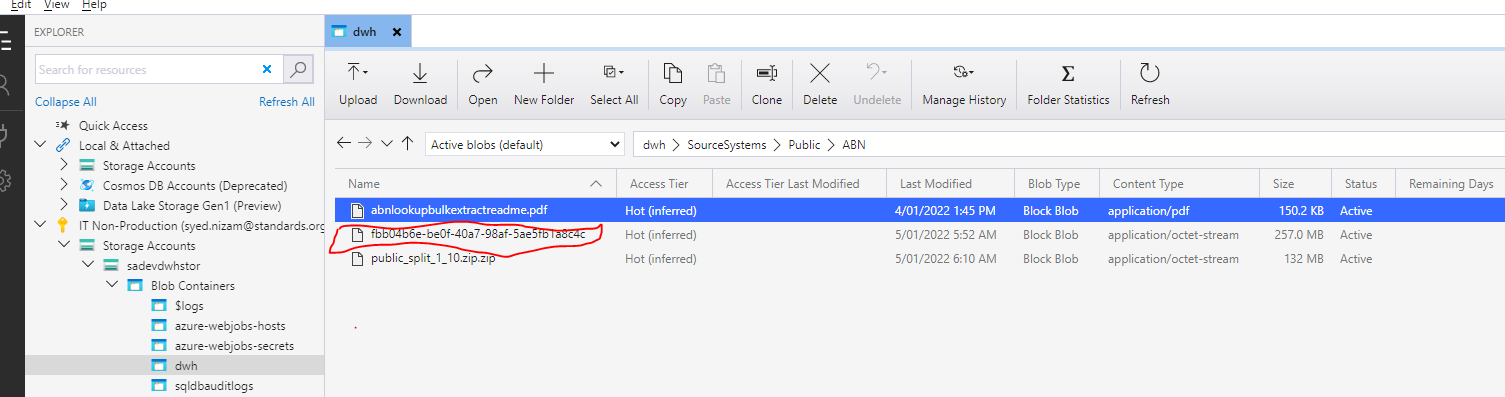

It creates a file with a random name and no extension, not the actual name "public_split_1_10.zip" that you get when you download the file normally (see the file name in red in the screenshot below).
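For reference, the name you get from a normal download here is simply the last segment of the URL path. A minimal Python sketch, assuming the name comes only from the path (a browser may also honor a `Content-Disposition` header, which this ignores); `file_name_from_url` is a hypothetical helper, not a Data Factory feature. In Data Factory the rough equivalent would presumably be to set the sink dataset's file name explicitly (for example from a pipeline parameter) instead of leaving it blank.

```python
import posixpath
from urllib.parse import unquote, urlparse


def file_name_from_url(url: str) -> str:
    """Return the last path segment of a URL, i.e. the name a browser
    would typically save the download as (Content-Disposition ignored)."""
    path = urlparse(url).path  # drops scheme, host, query string and fragment
    return unquote(posixpath.basename(path))  # decode %20 etc.


if __name__ == "__main__":
    url = (
        "https://data.gov.au/data/dataset/5bd7fcab-e315-42cb-8daf-50b7efc2027e/"
        "resource/0ae4d427-6fa8-4d40-8e76-c6909b5a071b/download/public_split_1_10.zip"
    )
    print(file_name_from_url(url))  # public_split_1_10.zip
```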

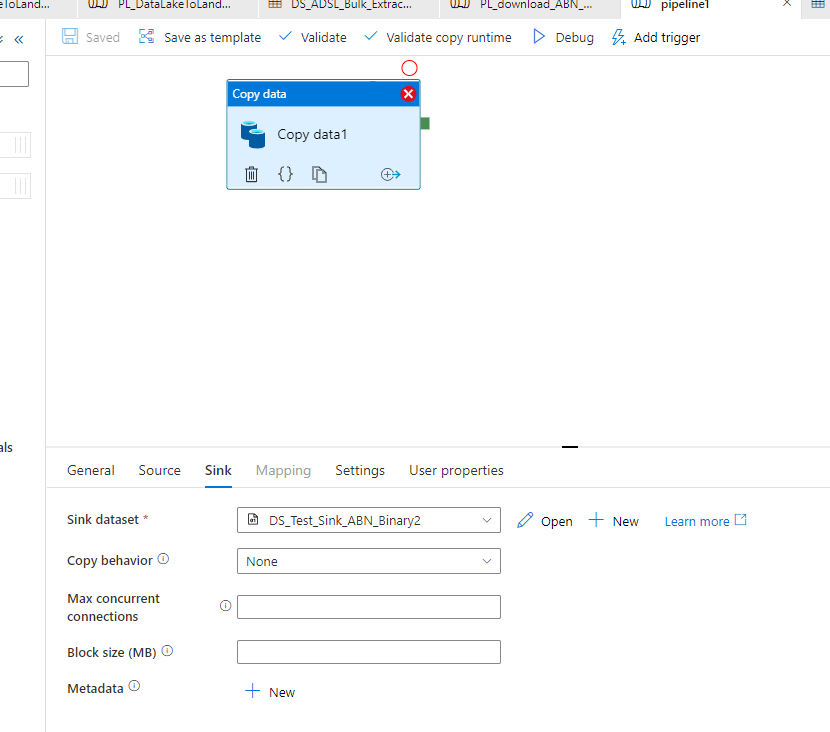

Trying Step 1 another way:

Step 1:

Source: HTTP, as Binary, with compression type "None"

Sink: Azure Data Lake Gen2, as Binary, with compression type "ZipDeflate"

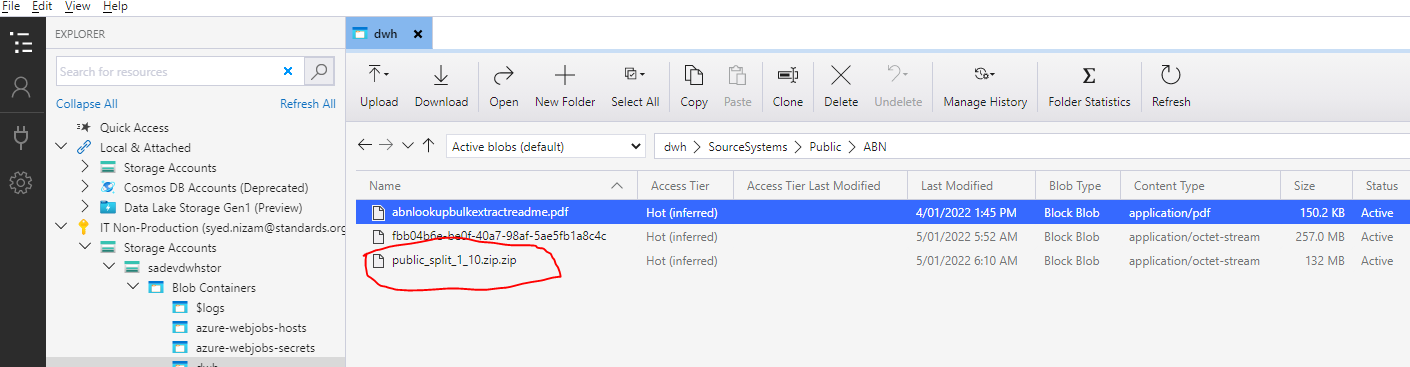

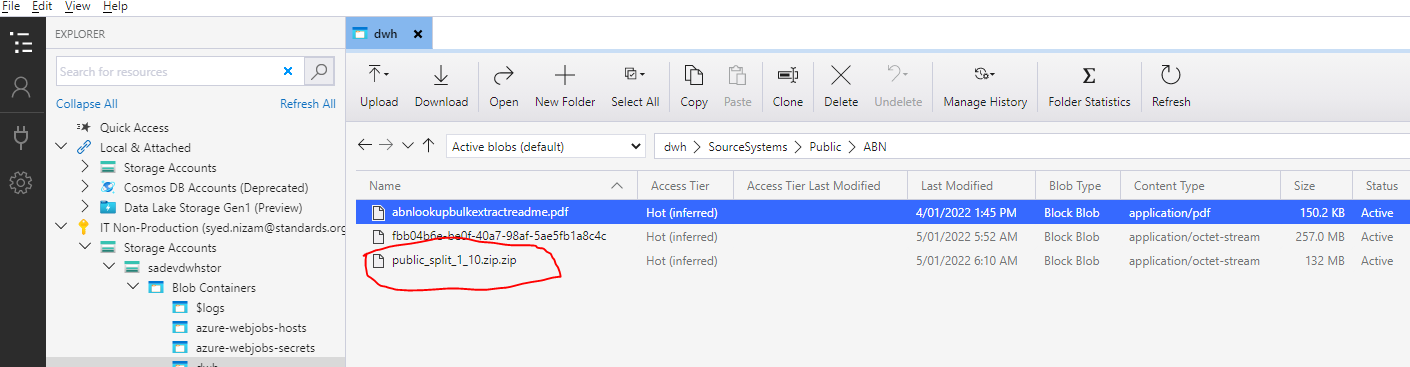

It creates a file with a more sensible name, but it appends .zip again because of the compression type, giving Public_Split_1_10.zip.zip (see the part in red in the screenshot below). I need the proper name, not Public_Split_1_10.zip.zip; otherwise it causes problems in the next copy activity, where I want to use the wildcard path *.zip.
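A quick local check of what the ZipDeflate sink produced can help tell a renaming problem from a content problem. The sketch below assumes (not confirmed here) that the sink wraps the already-zipped download in a second zip layer; `strip_repeated_suffix` and `is_nested_zip` are hypothetical helpers. Note that a glob like `*.zip` still matches `Public_Split_1_10.zip.zip`, so the real risk downstream is probably the extra zip layer rather than the wildcard.

```python
import io
import zipfile
from fnmatch import fnmatch


def strip_repeated_suffix(name: str, suffix: str = ".zip") -> str:
    """Collapse a repeated extension such as 'x.zip.zip' down to 'x.zip'."""
    doubled = (suffix * 2).lower()
    while name.lower().endswith(doubled):
        name = name[: -len(suffix)]
    return name


def is_nested_zip(data: bytes) -> bool:
    """True if `data` is a zip archive whose single member is itself a zip."""
    if not zipfile.is_zipfile(io.BytesIO(data)):
        return False
    with zipfile.ZipFile(io.BytesIO(data)) as outer:
        names = outer.namelist()
        if len(names) != 1:
            return False
        inner = outer.read(names[0])
    return zipfile.is_zipfile(io.BytesIO(inner))


if __name__ == "__main__":
    print(strip_repeated_suffix("Public_Split_1_10.zip.zip"))  # Public_Split_1_10.zip
    print(fnmatch("Public_Split_1_10.zip.zip", "*.zip"))  # True
```

Running `is_nested_zip` on the bytes of the sink output would show whether the file is the original archive or a re-compressed copy of it.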

The URL I have given is public, so you can try it at your end; let me know what works for you.

HTTP Source URL "https://data.gov.au/data/dataset/5bd7fcab-e315-42cb-8daf-50b7efc2027e/resource/0ae4d427-6fa8-4d40-8e76-c6909b5a071b/download/public_split_1_10.zip"
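Since the pipeline parametrizes the URL, one way to keep the source and sink names aligned is to build the URL from its parts. This is a sketch only: the `/data/dataset/{dataset}/resource/{resource}/download/{file}` pattern is inferred from the single URL above and is not a documented data.gov.au contract, and `build_download_url` is a hypothetical helper.

```python
from urllib.parse import quote

# Assumed pattern, inferred from one example URL only.
BASE = "https://data.gov.au/data/dataset"


def build_download_url(dataset_id: str, resource_id: str, file_name: str) -> str:
    """Assemble a download URL from its (assumed) parameter parts."""
    return (
        f"{BASE}/{quote(dataset_id)}/resource/{quote(resource_id)}"
        f"/download/{quote(file_name)}"
    )
```

Because the file name is one of the inputs, the same value can be reused directly as the sink file name instead of being parsed back out of the URL.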

More importantly, I need to understand what has changed in Data Factory: something that was working for me and for others now has an issue, and our organisation needs to know why.