The short answer is: no, you can't, but Databricks has an extra layer of protection.

Scope creation:

When you create a key vault-backed Databricks secret scope, the following entry is added to the key vault's access policy list:

    {
      "objectId": "c836dda9-16de-4b63-95ad-42da15a6055a",
      "permissions": {
        "secrets": [
          "get",
          "list"
        ]
      },
      "tenantId": "902e9b63-xxxx-xxxx-xxxx-3aafbc9e0fb8"
    }

where c836dda9-16de-4b63-95ad-42da15a6055a is the well-known UUID of the tenant-wide AzureDatabricks enterprise application.
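To see whether a vault has already picked up this entry, you can scan its access policy list. The following is only a minimal sketch: it assumes the policy list has been exported as JSON in the same shape as the entry above, and it uses the object ID quoted in this answer, which you should verify against your own tenant. The function name and the sample data are made up for illustration.

```python
import json

# Object ID quoted in this answer for the AzureDatabricks enterprise application.
# Assumption: verify this value in your own tenant before relying on it.
DATABRICKS_OBJECT_ID = "c836dda9-16de-4b63-95ad-42da15a6055a"


def find_databricks_policies(access_policies, object_id=DATABRICKS_OBJECT_ID):
    """Return the access policy entries that grant secret permissions to object_id."""
    matches = []
    for policy in access_policies:
        # Compare case-insensitively, since GUIDs may be exported in either case.
        if policy.get("objectId", "").lower() != object_id.lower():
            continue
        secret_perms = (policy.get("permissions") or {}).get("secrets") or []
        if secret_perms:
            matches.append(policy)
    return matches


# Hypothetical sample shaped like the entry shown above (tenant ID is a placeholder).
sample = json.loads("""
[
  {"objectId": "c836dda9-16de-4b63-95ad-42da15a6055a",
   "permissions": {"secrets": ["get", "list"]},
   "tenantId": "00000000-0000-0000-0000-000000000000"},
  {"objectId": "11111111-1111-1111-1111-111111111111",
   "permissions": {"secrets": ["get"]},
   "tenantId": "00000000-0000-0000-0000-000000000000"}
]
""")

for entry in find_databricks_policies(sample):
    print(entry["objectId"], sorted(entry["permissions"]["secrets"]))
```

An empty result means no matching entry was found in the exported list; it says nothing about RBAC role assignments, which are stored separately.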

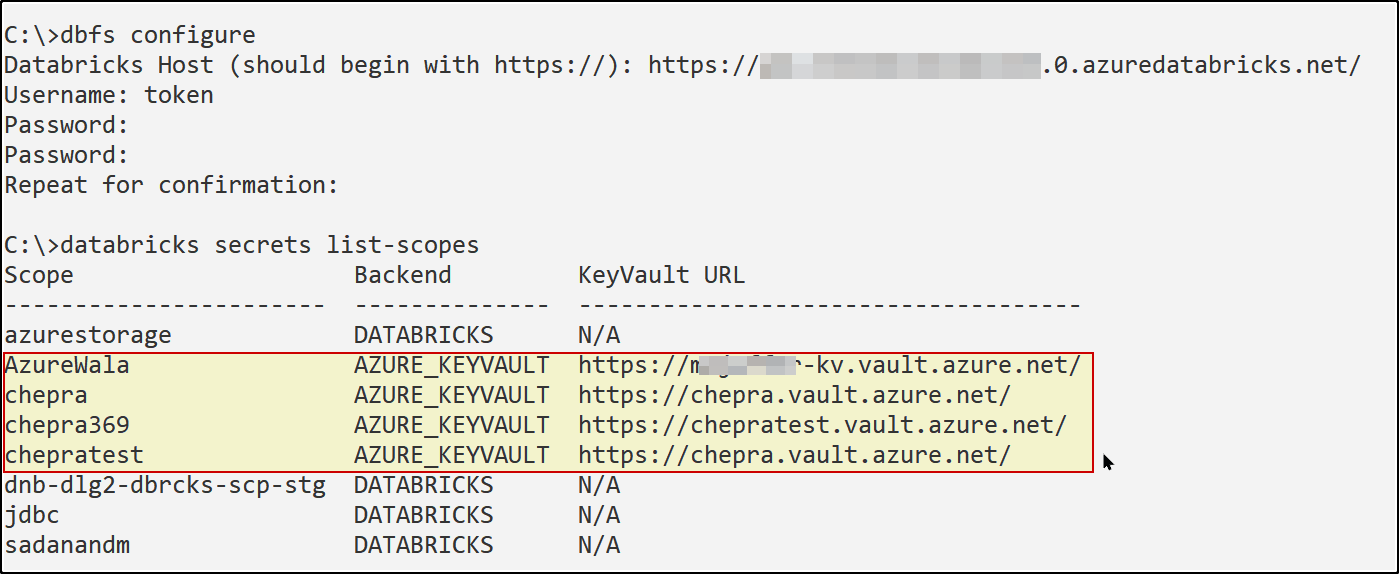

In effect, the first scope created for a key vault gives every Databricks instance in the tenant the right to list and read all secrets in that key vault.

This is the main issue in this thread. In my opinion this is a HUGE problem, because the documentation does not mention that scope creation changes key vault properties or the access policy list.

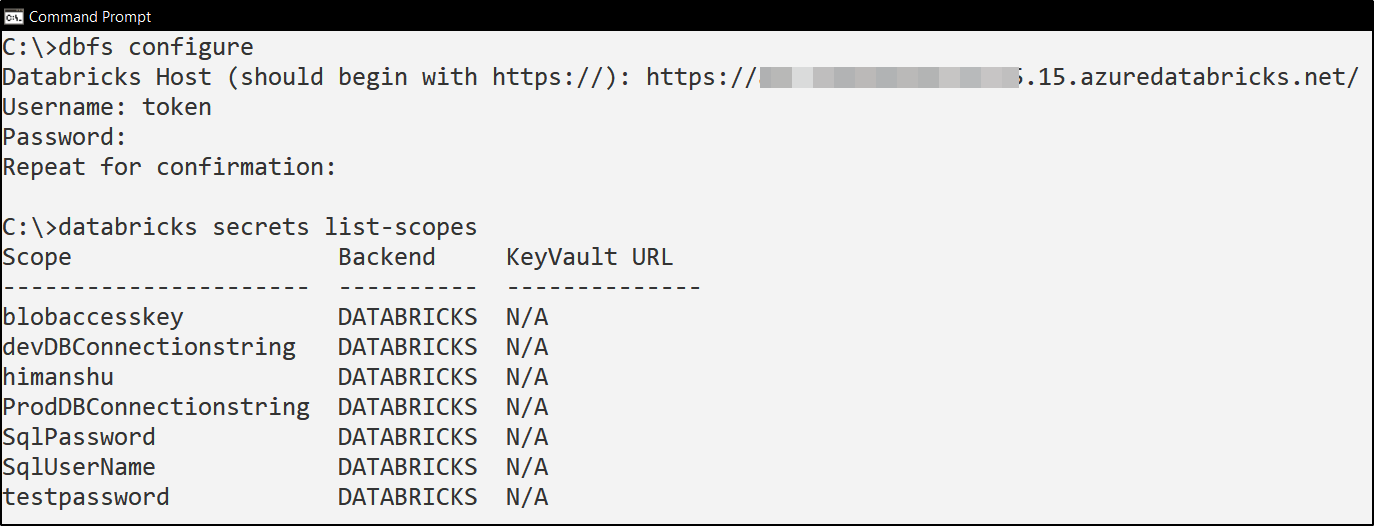

Scope creation permission checks:

Creation of a key vault-backed Databricks scope fails if the user or identity creating the scope does not have sufficient rights on the given key vault.

Scope creation requires write access to the key vault control plane: the Key Vault Contributor, Contributor or Owner role. A suitable custom role is also sufficient.

If the creating identity has no role that allows updating the key vault's regular access policy list, scope creation fails with:

Unable to grant read/list permission to Databricks service principal to KeyVault: https://xxx.vault.azure.net/

Other notes:

Scope creation ignores RBAC-based key vault permission management. The regular access policy entry is added to the key vault even if RBAC-based permission management is turned on.

Should we trust the key vault permission check in Databricks scope creation?

In my opinion, no. Reasoning:

- Adding an access policy for a tenant-wide enterprise app should be documented. Making this kind of access policy change silently is not acceptable.

- The permission check should be documented. An error message shown when the check or the policy addition fails is not proper documentation.

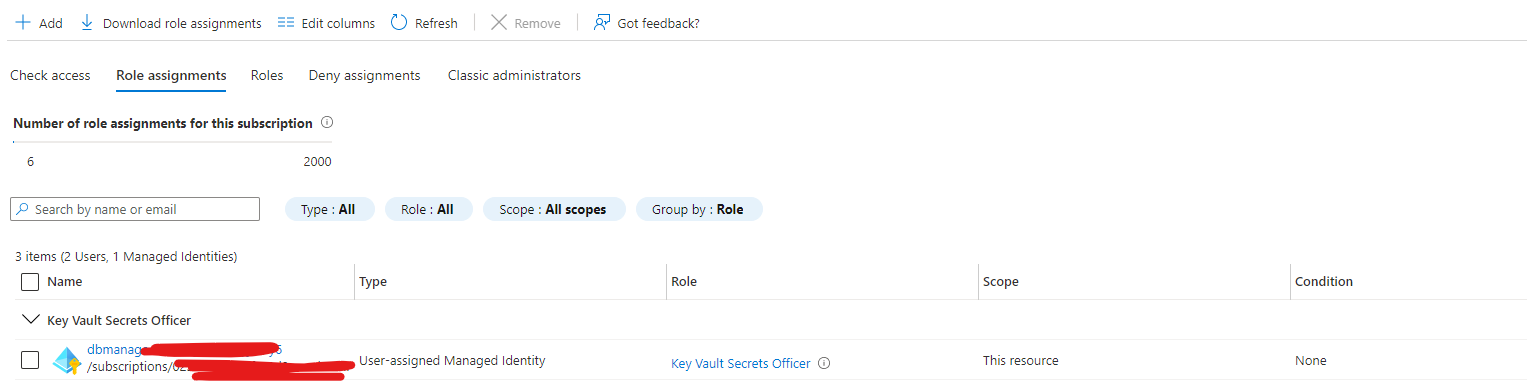

RBAC-based key vault access policy:

If the key vault uses RBAC-based permission management, you need to add the authorizations manually, because Databricks scope creation supports only the regular access policy model.

Note that you can grant access at the level of individual secrets if you need that.
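As a rough illustration of the manual step, the sketch below builds an `az role assignment create` command for either a whole vault or a single secret. It is not taken from the Databricks documentation: the helper names and all IDs are placeholders, the choice of the built-in Key Vault Secrets User role is my assumption about what read access needs, and which principal to assign it to is something you have to decide for your own setup.

```python
import shlex


def key_vault_scope(subscription_id, resource_group, vault_name, secret_name=None):
    """Build the Azure resource ID used as the RBAC scope.

    With secret_name set, the scope narrows from the vault to one secret.
    """
    scope = (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )
    if secret_name:
        scope += f"/secrets/{secret_name}"
    return scope


def role_assignment_command(principal_object_id, scope, role="Key Vault Secrets User"):
    """Return the Azure CLI argument list for the role assignment (not executed here)."""
    return [
        "az", "role", "assignment", "create",
        "--assignee-object-id", principal_object_id,
        "--assignee-principal-type", "ServicePrincipal",
        "--role", role,
        "--scope", scope,
    ]


# All values below are placeholders, not real resources.
scope = key_vault_scope(
    "00000000-0000-0000-0000-000000000000", "my-rg", "my-vault", secret_name="my-secret"
)
command = role_assignment_command("11111111-1111-1111-1111-111111111111", scope)
print(shlex.join(command))
```

The script only prints the command so that it can be reviewed before anyone runs it. Dropping `secret_name` widens the assignment to every secret in the vault.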

TLDR:

- No, you cannot grant key vault access to only specific Databricks accounts

- but Databricks scope creation has an internal check that blocks scope creation if the creating user has no key vault access

Related issues: