Hi @Keith Miller,

Welcome to Microsoft Q&A Platform and thanks for the query.

Per the Microsoft documentation, Azure Cosmos DB limits the size of a single request to 2 MB. The formula is: Request Size = Single Document Size * Write Batch Size.
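
As a rough illustration of that formula, the sketch below works out the largest write batch size that keeps a request under the limit. The function names are hypothetical, and it assumes "2 MB" means 2 * 1024 * 1024 bytes and that every document in the batch has the same size.

```python
# Assumption: the 2 MB limit is interpreted as binary megabytes.
COSMOS_MAX_REQUEST_BYTES = 2 * 1024 * 1024


def request_size(single_document_bytes: int, write_batch_size: int) -> int:
    """Request Size = Single Document Size * Write Batch Size."""
    return single_document_bytes * write_batch_size


def max_write_batch_size(single_document_bytes: int,
                         limit_bytes: int = COSMOS_MAX_REQUEST_BYTES) -> int:
    """Largest batch size whose request size stays within the limit.

    A result of 0 means a single document already exceeds the limit,
    so the document itself has to be split before it can be written.
    """
    if single_document_bytes <= 0:
        raise ValueError("single_document_bytes must be positive")
    return limit_bytes // single_document_bytes


if __name__ == "__main__":
    doc_bytes = 200 * 1024  # hypothetical 200 KB document
    batch = max_write_batch_size(doc_bytes)
    print(batch, request_size(doc_bytes, batch))
```

If the result is 0, lowering the batch size will not help and the document needs to be split, which is the case the workaround further down addresses.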

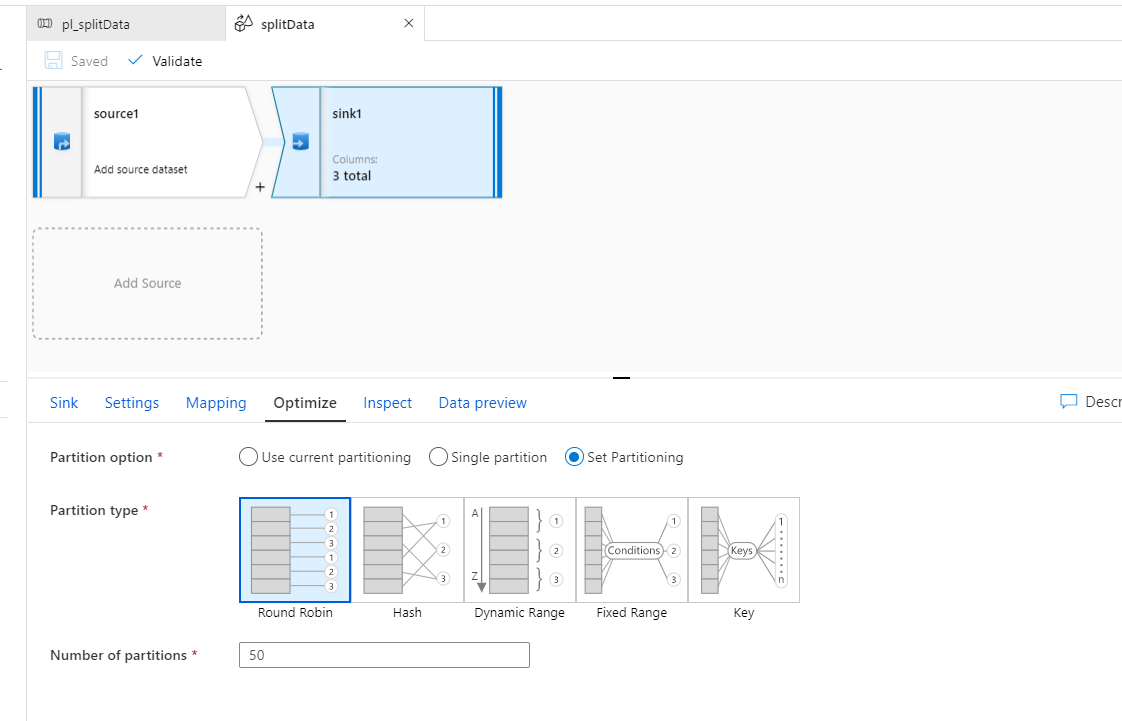

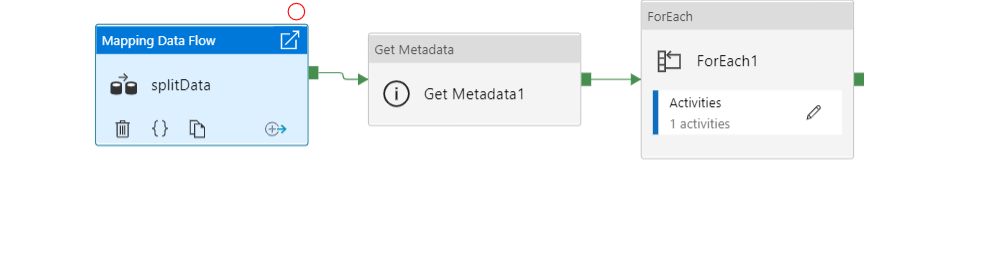

If a request exceeds this limit, the suggested workaround is to split the document into multiple partitions (files), and then use a ForEach activity containing a Copy activity to write each file into Cosmos DB, as shown below.

Adding the JSONs as requested:

Pipeline JSON - pl_splitData

    {
        "name": "pl_splitData",
        "properties": {
            "activities": [
                {
                    "name": "splitData",
                    "type": "ExecuteDataFlow",
                    "dependsOn": [],
                    "policy": {
                        "timeout": "7.00:00:00",
                        "retry": 0,
                        "retryIntervalInSeconds": 30,
                        "secureOutput": false,
                        "secureInput": false
                    },
                    "userProperties": [],
                    "typeProperties": {
                        "compute": {
                            "coreCount": 8,
                            "computeType": "General"
                        }
                    }
                },
                {
                    "name": "ForEach1",
                    "type": "ForEach",
                    "dependsOn": [
                        {
                            "activity": "Get Metadata1",
                            "dependencyConditions": [
                                "Succeeded"
                            ]
                        }
                    ],
                    "userProperties": [],
                    "typeProperties": {
                        "items": {
                            "value": "@activity('Get Metadata1').output.childItems",
                            "type": "Expression"
                        },
                        "isSequential": true,
                        "activities": [
                            {
                                "name": "Copy data1",
                                "type": "Copy",
                                "dependsOn": [],
                                "policy": {
                                    "timeout": "7.00:00:00",
                                    "retry": 0,
                                    "retryIntervalInSeconds": 30,
                                    "secureOutput": false,
                                    "secureInput": false
                                },
                                "userProperties": [],
                                "typeProperties": {
                                    "source": {
                                        "type": "JsonSource",
                                        "storeSettings": {
                                            "type": "AzureBlobStorageReadSettings",
                                            "recursive": true,
                                            "wildcardFolderPath": "toCosmos",
                                            "wildcardFileName": {
                                                "value": "@item().name",
                                                "type": "Expression"
                                            },
                                            "enablePartitionDiscovery": false
                                        },
                                        "formatSettings": {
                                            "type": "JsonReadSettings"
                                        }
                                    },
                                    "sink": {
                                        "type": "CosmosDbSqlApiSink",
                                        "writeBehavior": "upsert",
                                        "disableMetricsCollection": false
                                    },
                                    "enableStaging": false
                                },
                                "outputs": [
                                    {
                                        "referenceName": "CosmosDbSqlApiCollection1",
                                        "type": "DatasetReference"
                                    }
                                ]
                            }
                        ]
                    }
                },
                {
                    "name": "Get Metadata1",
                    "type": "GetMetadata",
                    "dependsOn": [
                        {
                            "activity": "splitData",
                            "dependencyConditions": [
                                "Succeeded"
                            ]
                        }
                    ],
                    "policy": {
                        "timeout": "7.00:00:00",
                        "retry": 0,
                        "retryIntervalInSeconds": 30,
                        "secureOutput": false,
                        "secureInput": false
                    },
                    "userProperties": [],
                    "typeProperties": {
                        "fieldList": [
                            "childItems"
                        ],
                        "storeSettings": {
                            "type": "AzureBlobStorageReadSettings",
                            "recursive": true
                        },
                        "formatSettings": {
                            "type": "JsonReadSettings"
                        }
                    }
                }
            ],
            "annotations": []
        }
    }

DataFlow JSON - splitData

    {
        "name": "splitData",
        "properties": {
            "type": "MappingDataFlow",
            "typeProperties": {
                "sources": [
                    {
                        "dataset": {
                            "referenceName": "input_json_59MB",
                            "type": "DatasetReference"
                        },
                        "name": "source1"
                    }
                ],
                "sinks": [
                    {
                        "dataset": {
                            "referenceName": "ds_OutputJSON",
                            "type": "DatasetReference"
                        },
                        "name": "sink1"
                    }
                ],
                "transformations": [],
                "script": "source(output(\n\t\ttype as string,\n\t\tcrs as (type as string, properties as (name as string)),\n\t\tfeatures as (type as string, properties as (pid as string, lat_max as double, lat_min as double, long_max as double, long_min as double), geometry as (type as string, coordinates as double[][][][]))[]\n\t),\n\tallowSchemaDrift: true,\n\tvalidateSchema: false,\n\tsingleDocument: true) ~> source1\nsource1 sink(input(\n\t\ttype as string,\n\t\tcrs as (type as string, properties as (name as string)),\n\t\tfeatures as (type as string, properties as (pid as string, lat_max as double, lat_min as double, long_max as double, long_min as double), geometry as (type as string, coordinates as double[][][][]))[]\n\t),\n\tallowSchemaDrift: true,\n\tvalidateSchema: false,\n\tfilePattern:'file[n].json',\n\ttruncate: true,\n\tpartitionBy('roundRobin', 50),\n\tskipDuplicateMapInputs: true,\n\tskipDuplicateMapOutputs: true) ~> sink1"
            }
        }
    }
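
For intuition about the `partitionBy('roundRobin', 50)` setting in the data flow script, here is a minimal Python sketch of round-robin distribution. It is only an illustration of the general idea, not how the data flow engine implements it, and the function name `split_round_robin` is hypothetical.

```python
from typing import Any, List, Sequence


def split_round_robin(rows: Sequence[Any], partitions: int) -> List[List[Any]]:
    """Deal rows into `partitions` buckets one at a time, like dealing cards.

    Row 0 goes to bucket 0, row 1 to bucket 1, and so on, wrapping around
    so that bucket sizes differ by at most one row.
    """
    if partitions <= 0:
        raise ValueError("partitions must be positive")
    buckets: List[List[Any]] = [[] for _ in range(partitions)]
    for index, row in enumerate(rows):
        buckets[index % partitions].append(row)
    return buckets


if __name__ == "__main__":
    # Seven hypothetical rows spread across three partitions.
    for n, bucket in enumerate(split_round_robin(list(range(7)), 3)):
        print(f"partition {n}: {bucket}")
```

Each bucket corresponds to one output file that the ForEach loop then copies into Cosmos DB, so each file needs to be small enough to stay under the 2 MB request limit.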

Hope this helps! Please let us know if the issue persists and we will be glad to assist.