Hi @Ryan Abbey ,

Thank you for posting your query on the Microsoft Q&A platform.

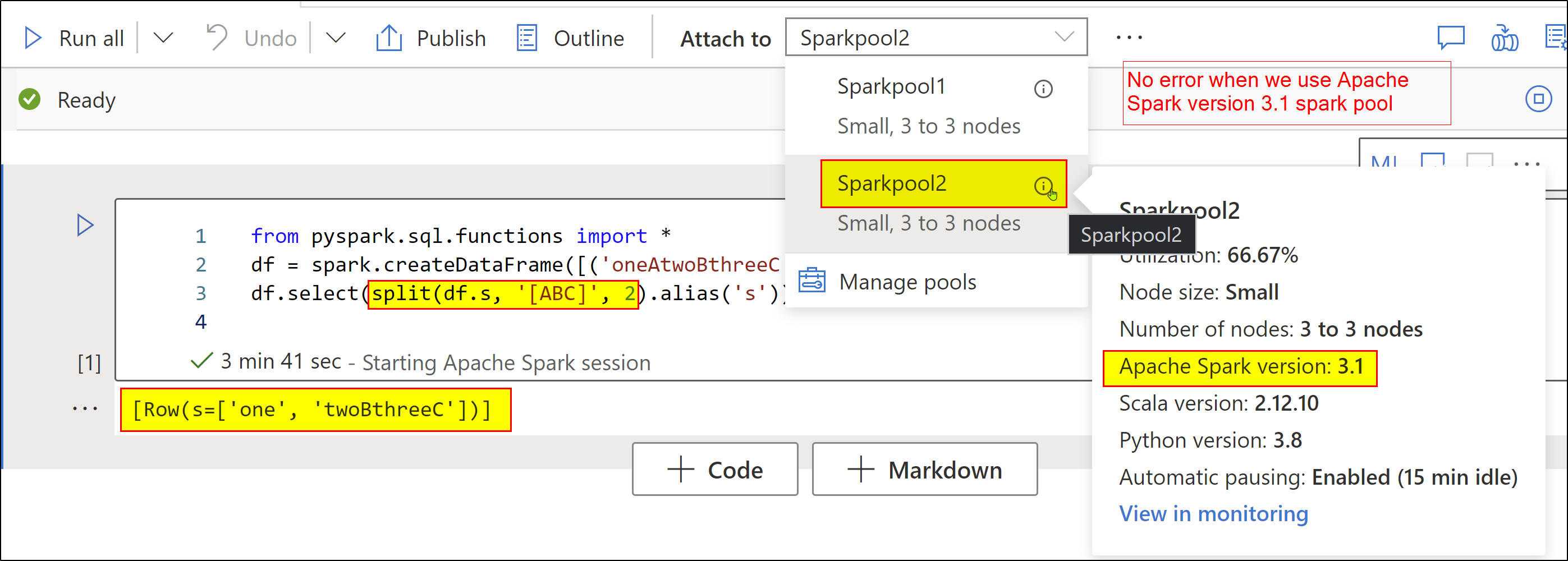

Consider using a Spark pool with Apache Spark version 3.1 to run your notebook. This runtime has a split() function that accepts all three parameters you mentioned. Please check the screenshot below.

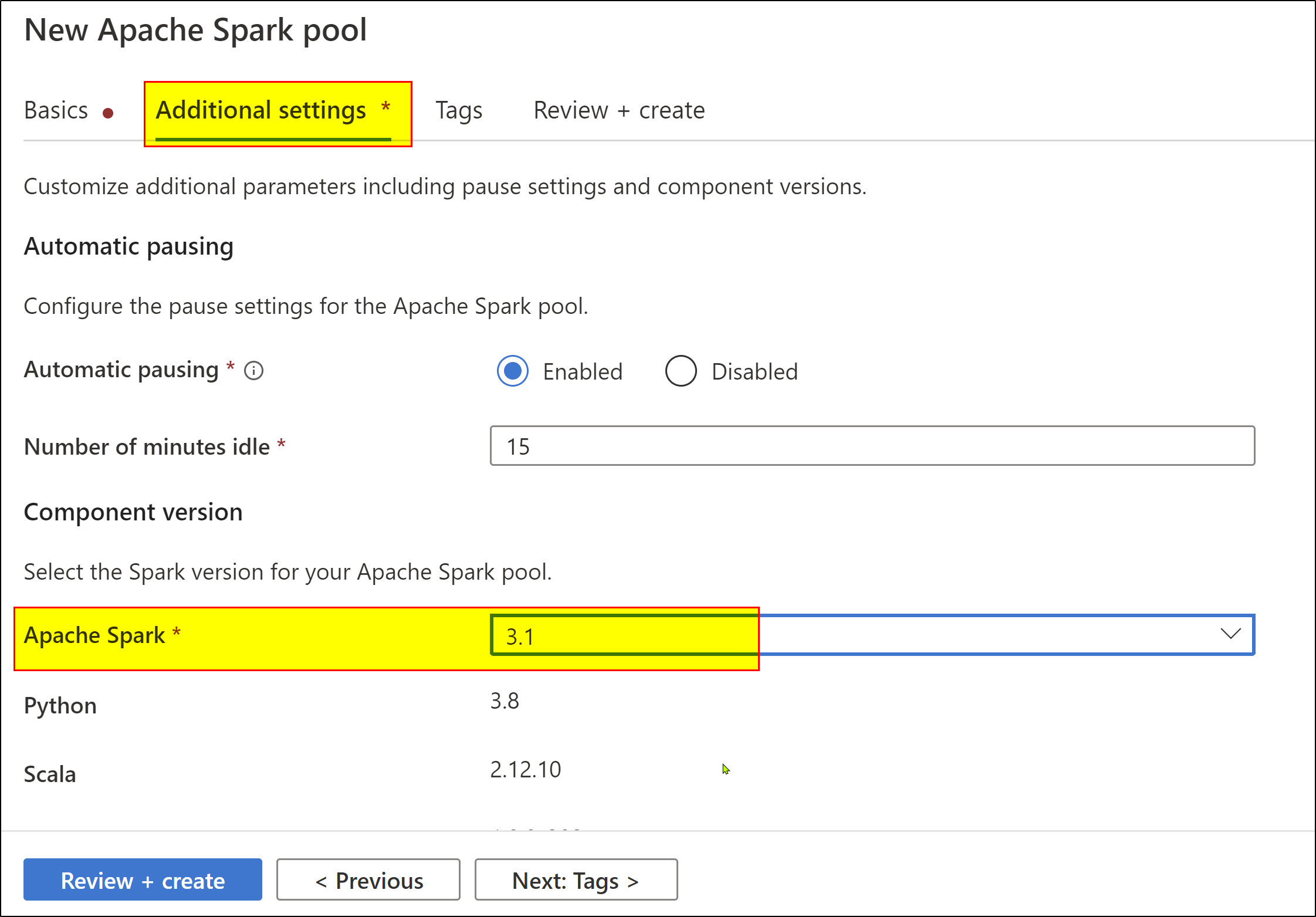
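For reference, here is a minimal PySpark sketch of the three-argument call. It assumes the third parameter in question is `limit`, which `pyspark.sql.functions.split` accepts from Spark 3.0 onwards. The helper names `supports_split_limit` and `demo`, the column name `value`, and the sample string are made up for illustration and are not from your notebook.

```python
# Sketch: three-argument split() in PySpark; the limit argument needs Spark 3.0+.
# Helper names, the column name "value", and the sample string are hypothetical.

def supports_split_limit(spark_version: str) -> bool:
    """Return True if this Spark version accepts split(str, pattern, limit)."""
    major = int(spark_version.split(".")[0])
    return major >= 3


def demo(spark):
    """Call from a notebook cell, passing the session object (usually `spark`)."""
    # Imported inside the function so the version helper works without pyspark.
    from pyspark.sql import functions as F

    if not supports_split_limit(spark.version):
        raise RuntimeError(
            f"Spark {spark.version} does not accept a limit argument for split()"
        )

    df = spark.createDataFrame([("a,b,c,d",)], ["value"])
    # limit=2 caps the result at two elements; the last element keeps the
    # remainder of the string unsplit.
    return df.select(F.split(F.col("value"), ",", 2).alias("parts"))
```

In a notebook attached to a Spark 3.1 pool, `demo(spark).show(truncate=False)` should display a single row whose `parts` array holds `a` and `b,c,d`. On a Spark 2.4 pool the helper raises an error instead, because split() there only takes the string and the pattern.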

While creating the Spark pool, make sure to select Apache Spark version 3.1 under Additional settings.

Hope this helps. Please let us know if you have any further queries.

Please consider hitting Accept Answer. Accepted answers help the community as well.