I'm trying to read a table created in Azure Synapse Analytics from Databricks. This is my configuration:

```python
# Service principal (OAuth) settings used by the ABFS driver
spark.conf.set("fs.azure.account.auth.type", "OAuth")
spark.conf.set("fs.azure.account.oauth.provider.type", "org.apache.hadoop.fs.azurebfs.oauth2.ClientCredsTokenProvider")
spark.conf.set("fs.azure.account.oauth2.client.id", "<<APPLICATION CLIENT ID>>")
spark.conf.set("fs.azure.account.oauth2.client.secret", "<<CLIENT SECRET VALUE>>")
spark.conf.set("fs.azure.account.oauth2.client.endpoint", "https://login.microsoftonline.com/<<DIRECTORY TENANT ID>>/oauth2/token")

# Defining a separate set of service principal credentials for Azure Synapse Analytics
# (if not defined, the connector will use the Azure storage account credentials)
spark.conf.set("spark.databricks.sqldw.jdbc.service.principal.client.id", "<<APPLICATION CLIENT ID>>")
spark.conf.set("spark.databricks.sqldw.jdbc.service.principal.client.secret", "<<CLIENT SECRET VALUE>>")

storageAccount = "<<STORAGE-NAME>>"
containerName = "<<CONTAINER-NAME>>"
accessKey = "<<KEY>>"
jdbcURI = "<<jdbc:sqlserver://...>>"
tableName = "<<TABLE-NAME>>"

# Storage account access key, registered for the Blob endpoint
spark.conf.set(f"fs.azure.account.key.{storageAccount}.blob.core.windows.net", accessKey)

tableDF = (
    spark.read.format("com.databricks.spark.sqldw")
    .option("url", jdbcURI)
    .option("tempDir", "abfss://******@WORKSPACE.dfs.core.windows.net/temp")
    .option("enableServicePrincipalAuth", "true")
    .option("dbTable", tableName)
    .load()
)

tableDF.createOrReplaceTempView("TBL")
```
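One detail in this configuration that may be worth double-checking: the access key is registered under `fs.azure.account.key.<account>.blob.core.windows.net`, but `tempDir` is an `abfss://` URI on `dfs.core.windows.net`, and as far as I know the ABFS driver looks the key up under the `dfs` form of that property name. Also, because `fs.azure.account.auth.type` is set to `OAuth` globally, ABFS would presumably authenticate `tempDir` with the service principal rather than any account key, so that principal would need access to the container. The `tempDir` host (`WORKSPACE`) also does not obviously match `storageAccount`. The sketch below uses a hypothetical helper, `account_key_conf_for` (not an Azure or Spark API), to derive the property name from a URI so the two forms can be compared; the account and container names in it are made up.

```python
from urllib.parse import urlparse


# Hypothetical helper (not an Azure or Spark API): build the per-account property
# name "fs.azure.account.key.<host>" from the host part of a storage URI.
def account_key_conf_for(uri: str) -> str:
    host = urlparse(uri).hostname  # drops the "<container>@" part and lowercases the host
    if not host:
        raise ValueError(f"no host in URI: {uri!r}")
    return f"fs.azure.account.key.{host}"


# Made-up names standing in for the real account and container
temp_dir = "abfss://mycontainer@mystorage.dfs.core.windows.net/temp"
configured = "fs.azure.account.key.mystorage.blob.core.windows.net"

needed = account_key_conf_for(temp_dir)
print(needed)                # fs.azure.account.key.mystorage.dfs.core.windows.net
print(needed == configured)  # False: blob endpoint vs dfs endpoint
```

If key-based access to `tempDir` is what's intended, the key would have to be set under the `dfs` name that the helper prints, and the global `OAuth` auth type would still take precedence unless it is overridden for that account.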

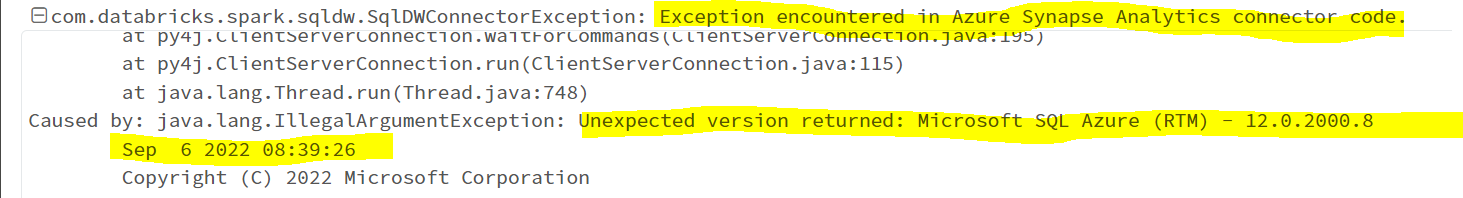

But when I run the code, I get this error: `com.databricks.spark.sqldw.SqlDWConnectorException: Exception encountered in Azure Synapse Analytics connector code`
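This message is a generic wrapper, and the specific reason is typically further down the Java stack trace as a `Caused by:` entry. A minimal sketch for printing that chain from the notebook, assuming the failure reaches Python as py4j's `Py4JJavaError` (whose `java_exception` attribute holds the Java throwable): `java_cause_chain` is a hypothetical helper that follows `getCause()`, and `_FakeJavaException` only stands in for a py4j `JavaObject` so the snippet runs without Spark. The example messages are placeholders, not real connector output.

```python
# Hypothetical helper (not a Spark or py4j API): collect the message of a Java
# exception and of each of its causes by following getCause() until it returns null.
def java_cause_chain(java_exc, max_depth=20):
    messages = []
    current = java_exc
    # py4j maps Java null to None; max_depth guards against unexpectedly long chains
    while current is not None and len(messages) < max_depth:
        messages.append(current.toString())
        current = current.getCause()
    return messages


# Stand-in for a py4j JavaObject so this sketch runs without Spark (illustration only).
class _FakeJavaException:
    def __init__(self, text, cause=None):
        self._text = text
        self._cause = cause

    def toString(self):
        return self._text

    def getCause(self):
        return self._cause


inner = _FakeJavaException("java.sql.SQLException: example root cause")
outer = _FakeJavaException("com.databricks.spark.sqldw.SqlDWConnectorException: example wrapper", inner)
chain = java_cause_chain(outer)
for msg in chain:
    print(msg)

# In the notebook, assuming the error surfaces as py4j's Py4JJavaError:
# from py4j.protocol import Py4JJavaError
# try:
#     tableDF = (spark.read.format("com.databricks.spark.sqldw") ... .load())  # same options as above
# except Py4JJavaError as e:
#     for msg in java_cause_chain(e.java_exception):
#         print(msg)
```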

Are there any settings I am missing?