Thank you for posting your query on the Microsoft Q&A platform.

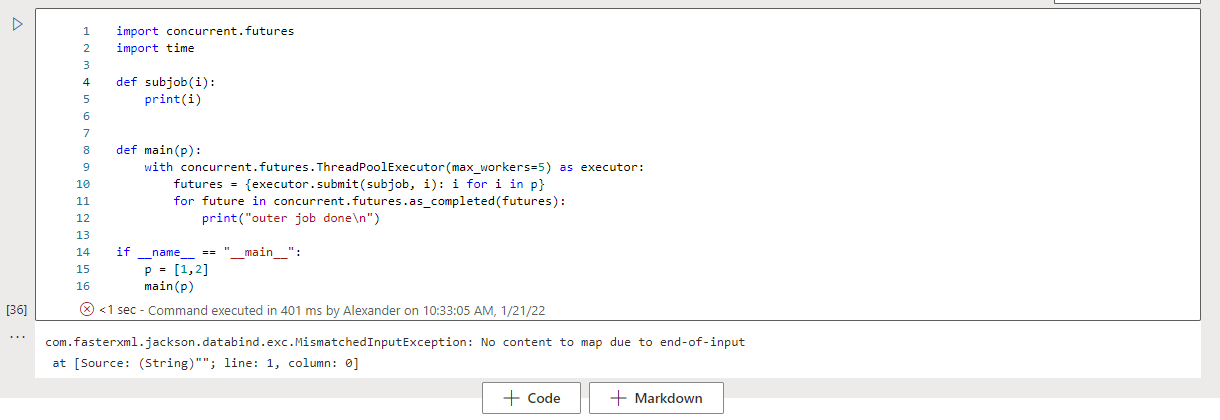

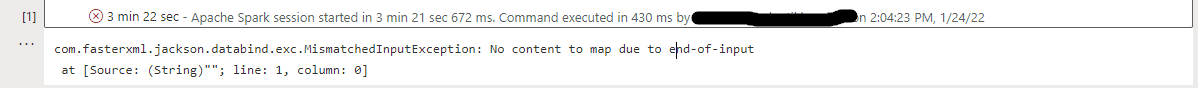

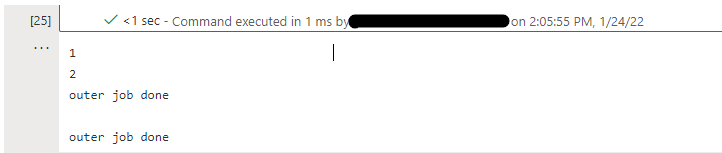

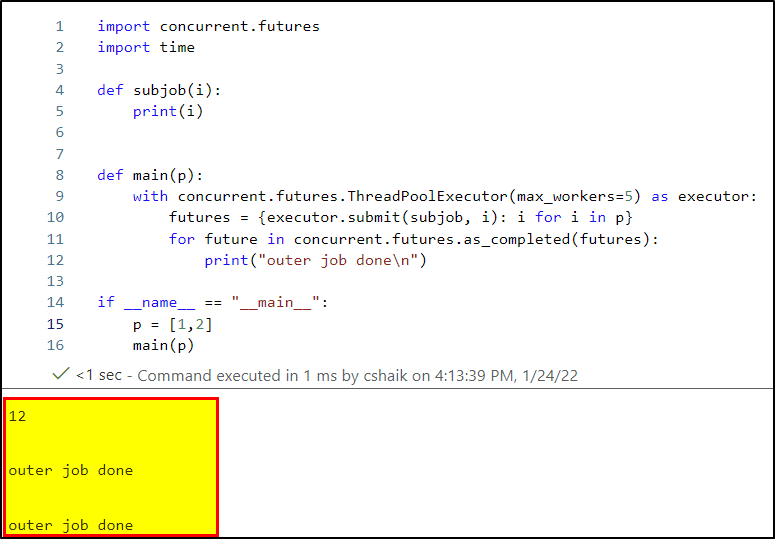

I used the same code that you shared, and in my case I am not seeing any errors. Please check the screenshots below.

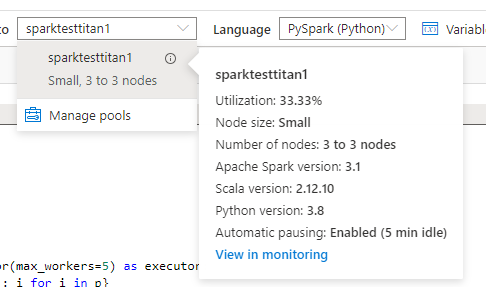

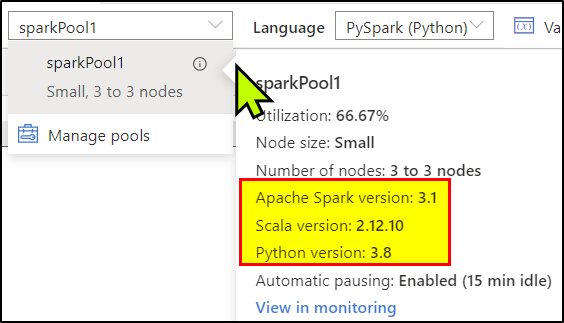

Please check my Spark pool configurations.

Could you please cross-check your Spark pool configurations against the above and see if that helps?

Hope this helps. Please let us know if you have any further queries.

---------------

Please consider hitting Accept Answer. Accepted answers help the community as well.