Hi @Bajaj, Smita,

Thank you for posting your query on the Microsoft Q&A platform.

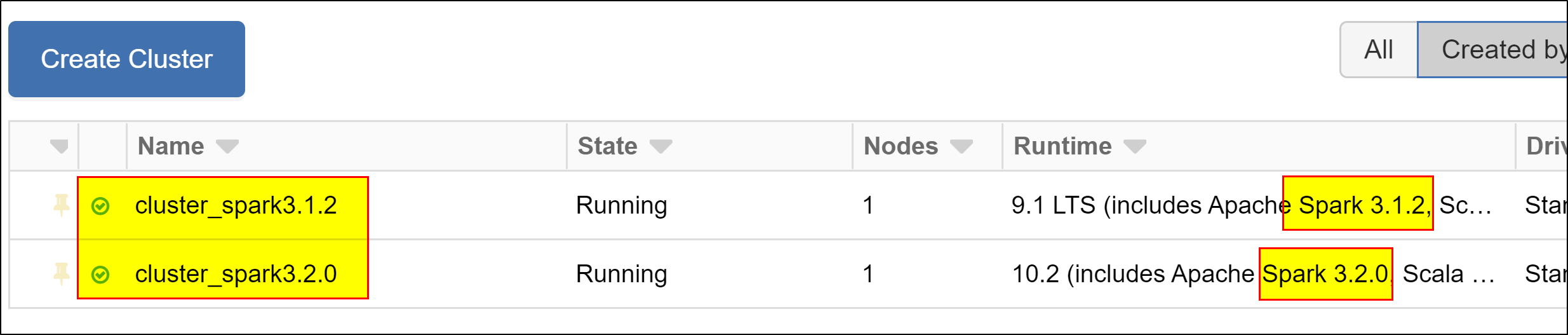

I created two clusters in Azure Databricks: one with Spark version 3.1.2 and another with Spark version 3.2.0.

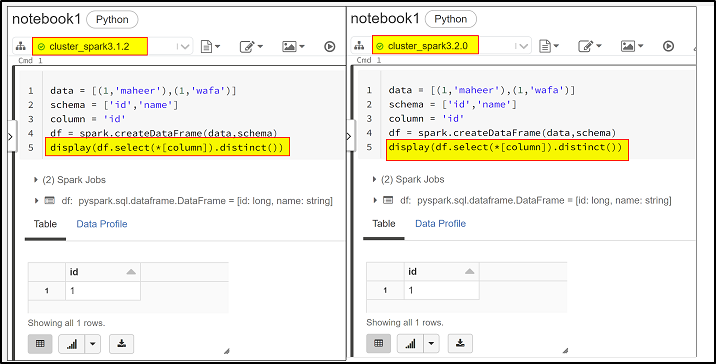

I created a sample notebook with Python as the default language and added sample code that uses the select() and distinct() functions. I ran the notebook on both clusters (Spark 3.1.2 and Spark 3.2.0), and everything works as expected for me. Kindly check the screenshot.

If this does not match your issue, could you please share your full code so that I can try the same and help?

Hope this helps. Please let us know if you have any further queries.

---------------

Please consider clicking the Accept Answer button and upvoting if this helped. Accepted answers help the community as well. And if you have any further queries, do let us know.