Unfortunately, there's no guaranteed maximum ratio of the amount of RAM required to process a Tabular Model to its processed size. The biggest variable are calculated tables and columns. Depending on the DAX and the data model, the engine could end up with a query plan with a virtually unlimited interim materialization requirement.

Note that even the rule of thumb, that Vertipaq boasts a 10:1 compression ratio, puts your 40GB compressed model at 400GB uncompressed. While I'm not saying the engine requires to store the full uncompressed model in RAM at some point during processing, this is still evidence that your 200GB are not the overkill you feel they must be.

Facing memory issues with the Azure analysis services

Hi All,

We are facing memory related issues with azure analysis server while processing the data. The total cube size is around 40 GB and we were having the S4 tier (100 GB memory) then we increased it to S8 which have 200GB memory but still we fact memory issue. Can anyone explain what is the ratio of utilizing for its internal operations versus the data that it holds in memory. What i feel is that for 40 GB of olap db S8 should be more than enough.

Thanks in advance

Azure Analysis Services

SQL Server Analysis Services

4 answers

Sort by: Most helpful

-

Alexei Stoyanovsky 3,416 Reputation points

2022-01-29T12:42:24.037+00:00 -

Nandan Hegde 36,716 Reputation points MVP Volunteer Moderator

Nandan Hegde 36,716 Reputation points MVP Volunteer Moderator2022-01-28T09:08:58.38+00:00 Hey,

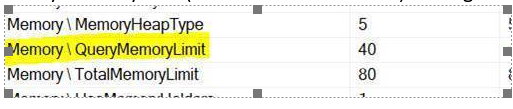

Can you please share the exact error message because there are some other properties as well at server level :

The issue can be wherein 1 of the query might be failing because it requires lets say 30GB out of 100 GB but due to that property being 20%, it might just get 20GB thereby creating an issue

-

Joyzhao-MSFT 15,646 Reputation points

Joyzhao-MSFT 15,646 Reputation points2022-01-31T05:02:44.337+00:00 Hi @Amit Tomar ,

Did you get any errors in the memory configuration?

Analysis Services pre-allocates a modest amount of memory at startup so requests can be handled immediately. Additional memory is allocated as query and processing workloads increase. By specifying configuration settings, you can control the thresholds at which memory is released. For example, the HardMemoryLimit setting specifies a self-imposed out-of-memory condition (by default, this threshold is not enabled), where new requests are rejected outright until more resources become available.

For more information, please refer to: Memory properties.

Best Regards,

Joy

If the answer is the right solution, please click "Accept Answer" and kindly upvote it. If you have extra questions about this answer, please click "Comment".

Note: Please follow the steps in our documentation to enable e-mail notifications if you want to receive the related email notification for this thread. -

Amit Tomar 1 Reputation point

2022-02-07T03:26:51.753+00:00 Hi All,

Sorry I was bit busy with my deliverables, below is the error which usually we get

"messages": [

{

"message": "Retry attempts for failures while executing the refresh exceeded the retry limit set on the request.",

"type": "error"

},

{

"code": "0xC11C0005",

"message": "You have reached the maximum allowable memory allocation for your tier. Consider upgrading to a tier with more available memory.",

"type": "error"

}Thanks