Hello, thank you for reaching out to us.

I think you are asking about two scenarios:

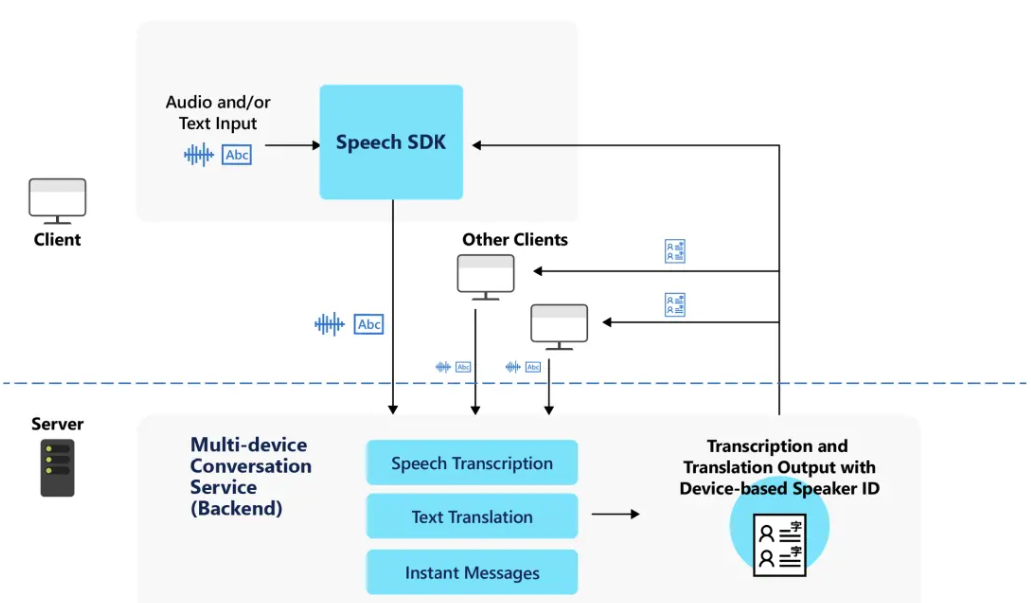

- Multi-device conversation: https://learn.microsoft.com/en-us/azure/cognitive-services/speech-service/multi-device-conversation

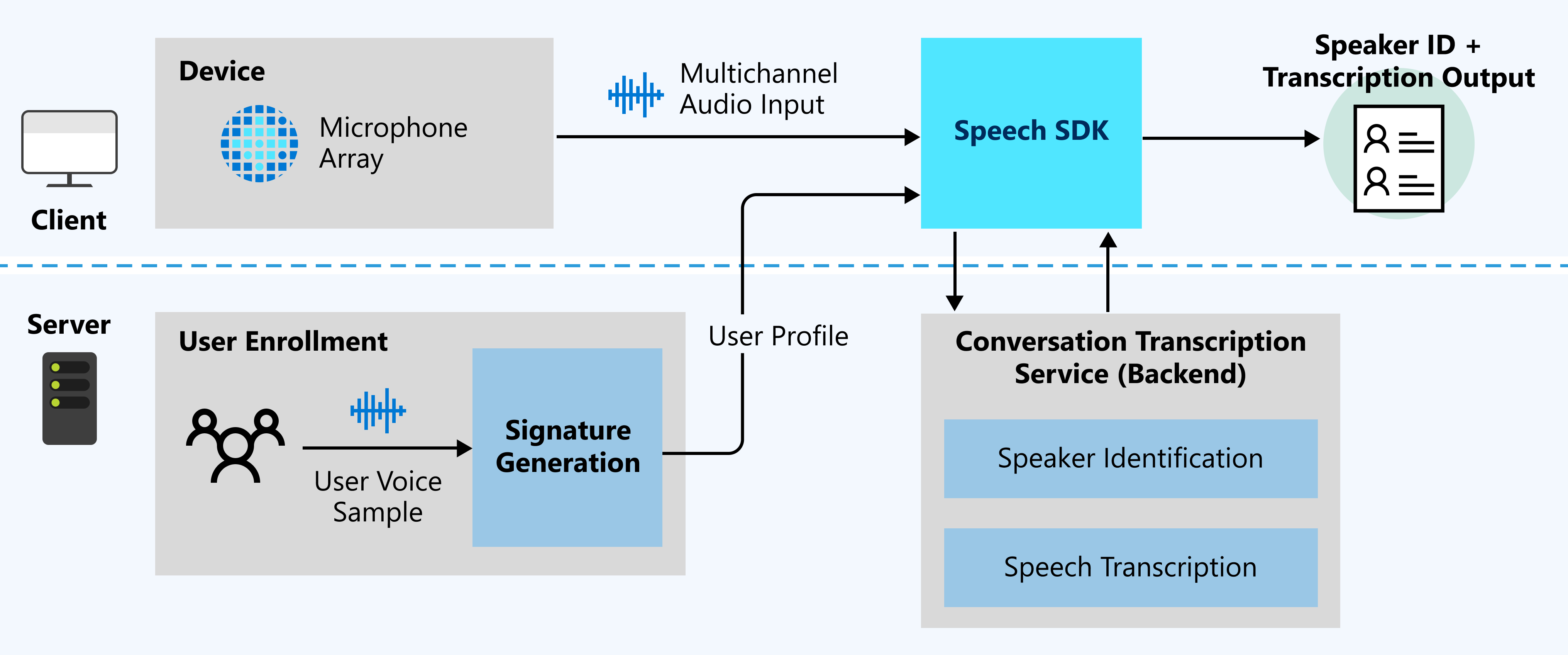

- Real-time conversation transcription: https://learn.microsoft.com/en-us/azure/cognitive-services/speech-service/how-to-use-conversation-transcription?pivots=programming-language-javascript

Please correct me if I have misunderstood, since the first link you shared is the documentation for the general Speech-to-Text service.

In the Multi-device conversation feature, every participant joins the conversation by using its conversation ID. This feature uses the default Speech service model.

Real-time conversation transcription can create voice signatures for the conversation participants so that they can be identified as unique speakers. This step is optional if you don't want to pre-enroll users.

Both features use the default Speech service models, but real-time conversation transcription has an additional enhancement that may improve the results.

If you want to train a model on your own dataset, I think Custom Speech is what you are looking for. With Custom Speech, you can train and deploy your own model using your dataset.

https://learn.microsoft.com/en-us/azure/cognitive-services/speech-service/custom-speech-overview

Hope this helps! Please let us know if you have more questions.

If you found this answer helpful, please kindly accept it. Thank you.

Regards,

Yutong