I have 4 data flows that need the same transformation steps, from JSON to Parquet.

I get a new dataset for each flow once every hour. Each set contains 8,000 to 13,000 rows. These new files land in a "hot" folder. I want to pick up these files and append them to the Parquet sink instead of running a full load with the "Clear the folder" option.

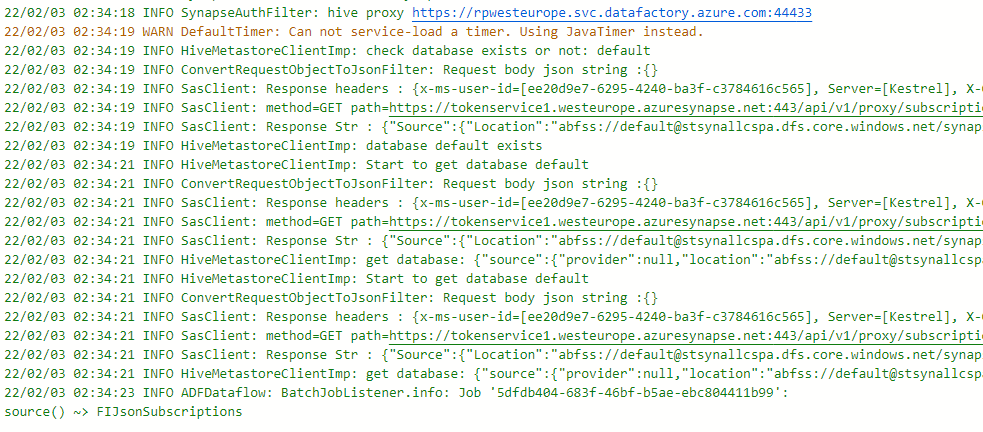

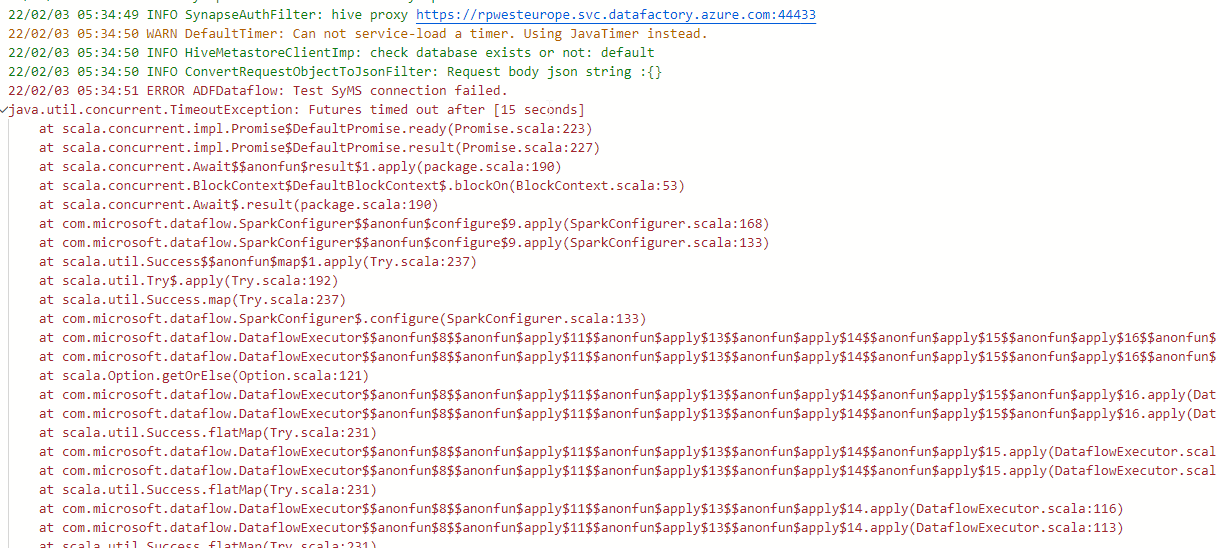

The initial load succeeded, and so did several incremental loads, but after a while the incremental loads start failing intermittently. The failing data flows all report the same error:

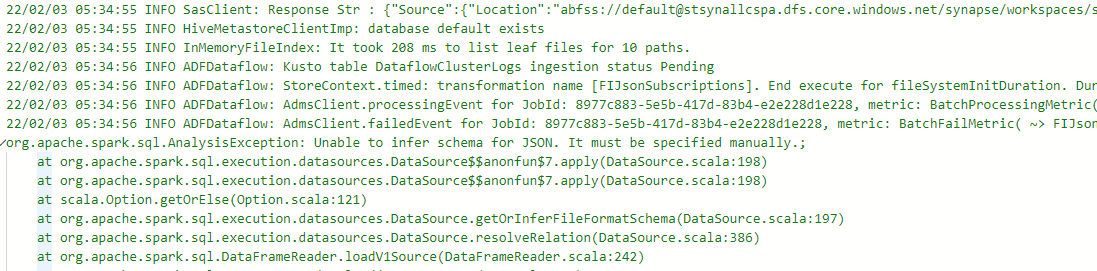

```
{"message":"Job failed due to reason: at Source 'Flow3JsonSubscriptions': org.apache.spark.sql.AnalysisException: Unable to infer schema for JSON. It must be specified manually.;. Details:org.apache.spark.sql.AnalysisException: Unable to infer schema for JSON. It must be specified manually.;\n\tat org.apache.spark.sql.execution.datasources.DataSource$$anonfun$7.apply(DataSource.scala:198)\n\tat org.apache.spark.sql.execution.datasources.DataSource$$anonfun$7.apply(DataSource.scala:198)\n\tat scala.Option.getOrElse(Option.scala:121)\n\tat org.apache.spark.sql.execution.datasources.DataSource.getOrInferFileFormatSchema(DataSource.scala:197)\n\tat org.apache.spark.sql.execution.datasources.DataSource.resolveRelation(DataSource.scala:386)\n\tat org.apache.spark.sql.DataFrameReader.loadV1Source(DataFrameReader.scala:242)\n\tat org.apache.spark.sql.DataFrameReader.load(DataFrameReader.scala:231)\n\tat com.microsoft.dataflow.transformers.formats.JSONFileReader$$anonfun$read$2$$anonfun$apply$2$$anonfun$apply$4$$anonfun$apply$5.apply(JSONFileReader.scala:41)\n\tat com.microsoft.dataflow.transformers.formats.JSONFileReader$$anonfun$read$2$$anonfun$apply$2$$anonfun$apply$4$$anonfun$apply$5.apply(JSONFileReader.scala:32)\n\t","failureType":"UserError","target":"Get all subscriptions","errorCode":"DFExecutorUserError"}
```
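One lead worth checking, though I have not confirmed it for this setup: in Spark 2.x the stack trace above (`DataSource.getOrInferFileFormatSchema`) throws this exception when JSON schema inference returns nothing, which typically happens when the source path matched no files at all. So a first debugging step is to look at what the "hot" folder actually contains at the moment a run fails. The sketch below assumes you can copy or mount the folder contents locally. `check_hot_folder`, `parses_as_json`, and the report buckets are hypothetical helpers, not part of Azure Data Factory.

```python
import json
import sys
from pathlib import Path


def check_hot_folder(folder, pattern="*.json"):
    """Summarize what a wildcard source over `folder` would pick up.

    Buckets (by file name):
      matched - every file the pattern matches
      hidden  - names starting with '_' or '.', which Spark's file listing typically skips
      empty   - zero-byte or whitespace-only files
      invalid - not parseable as one JSON document or as JSON Lines
    """
    matched = sorted(p for p in Path(folder).glob(pattern) if p.is_file())
    report = {"matched": [p.name for p in matched], "hidden": [], "empty": [], "invalid": []}
    for path in matched:
        if path.name.startswith(("_", ".")):
            report["hidden"].append(path.name)
            continue
        try:
            # utf-8-sig tolerates a leading byte-order mark.
            text = path.read_bytes().decode("utf-8-sig")
        except UnicodeDecodeError:
            # Not valid UTF-8, e.g. a truncated or partially written file.
            report["invalid"].append(path.name)
            continue
        if not text.strip():
            report["empty"].append(path.name)
        elif not parses_as_json(text):
            report["invalid"].append(path.name)
    return report


def parses_as_json(text):
    """True if `text` is a single JSON document or valid JSON Lines."""
    try:
        json.loads(text)
        return True
    except json.JSONDecodeError:
        pass
    try:
        # Fall back to one JSON document per non-blank line.
        for line in text.splitlines():
            if line.strip():
                json.loads(line)
        return True
    except json.JSONDecodeError:
        return False


if __name__ == "__main__":
    result = check_hot_folder(sys.argv[1] if len(sys.argv) > 1 else ".")
    unreadable = set(result["hidden"]) | set(result["empty"]) | set(result["invalid"])
    if not set(result["matched"]) - unreadable:
        print("No readable JSON files: likely nothing to infer a schema from.")
    print(json.dumps(result, indent=2))
```

Running it against a snapshot of the folder taken right after a failed run would help separate the two explanations: an empty `matched` list (files not yet landed, or already moved by a previous run) points at timing, while files in `empty` or `invalid` point at partially written or malformed input.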

Taking the transformations into account, I see no differences in schema between the Parquet sink and the JSON source. Resetting the schemas does not change anything; the flows still fail intermittently.
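To compare schemas at the level of the individual hourly files rather than the dataset projection, one option is to flatten each file into dotted field paths with the JSON value types seen at each path, then diff every file against a baseline. This is a sketch under assumptions: each file holds a single JSON document (not JSON Lines), and `field_types`, `diff_signatures`, and `compare_files` are hypothetical helpers.

```python
import json
import sys
from pathlib import Path


def field_types(value, prefix="", out=None):
    """Flatten parsed JSON into {dotted.path: {type names seen}}.

    Array elements share one path marked with '[]'; empty objects and
    arrays contribute no paths.
    """
    out = {} if out is None else out
    if isinstance(value, dict):
        for key, child in value.items():
            field_types(child, f"{prefix}.{key}" if prefix else key, out)
    elif isinstance(value, list):
        for item in value:
            field_types(item, prefix + "[]", out)
    else:
        # Leaf value: record its Python type name (int, str, NoneType, ...).
        out.setdefault(prefix, set()).add(type(value).__name__)
    return out


def diff_signatures(signatures):
    """Compare each file's signature with the first one (the baseline)."""
    names = list(signatures)
    if not names:
        return {}
    base = signatures[names[0]]
    report = {}
    for name in names[1:]:
        sig = signatures[name]
        report[name] = {
            "missing": sorted(set(base) - set(sig)),
            "extra": sorted(set(sig) - set(base)),
            "type_changed": sorted(p for p in set(base) & set(sig) if base[p] != sig[p]),
        }
    return report


def compare_files(paths):
    """Assumes each file holds exactly one JSON document."""
    signatures = {}
    for p in paths:
        doc = json.loads(Path(p).read_text(encoding="utf-8-sig"))
        signatures[Path(p).name] = field_types(doc)
    return diff_signatures(signatures)


if __name__ == "__main__":
    print(json.dumps(compare_files(sys.argv[1:]), indent=2))
```

Passing the files in arrival order makes the oldest batch the baseline; a path listed under `missing`, or one whose types flip (for example from `int` to `str`), is worth checking against the data flow's source projection.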

How can I debug this further?