Hi @Jos Neutel ,

Thank you for posting query in Microsoft Q&A Platform and thanks for helping on clarifications.

As I understand ask here, you are trying to load your json data in to parquet file but making sure to create a new key called channelnumber on existing json. Correct me if it is wrong.

You can do that, by looping through your input json array to take value and then inside the loop use copy activity. Under source of copy activity use some SQL resource dataset so that you can make use of power of SQL engine to convert your json in to desired format and then load that in to some table in DB. Finally once all data loads to DB, use another copy activity outside of loop to take that data and load as parquet in final destination.

Please check below screenshots and details to make sense above suggested implementation.

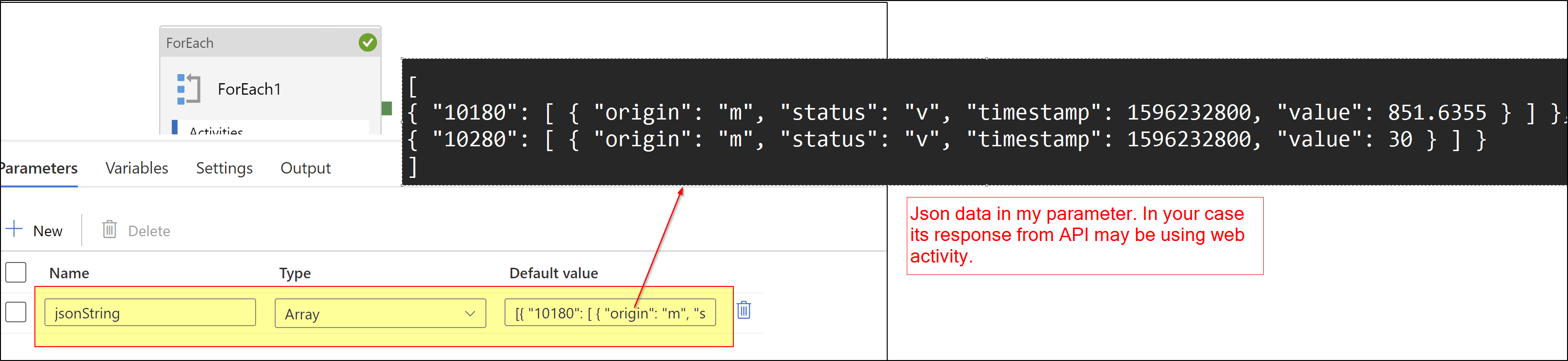

In below example I am passing your input json as parameter value. In your case its from REST API.

Step1: Pipeline parameter(array type) which holds input json array.

Step2: Pass step1 parameter to Foreach activity to loop through on each item.

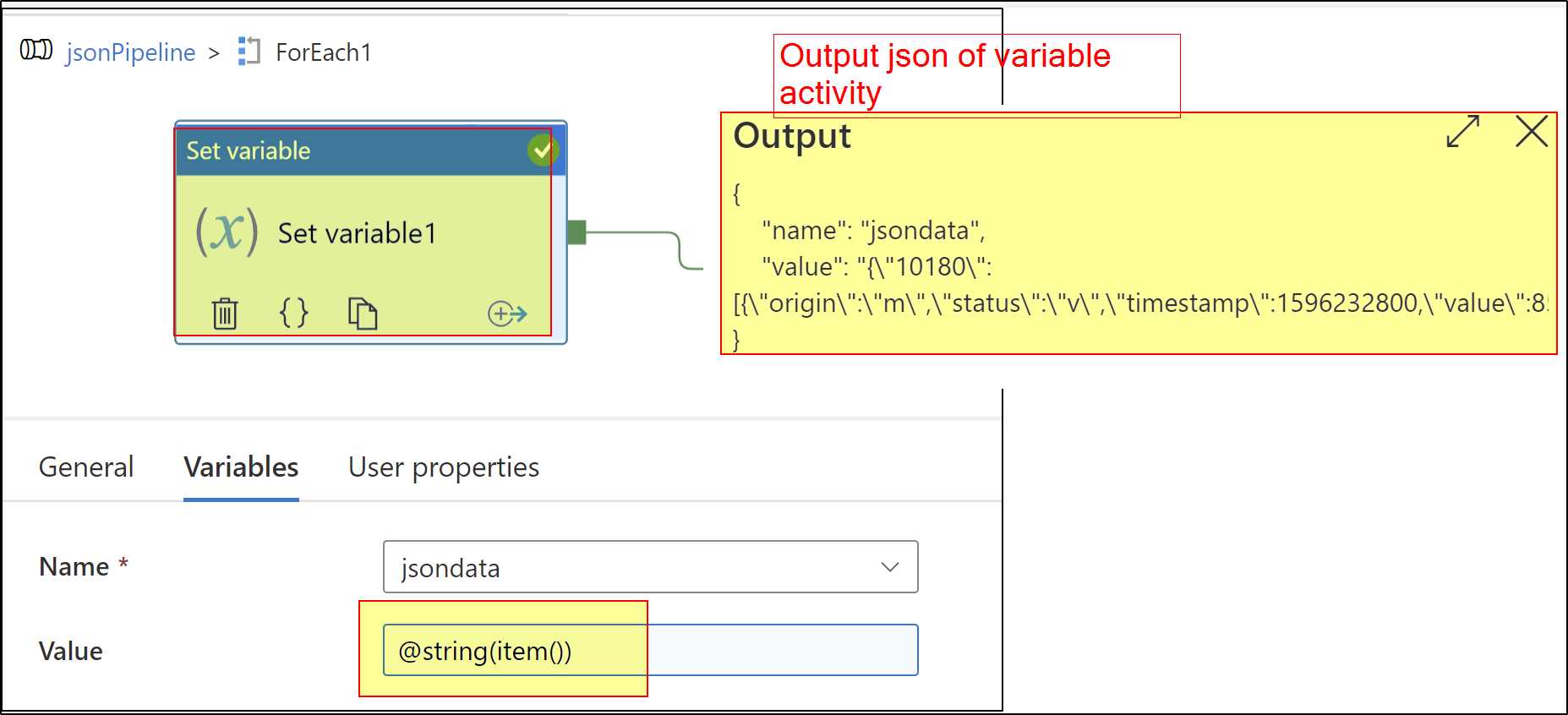

Step3: Inside Foreach activity, Take First item for json array in to variable.

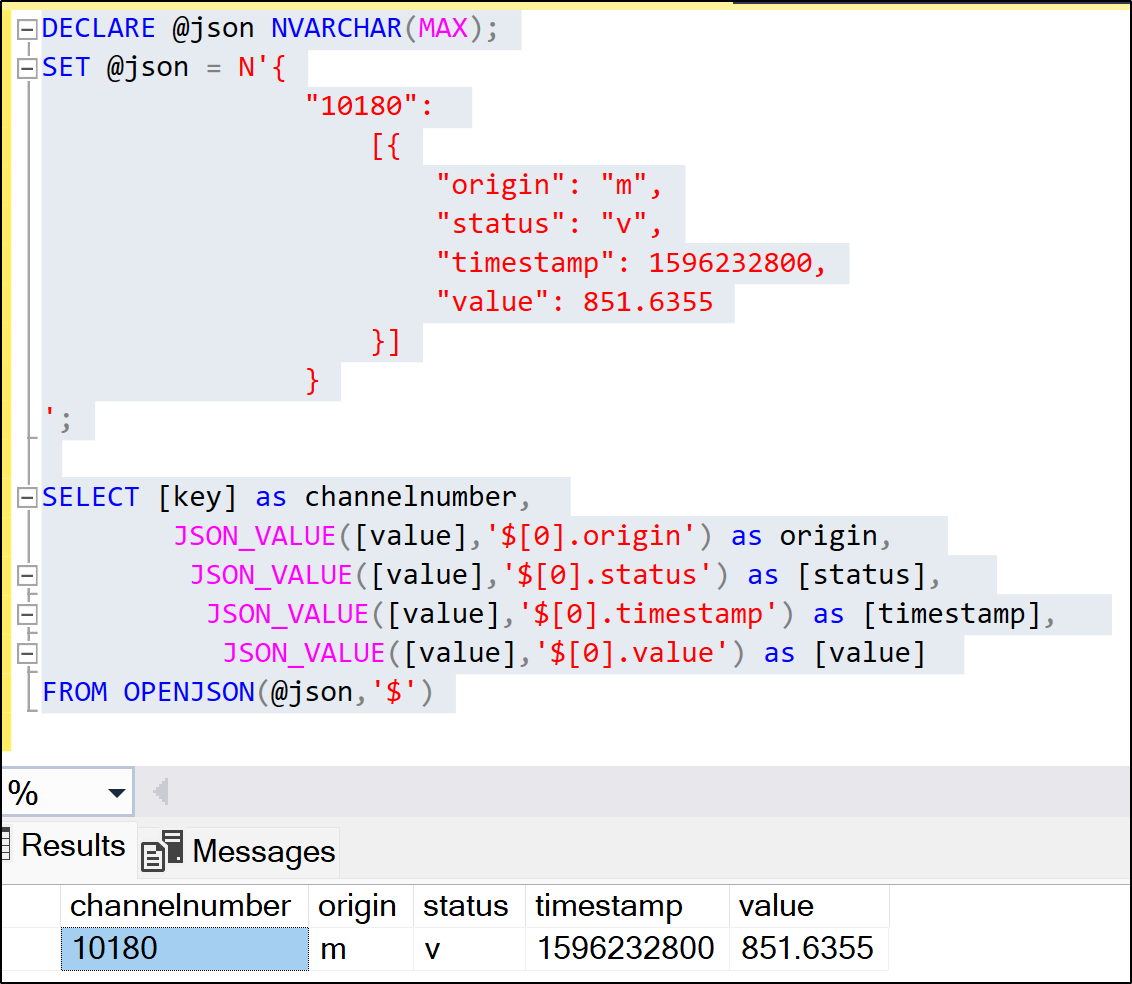

Step4: Inside Foreach activity, Copy activity. In copy activity under source use some SQL dataset and use query option. Write query that transform json data as we needed.

Code used in above screenshot.

DECLARE @json NVARCHAR(MAX);

SET @json = N'@{variables('jsondata')}';

SELECT [key] as channelnumber,

JSON_VALUE([value],'$[0].origin') as origin,

JSON_VALUE([value],'$[0].status') as [status],

JSON_VALUE([value],'$[0].timestamp') as [timestamp],

JSON_VALUE([value],'$[0].value') as [value]

FROM OPENJSON(@json,'$')

Please check below screenshot where similar query I ran in SQL for your reference.

Hope this helps. Please let us know if any further queries.

-------------

Please consider hitting Accept Answer button. Accepted answers helps community as well.