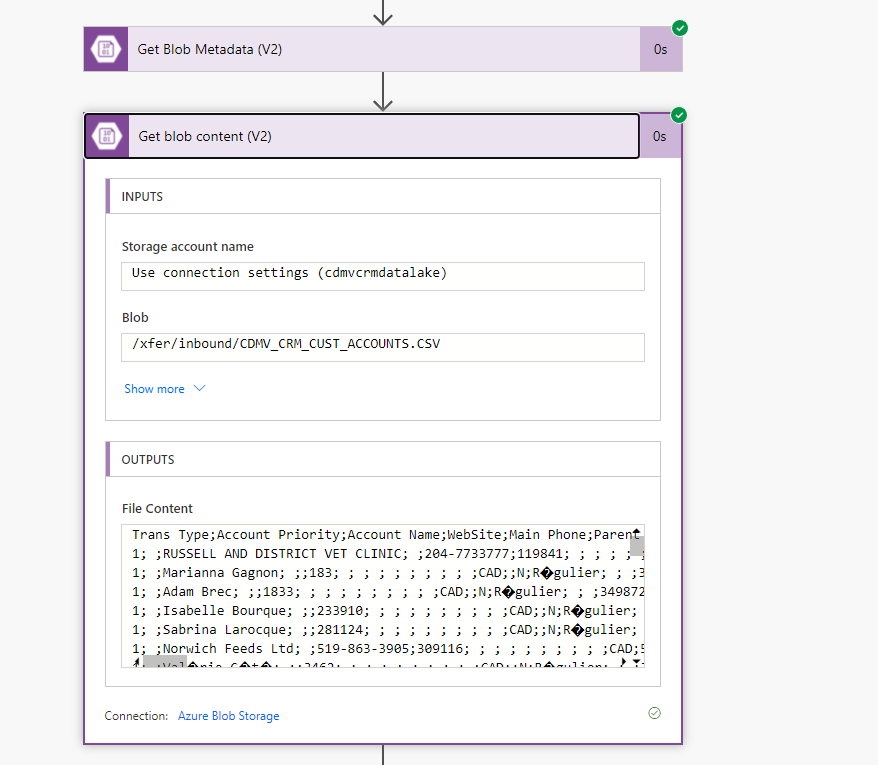

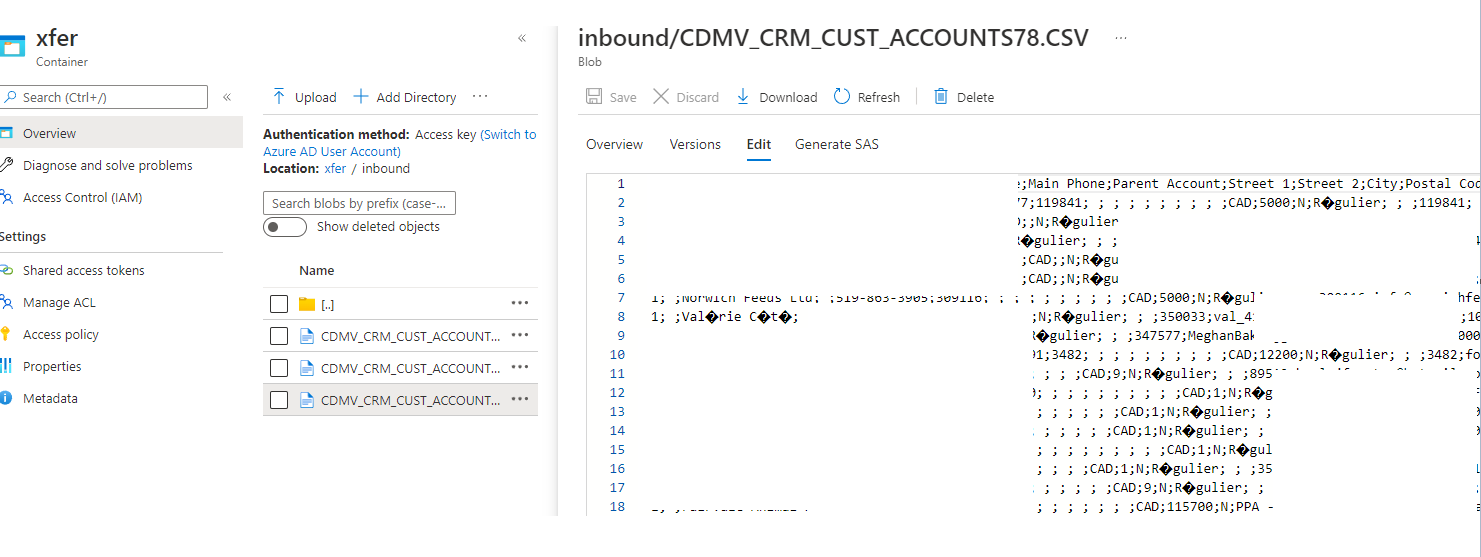

I think you will have to reach out to the client team and ask them to investigate, use the correct encoding, and write the files to Blob storage again. I don't see any way to read this file correctly, because its special characters are already corrupted in the file itself.
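To illustrate why a file like this usually can't be repaired on the reading side, here is a minimal Python sketch. The sample string, the `count_replacement_chars` helper, and the choice of encodings are assumptions for illustration only; I don't know which encoding the client actually used. It shows that once a writer has substituted a placeholder for characters it couldn't encode, the original characters are gone, and it gives a quick way to check whether a file's bytes are valid UTF-8.

```python
# Hypothetical sample text; the real file contents are not known here.
original = "Müller, Straße"

# Lossy write: the target encoding cannot represent "ü" or "ß",
# so the writer replaces each of them with "?".
lossy = original.encode("ascii", errors="replace")
# Both special characters are now the same byte, so no decoder
# can tell which character each "?" used to be.

def count_replacement_chars(data: bytes, encoding: str = "utf-8") -> int:
    """Count bytes sequences that cannot be decoded with `encoding`.

    Undecodable input is mapped to U+FFFD by errors="replace".
    """
    text = data.decode(encoding, errors="replace")
    return text.count("\ufffd")

good_bytes = original.encode("utf-8")        # written correctly
mismatched_bytes = original.encode("latin-1")  # written as Latin-1, read as UTF-8

print(lossy)
print(count_replacement_chars(good_bytes))
print(count_replacement_chars(mismatched_bytes))
```

If the count is non-zero only because of an encoding mismatch (the Latin-1 case above), reading the file with the right encoding may still work. If the placeholders are already stored in the file (the `lossy` case), the data has to be regenerated at the source.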

Somewhat related thread: https://stackoverflow.com/questions/61331729/problem-writing-to-csv-german-characters-in-spark-with-utf-8-encoding
