I created and published a simple pipeline with the code below:

    from azureml.core import Workspace, Dataset
    from azureml.core.runconfig import RunConfiguration
    from azureml.pipeline.steps import PythonScriptStep
    from azureml.core.compute import ComputeTarget
    from azureml.pipeline.core import Pipeline

    ws = Workspace.from_config()

    compute_target = ComputeTarget(workspace=ws, name='DS3-v2-standard-cpu')
    compute_target.wait_for_completion(show_output=True)

    aml_run_config = RunConfiguration()
    aml_run_config.target = compute_target

    da_rolled = Dataset.get_by_name(ws, 'da_rolled', version='latest')

    step1 = PythonScriptStep(
        name="Step1",
        script_name="test.py",
        source_directory="./",
        inputs=[da_rolled.as_named_input('da_rolled')],
        compute_target=compute_target,
        runconfig=aml_run_config,
        allow_reuse=False
    )

    pipeline = Pipeline(workspace=ws, steps=[[step1]])

    published_pipeline = pipeline.publish(name="TestPipeline")

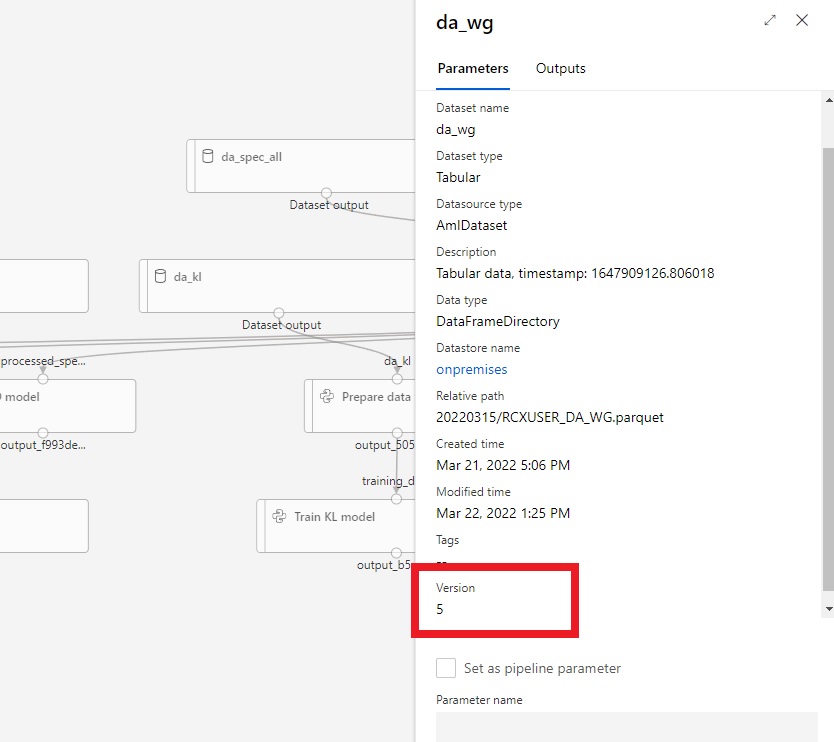

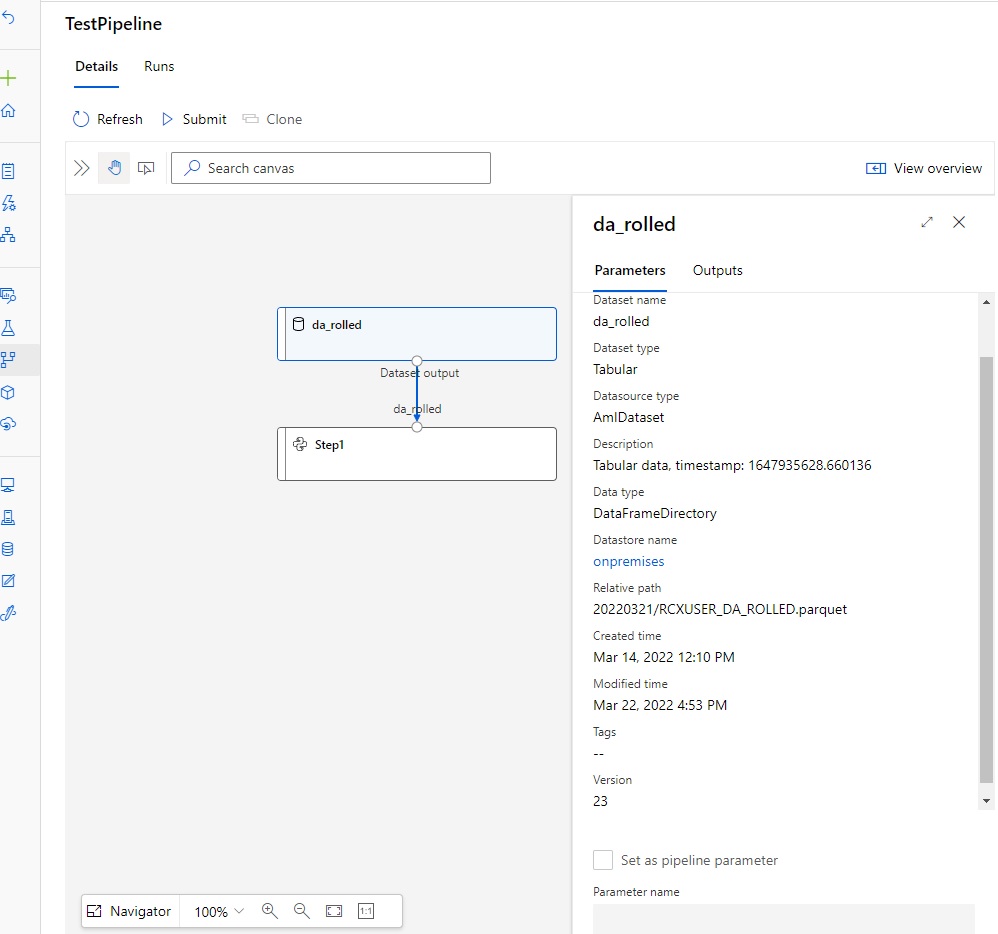

You can see in the image below that the dataset version (23, which was the latest version when the pipeline was published) is hardcoded in the pipeline definition.

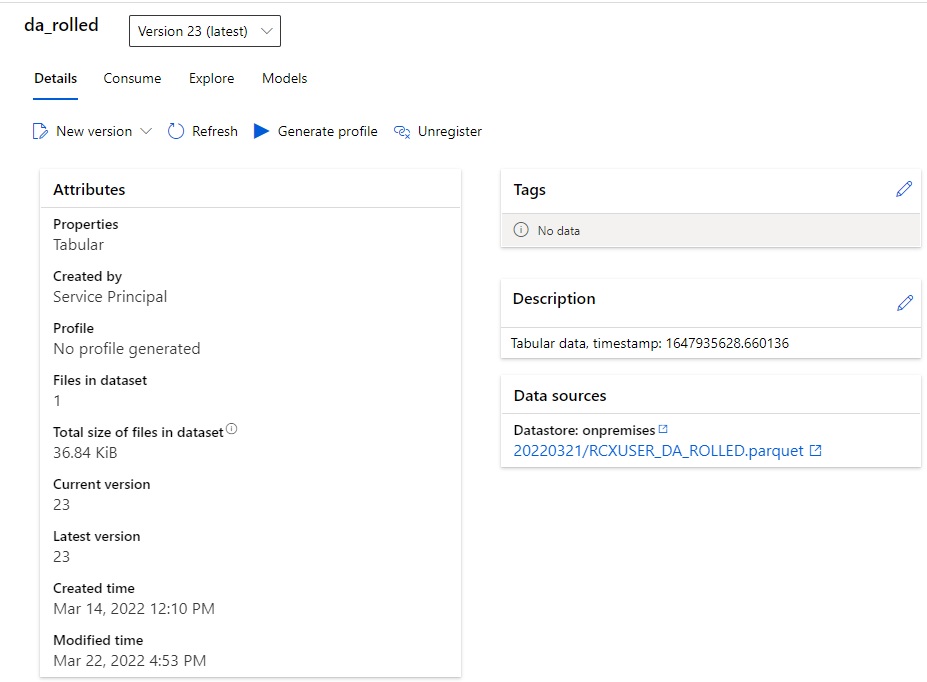

And this is my dataset.

Now if I run my Data Factory pipeline to update the dataset to a new version (which will make it version 24), the version in the pipeline definition will still be 23.

It seems like I need to republish the pipeline every time the dataset is updated.
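
To make the behaviour I am describing concrete, here is a toy model in plain Python. It makes no Azure ML calls: `ToyRegistry`, `ToyPublishedPipeline`, and `publish` are hypothetical names, and the model only mirrors what I observe (the version appears to be resolved once, at publish time). It is not a claim about how the SDK is implemented internally.

```python
from dataclasses import dataclass, field


@dataclass
class ToyRegistry:
    """Hypothetical stand-in for the workspace's dataset registry."""
    versions: dict = field(default_factory=dict)

    def register_new_version(self, name: str) -> int:
        # Mimics an external job (e.g. Data Factory) registering a new version.
        self.versions[name] = self.versions.get(name, 0) + 1
        return self.versions[name]

    def resolve_latest(self, name: str) -> int:
        # Mimics asking for version='latest': returns the current number.
        return self.versions[name]


@dataclass(frozen=True)
class ToyPublishedPipeline:
    """Hypothetical published definition: the version is frozen inside it."""
    dataset_name: str
    pinned_version: int


def publish(registry: ToyRegistry, dataset_name: str) -> ToyPublishedPipeline:
    # 'latest' is resolved exactly once, here, and the result is stored.
    return ToyPublishedPipeline(dataset_name, registry.resolve_latest(dataset_name))


registry = ToyRegistry(versions={"da_rolled": 23})
published = publish(registry, "da_rolled")      # pins version 23

registry.register_new_version("da_rolled")      # dataset is now at version 24

republished = publish(registry, "da_rolled")    # only a republish sees 24

print(published.pinned_version)                 # 23
print(registry.resolve_latest("da_rolled"))     # 24
print(republished.pinned_version)               # 24
```

In this model the already-published object keeps pointing at version 23 after the dataset moves to 24, which matches what I see in the published pipeline definition.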