Hello and thanks for your answer.

I solved my problem, and here is how.

I was trying to read the value of a PipelineParameter (containing the date on which the pipeline was triggered by Data Factory) in my Azure ML pipeline definition script, so that I could use it in the destination name of my OutputFileDatasetConfig object. Basically, I wanted the destination name to be something like 'output_model/20220328', where '20220328' is the value of the PipelineParameter.

But it seems impossible to read the value of a PipelineParameter outside of a PythonScriptStep.

To solve this, I first create my OutputFileDatasetConfig without specifying the full destination path: I specify only 'output_model' in the path. Then I get a reference to the PipelineParameter (at this point I still don't know its value).

    from azureml.data import OutputFileDatasetConfig

    model_output_config = OutputFileDatasetConfig(destination=(def_data_store, 'output_model'))

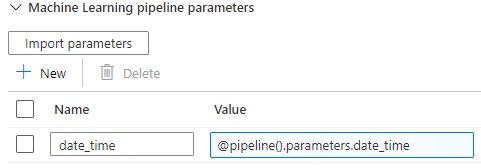

    from azureml.pipeline.core import PipelineParameter

    output_model_date = PipelineParameter(name="date_time", default_value="20220328")

Then I pass both references as arguments to my PythonScriptStep.

    from azureml.pipeline.steps import PythonScriptStep

    train = PythonScriptStep(
        name="Train model",
        script_name="train.py",
        source_directory="./",
        arguments=[
            "--output-model-dir", model_output_config,
            "--output-model-date", output_model_date,
        ],
        compute_target=compute_target,
        runconfig=aml_run_config,
    )

Finally, in my training script, I read the actual value of the PipelineParameter and join the two arguments to build the full path:

    import argparse
    import os

    parser = argparse.ArgumentParser()
    parser.add_argument("--output-model-dir", type=str, dest="output_model_dir", default="output_model", help="Directory to store trained output models and artifacts")
    parser.add_argument("--output-model-date", type=str, dest="output_model_date", default="20220328", help="Date to use in the name of the output model folder")
    args = parser.parse_args()

    output_model_dir = args.output_model_dir
    output_model_date = args.output_model_date

    # Full path: <mounted output dir>/<date>
    full_output_model_dir = os.path.join(output_model_dir, output_model_date)

Now I can save my model artifacts directly to 'full_output_model_dir'.
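For anyone who wants to try the script side on its own, here is a minimal sketch of the same idea using only the standard library. It is not my exact train.py: the function names `parse_args` and `prepare_output_dir` are made up for illustration. It also rests on two assumptions. The first is that the date always looks like '20220328' (YYYYMMDD), so the sketch raises an error if the value does not parse as a date. The second is that the date subfolder may not exist yet, so the sketch creates it before anything is written.

```python
import argparse
import os
from datetime import datetime


def parse_args(argv=None):
    # Same two arguments that the PythonScriptStep passes to the script.
    parser = argparse.ArgumentParser()
    parser.add_argument("--output-model-dir", type=str, dest="output_model_dir",
                        default="output_model",
                        help="Directory to store trained output models and artifacts")
    parser.add_argument("--output-model-date", type=str, dest="output_model_date",
                        default="20220328",
                        help="Date to use in the name of the output model folder")
    return parser.parse_args(argv)


def prepare_output_dir(output_model_dir, output_model_date):
    # Assumption: the date is formatted as YYYYMMDD (e.g. '20220328').
    # strptime raises ValueError if the value cannot be parsed that way.
    datetime.strptime(output_model_date, "%Y%m%d")

    full_output_model_dir = os.path.join(output_model_dir, output_model_date)

    # Assumption: the date subfolder may not exist yet, so create it.
    os.makedirs(full_output_model_dir, exist_ok=True)
    return full_output_model_dir
```

In the training script this would be used as `args = parse_args()` followed by `full_output_model_dir = prepare_output_dir(args.output_model_dir, args.output_model_date)`, and the model artifacts are then written under that folder.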