Data Compression in Azure Data Factory via Data Flow

Suman C

Hi Microsoft Team,

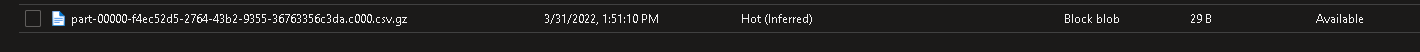

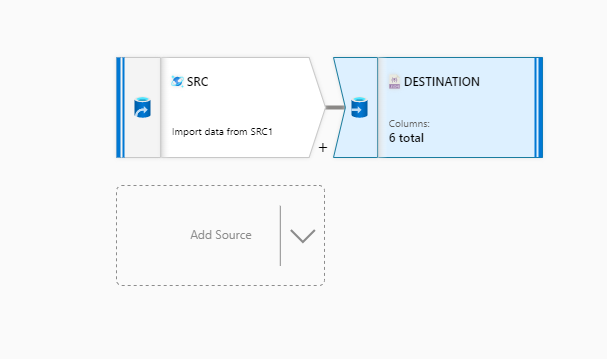

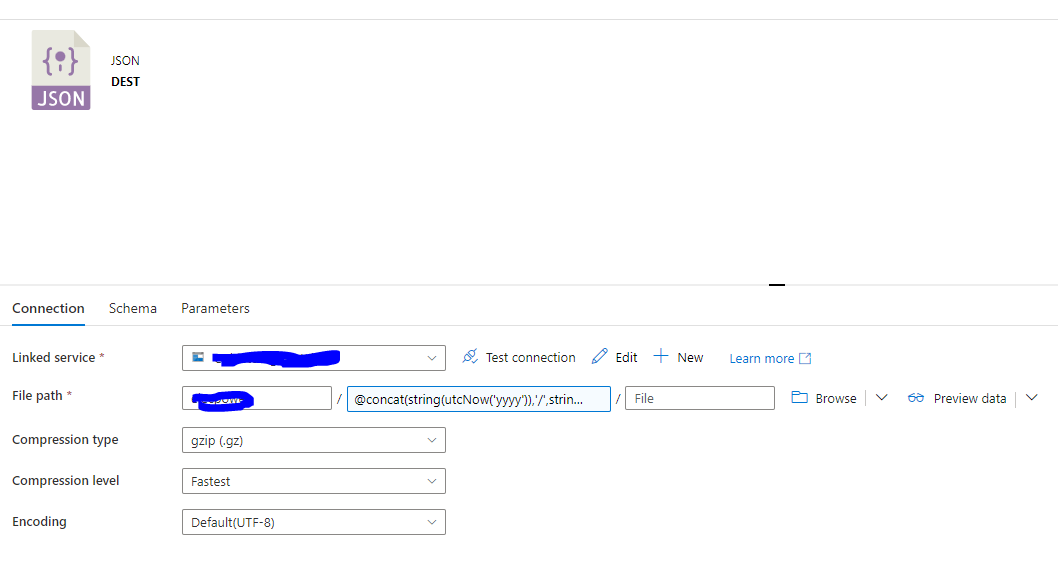

We have an Azure Data Factory pipeline that executes a simple Data Flow, which reads data from Cosmos DB and sinks it into Data Lake. In the sink's Optimize settings, we use Partition type Key, with a Cosmos DB identifier as the unique-value partition column. The destination dataset also has its compression type set to gzip and its compression level set to Fastest.

Problem:

The data is partitioned as expected, but the files created do not appear to be compressed. Is this the expected behavior, or is it a bug? Can someone please help?
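
One way to confirm whether an output file is really gzip-compressed, regardless of its name or extension, is to look at its first two bytes: every gzip stream starts with `0x1f 0x8b`. Below is a minimal sketch in Python, assuming a partition file has been downloaded locally. The helper name `is_gzip` and the temporary demo files are hypothetical and only for illustration; they are not part of Data Factory.

```python
import gzip
import os
import tempfile

# Every gzip stream begins with these two bytes (RFC 1952).
GZIP_MAGIC = b"\x1f\x8b"


def is_gzip(path):
    """Return True if the file at `path` starts with the gzip magic bytes."""
    with open(path, "rb") as f:
        return f.read(2) == GZIP_MAGIC


if __name__ == "__main__":
    # Demo with two throwaway files: one gzip-compressed, one plain.
    tmp_dir = tempfile.mkdtemp()

    compressed_path = os.path.join(tmp_dir, "part-compressed")
    with gzip.open(compressed_path, "wb") as f:
        f.write(b'{"id": "1"}\n')

    plain_path = os.path.join(tmp_dir, "part-plain")
    with open(plain_path, "wb") as f:
        f.write(b'{"id": "1"}\n')

    for path in (compressed_path, plain_path):
        print(path, "gzip" if is_gzip(path) else "not gzip")
```

Running the same check against a file downloaded from the sink folder would show whether the data itself is uncompressed or whether only the file name lacks a `.gz` extension.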

Azure Data Lake Storage

An Azure service that provides an enterprise-wide hyper-scale repository for big data analytic workloads and is integrated with Azure Blob Storage.

Azure Cosmos DB

An Azure NoSQL database service for app development.

Azure Data Factory

An Azure service for ingesting, preparing, and transforming data at scale.
